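Running large language models (LLMs) on your local machine is one of the most exciting frontiers in AI development. At Docker, our goal is to make this process as simple and accessible as possible. That's why we built Docker Model Runner, a tool that lets you download and run LLMs with a single command.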

Until now, Model Runner could run inference on the CPU or accelerate it on NVIDIA GPUs (via CUDA) and Apple Silicon (via Metal). Today, we're excited to announce a major step forward in democratizing local AI: Docker Model Runner now supports Vulkan.

This means you can use hardware acceleration for LLM inference on a much wider range of GPUs, including integrated GPUs and GPUs from AMD, Intel, and other vendors that support the Vulkan API.

Why Vulkan matters: AI for everyone's GPU

So, what's the big deal with Vulkan?

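Vulkan is a modern, cross-platform graphics and compute API. Unlike CUDA, which is specific to NVIDIA GPUs, or Metal, which targets Apple hardware, Vulkan is an open standard that works across a wide range of graphics cards. If you have a modern AMD or Intel GPU, or even an integrated GPU in your laptop, you can now get a significant performance boost for local AI workloads.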

By integrating Vulkan (thanks to the underlying llama.cpp engine), we're unlocking GPU-accelerated inference for a much broader community of developers and enthusiasts. More hardware, more speed, more fun!

Getting started: it just works

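The best part? You don't need to do anything special to enable it. We believe in convention over configuration. Docker Model Runner automatically detects compatible Vulkan hardware and uses it for inference. If no Vulkan-compatible GPU is found, it seamlessly falls back to the CPU.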
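The detect-then-fall-back behavior described above can be pictured with a small sketch. This is not Model Runner's actual detection code, which this post does not show. It assumes the `vulkaninfo` utility from the Vulkan tools package as a stand-in probe, and the function names `vulkan_available` and `choose_backend` are hypothetical.

```python
import shutil
import subprocess


def vulkan_available(probe: str = "vulkaninfo") -> bool:
    """Return True if the probe tool is on PATH and exits successfully.

    Assumption: a working `vulkaninfo --summary` is treated as a rough
    signal that a Vulkan driver and device are present.
    """
    exe = shutil.which(probe)
    if exe is None:
        return False
    try:
        result = subprocess.run(
            [exe, "--summary"],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=10,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


def choose_backend(has_vulkan: bool) -> str:
    """Prefer the GPU backend when Vulkan is usable, otherwise use the CPU."""
    return "vulkan" if has_vulkan else "cpu"
```

Calling `choose_backend(vulkan_available())` yields a backend name without any user configuration, which is the convention-over-configuration idea in miniature.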

Ready to try it? Just run the following command in your terminal:

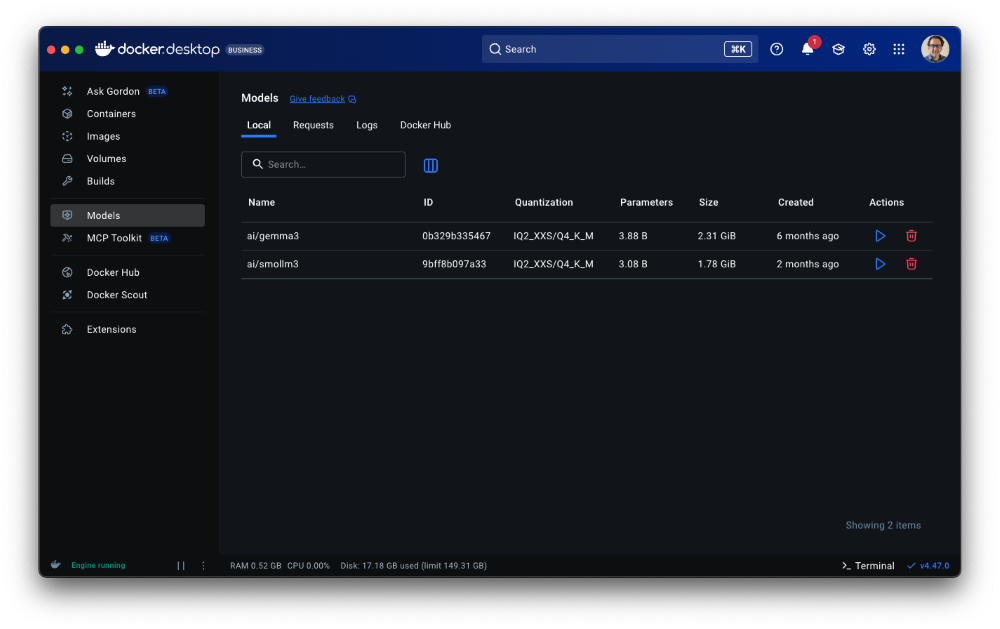

docker model run ai/gemma3

This command will:

- Pull the Gemma 3 model.

- Detect whether you have a Vulkan-compatible GPU with the required drivers installed.

- Run the model, using the GPU to accelerate inference when one is detected.
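For scripted use, the same command can be wrapped in a few lines of Python. This is a minimal sketch: it assumes the `docker` CLI with Model Runner enabled is on your `PATH`, it relies on `docker model run` accepting an optional one-shot prompt after the model name, and the helper names `build_run_command` and `run_model` are hypothetical.

```python
import shutil
import subprocess
from typing import List, Optional


def build_run_command(model: str, prompt: Optional[str] = None) -> List[str]:
    """Build the argv for `docker model run`, optionally with a one-shot prompt."""
    cmd = ["docker", "model", "run", model]
    if prompt is not None:
        cmd.append(prompt)
    return cmd


def run_model(model: str, prompt: str) -> str:
    """Run a single prompt against a local model and return the CLI's stdout.

    Raises RuntimeError if the docker CLI is missing, and
    subprocess.CalledProcessError if the command exits with an error.
    """
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    result = subprocess.run(
        build_run_command(model, prompt),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

For example, `run_model("ai/gemma3", "Say hello in one sentence.")` would pull the model on first use and return the reply as a string. Whether the GPU or the CPU serves the request is decided by Model Runner, not by this wrapper.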

It's that easy. You can now chat with a powerful LLM running directly on your own machine, faster than ever.

Help us shape the future of local AI!

Docker Model Runner is an open source project, and we're building it in the open with our community. Your contributions are essential as we expand hardware support and add new features.

Head over to the GitHub repository to get involved:

https://github.com/docker/model-runner

Star the repository to show your support, fork it to experiment, and consider contributing your own improvements.

Learn more

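- Check out the Docker Model Runner general availability announcement.

- Visit the Model Runner GitHub repository. Docker Model Runner is open source, and we welcome collaboration and contributions from the community.

- Get started with Model Runner using a simple hello GenAI application.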