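Building an AI agent can be a complex undertaking. But it can also come down to a fairly simple combination of answers to the following questions:

- What AI backend powers the intelligent, fuzzy computation?

- What tools do you need to give the AI so it can access external systems or run predefined software commands?

- What application ties these together and supplies the agent's business logic (for example, if you're building a marketing agent, what makes it know more about marketing, or about your specific use case, than a generic ChatGPT model)?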

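Today, a very common way to build an agent is to extend an AI assistant or chatbot with business logic, supplied as a "system prompt" or as configurable profile instructions (more on these later), and with tools exposed through the Model Context Protocol (MCP).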
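To make that pattern concrete, here is a minimal Python sketch of the payload an OpenAI-compatible chat API typically receives: the business logic travels as a system message, and each tool is advertised with a JSON Schema for its arguments. The model name, the prompt text, and the `get_transcript` tool are hypothetical placeholders; an agent such as Goose builds the equivalent request internally, and its exact payload may differ.

```python
import json

# Hypothetical business logic; in this project it would live in .goosehints or Goose's config.
SYSTEM_PROMPT = "You are a marketing assistant. Keep answers short and concrete."

# A tool described in the OpenAI-compatible "tools" format.
# The name and parameter schema are illustrative, not those of the real MCP server.
TRANSCRIPT_TOOL = {
    "type": "function",
    "function": {
        "name": "get_transcript",
        "description": "Fetch the transcript of a YouTube video.",
        "parameters": {
            "type": "object",
            "properties": {"url": {"type": "string", "description": "Video URL"}},
            "required": ["url"],
        },
    },
}


def build_request(model: str, user_message: str) -> dict:
    """Combine business logic (system message), the user's turn, and available tools."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_message},
        ],
        "tools": [TRANSCRIPT_TOOL],
    }


if __name__ == "__main__":
    print(json.dumps(build_request("example-model", "Summarize this video."), indent=2))
```

Changing the agent's "personality" then means editing only the system message, while adding a capability means adding an entry to `tools`.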

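In this article, we'll walk through an example of building such an agent (with a toy feature that summarizes YouTube videos) using open source tools. For isolation and reproducibility, everything runs in containers. We'll use Docker Model Runner to run the LLM locally, so the agent processes your data privately.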

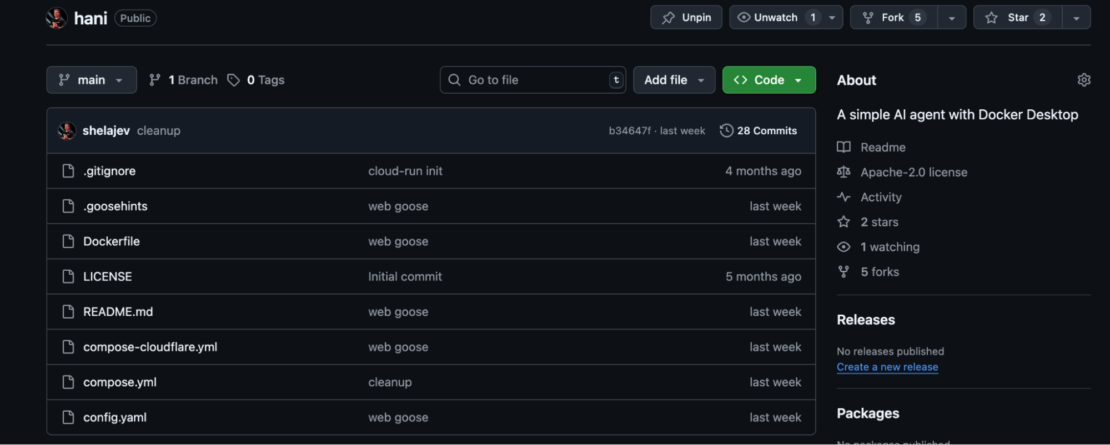

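The project is available in the GitHub repository https://github.com/shelajev/hani.

We'll use Goose as the agent and the Docker MCP Gateway to access MCP tools.

Overall, hani (Estonian for goose, I learned that today!) is a multi-component system defined and orchestrated by Docker Compose.

Here's a brief overview of the components involved. Taken as a whole, it's a bit of a hack, but I find it a really interesting setup, and even if you never use it to build agents, learning about the technologies it uses may come in handy someday.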

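| Component | Role |
|---|---|
| Goose | The AI agent responsible for carrying out tasks. It is configured to use the local LLM for inference and the MCP Gateway for tool access. |
| Docker Model Runner | Runs a local LLM inference engine on the host. The Goose agent connects to its OpenAI-compatible API endpoint. |
| MCP Gateway | A proxy that aggregates external MCP tools and isolates them in their own containers. It gives the agent a single authenticated endpoint and mitigates security risks such as command injection. |
| ttyd | A command-line utility that exposes the terminal of the container running the Goose CLI as a web application you can open in a browser. |
| cloudflared | (Optional) Local |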

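Implementation details

The environment is defined by two main configuration files: a Dockerfile that builds the agent's image and a compose.yml that orchestrates the services.

Let's start with the Dockerfile. It creates the container image for the hani service, with all the required dependencies, and configures Goose.

After the dependencies are installed, a few lines are worth highlighting: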

```dockerfile
RUN wget -O /tmp/ttyd.x86_64 https://github.com/tsl0922/ttyd/releases/download/1.7.7/ttyd.x86_64 && \
    chmod +x /tmp/ttyd.x86_64 && \
    mv /tmp/ttyd.x86_64 /usr/local/bin/ttyd
```

This installs ttyd, which is very handy when you have a Docker image containing a CLI application but want a browser-based experience.

```dockerfile
RUN wget -qO- https://github.com/block/goose/releases/download/stable/download_cli.sh | CONFIGURE=false bash && \
    ls -la /root/.local/bin/goose && \
    /root/.local/bin/goose --version
```

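This snippet installs Goose. If you like living on the edge, you can add CANARY=true to get the latest and greatest, though potentially unstable, version.

Note that we also set CONFIGURE=false, because we configure Goose by supplying a ready-made configuration file with the following two lines of the Dockerfile: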

```dockerfile
COPY config.yaml /root/.config/goose/config.yaml
RUN chmod u-w /root/.config/goose/config.yaml
```

We do the same with .goosehints, a file that Goose reads and whose instructions it takes into account (when the developer extension is enabled). This is how we give the agent its business logic:

```dockerfile
COPY .goosehints /app/.goosehints
```

The rest is pretty straightforward. The one thing to keep in mind is that we don't run goose directly; we run ttyd, which in turn runs goose:

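```dockerfile
# Together these run "ttyd -W goose"; -W (--writable) lets browser clients type
# into the terminal, which ttyd otherwise serves read-only.
ENTRYPOINT ["ttyd", "-W"]
CMD ["goose"]
```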

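This would be a great moment to look at the Goose configuration, but we need to define the pieces before we can glue them together, so let's look at the compose file first.

The compose.yml file uses Docker Compose to define and connect the services in the stack.

Let's start with the models section of compose.yml: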

```yaml
models:
  qwen3:
    # pre-pull the model when starting Docker Model Runner
    model: hf.co/unsloth/qwen3-30b-a3b-instruct-2507-gguf:q5_k_m
    context_size: 16355
```

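First, we define the model that serves as the brain of the operation. If the model is available locally in Docker Model Runner, it is loaded on demand to serve requests. If you haven't used it before, it is pulled automatically from Docker Hub, Hugging Face, or an OCI artifact registry. Even small models are fairly large downloads, so this can take a while; you can pull the model ahead of time with: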

```shell
docker model pull $MODEL_NAME
```
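The model string follows the same `registry/repository:tag` shape as a container image reference, which is how a single line can point at Docker Hub, Hugging Face (`hf.co`), or another OCI registry. The sketch below is a simplified version of Docker's reference-naming convention (the first path component counts as a registry only if it looks like a hostname); it is illustrative only, is not Docker Model Runner's actual code, and ignores digests. The `docker.io` and `latest` defaults are assumptions that mirror how image references behave.

```python
from typing import NamedTuple


class ModelRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_model_ref(ref: str, default_registry: str = "docker.io",
                    default_tag: str = "latest") -> ModelRef:
    """Split a 'registry/repository:tag' reference (simplified; no digests)."""
    name, sep, tag = ref.rpartition(":")
    if not sep or "/" in tag:
        # No tag: either there is no ':' at all, or the ':' belongs to a registry port.
        name, tag = ref, default_tag
    first, slash, rest = name.partition("/")
    # As with image references, the first component is a registry only if it looks like a host.
    if slash and ("." in first or ":" in first or first == "localhost"):
        return ModelRef(first, rest, tag)
    return ModelRef(default_registry, name, tag)


if __name__ == "__main__":
    print(parse_model_ref("hf.co/unsloth/qwen3-30b-a3b-instruct-2507-gguf:q5_k_m"))
```

For the model in this article, the registry is `hf.co`, the repository is `unsloth/qwen3-30b-a3b-instruct-2507-gguf`, and the tag `q5_k_m` names the quantization variant within that GGUF repository.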

Now for the tools. The MCP Gateway is a "regular" application that runs in a container, so we bring it in by defining a service and pointing it at the right Docker image:

```yaml
services:
  mcp-gateway:
    image: docker/mcp-gateway:latest
    use_api_socket: true
    command:
      - --transport=sse
      - --servers=youtube_transcript
```

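These flags tell the gateway to act as an SSE MCP server itself and specify which MCP servers to enable in this deployment. The MCP Toolkit catalog contains more than 100 useful MCP servers; since this is a toy example, we enable a toy MCP server that pulls YouTube video transcripts.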
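With `--transport=sse`, clients talk to the gateway over Server-Sent Events. In the HTTP+SSE transport described in the MCP specification, the client opens a long-lived GET stream, the server first sends an `endpoint` event naming the URL to POST JSON-RPC messages to, and responses then arrive as `message` events. The Python sketch below parses such a `text/event-stream` (a subset of the WHATWG format: `event` and `data` fields, comments, and blank-line dispatch) to show what travels over the wire. It is not the gateway's or Goose's implementation, and `id`/`retry` fields are deliberately ignored.

```python
from typing import Iterable, Iterator, NamedTuple


class SSEEvent(NamedTuple):
    event: str
    data: str


def parse_sse(lines: Iterable[str]) -> Iterator[SSEEvent]:
    """Yield events from text/event-stream lines (subset of the WHATWG SSE format)."""
    event_type, data_lines = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the buffered event, if it carried any data.
            if data_lines:
                yield SSEEvent(event_type, "\n".join(data_lines))
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # Comment line, often used as a keep-alive.
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # A single leading space after the colon is not part of the value.
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
        # "id" and "retry" fields are ignored in this sketch.
```

Feeding it the lines of a response body yields one `SSEEvent` per dispatched event; the JSON-RPC payload of each `message` event is then in `data`.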

With the dependencies in place, the main application is built from the local project context, and the GOOSE_MODEL environment variable is set to the model that Docker Model Runner actually loads:

```yaml
services:
  hani:
    build:
      context: .
    ports:
      - "7681:7681"
    depends_on:
      - mcp-gateway
    env_file:
      - .env
    models:
      qwen3:
        model_var: GOOSE_MODEL
```

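Pretty simple, right? The trick is configuring Goose inside the container to use all of these services. Remember how we copied config.yaml into the container? That's what this file is for.

First, we configure the extensions: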

```yaml
extensions:
  developer:
    display_name: null
    enabled: true
    name: developer
    timeout: null
    type: builtin
  mcpgateway:
    bundled: false
    description: 'Docker MCP gateway'
    enabled: true
    name: mcpgateway
    timeout: 300
    type: sse
    uri: http://mcp-gateway:8811/sse
```

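The mcpgateway extension connects to http://mcp-gateway:8811/sse, the URL where, per the compose file, the MCP Gateway runs. The developer extension provides some handy built-in tools, and it also enables .goosehints support for us.

All that's left is to connect the brain: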

```yaml
GOOSE_PROVIDER: openai
OPENAI_BASE_PATH: engines/llama.cpp/v1/chat/completions
OPENAI_HOST: http://model-runner.docker.internal
```

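This configures Goose to connect to the OpenAI-compatible API endpoint exposed by Docker Model Runner. Note that because Goose runs in a container, the traffic doesn't go through the host TCP connection (localhost:12434, which you may have seen in other tutorials) but through Docker's internal URL, model-runner.docker.internal.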
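A minimal Python sketch of the request that ends up at this endpoint, assuming the two settings are joined with a single slash and that `GOOSE_MODEL` holds the model name Compose injects through `model_var`. It uses only the standard library; the fallback values, the `send` helper, and the exact way Goose combines host and path are assumptions for illustration. Actually sending the request only works where `model-runner.docker.internal` resolves, that is, from a container on Docker Desktop.

```python
import json
import os
import urllib.request


def chat_completions_url() -> str:
    """Join OPENAI_HOST and OPENAI_BASE_PATH (assumed: exactly one slash between them)."""
    host = os.environ.get("OPENAI_HOST", "http://model-runner.docker.internal")
    base_path = os.environ.get("OPENAI_BASE_PATH", "engines/llama.cpp/v1/chat/completions")
    return host.rstrip("/") + "/" + base_path.lstrip("/")


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    # Compose fills GOOSE_MODEL via model_var; the fallback is only a placeholder.
    model = os.environ.get("GOOSE_MODEL", "placeholder-model")
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        chat_completions_url(),
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the reply text (needs a reachable Model Runner)."""
    with urllib.request.urlopen(request, timeout=120) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Printing the URL needs no network; call send(...) only where the host resolves.
    print(chat_completions_url())
```

Running the same code on the host instead would mean setting OPENAI_HOST to http://localhost:12434, the host TCP endpoint mentioned above.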

That's it!

If you want to show off your cool new agent to friends, add compose-cloudflare.yml to the setup. It creates a web tunnel from a random Cloudflare URL to port 7681 of the local hani container, where ttyd is running:

```yaml
services:
  cloudflared:
    image: cloudflare/cloudflared
    command: tunnel --url hani:7681
    depends_on:
      - hani
```

If you have Docker Desktop with Docker Model Runner enabled, you can now run the entire setup with a single compose command:

```shell
docker compose up --build
```

Or, to include the tunnel and expose Goose to the internet:

```shell
docker compose -f compose.yml -f compose-cloudflare.yml up --build
```

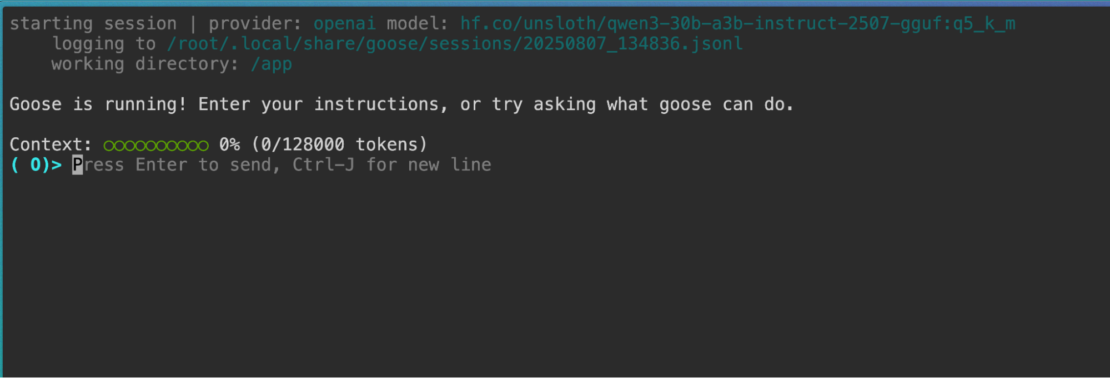

Open http://localhost:7681 (or the Cloudflare URL the container prints in its logs) and you'll see a Goose session in your browser.

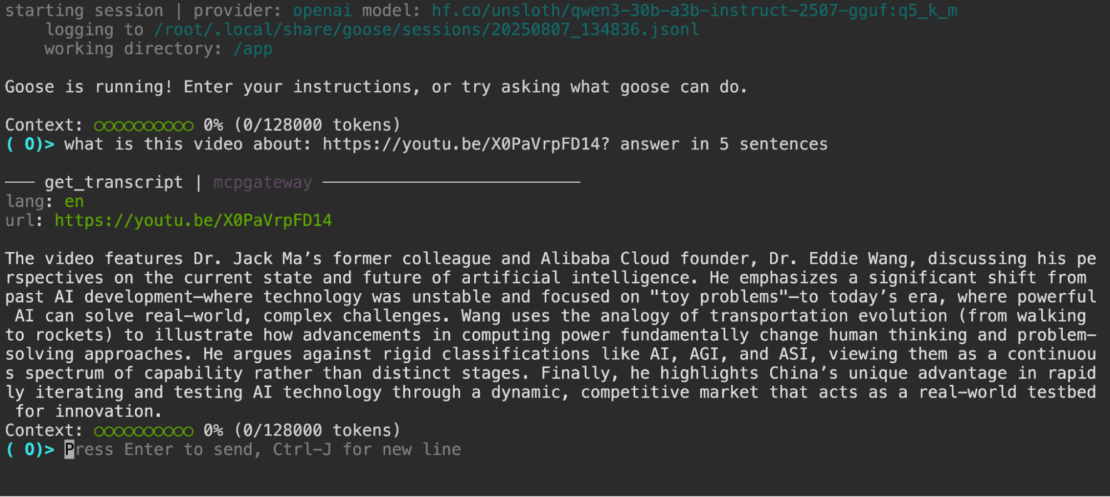

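It can also use tools, for example when you ask a question like:

What is this video about: https://youtu.be/X0PaVrpFD14? Answer in 5 sentences

You'll see the tool call and a sensible answer based on the video's transcript.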
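Behind that tool call, the agent sends the gateway an MCP `tools/call` request, which is a JSON-RPC 2.0 message, and then reads the text parts of the result. The Python sketch below only builds and decodes those messages. The tool name `get_transcript`, its `url` argument, and the `VIDEO_ID` URL are hypothetical placeholders; the real names come from the server's `tools/list` response.

```python
import itertools
import json

# Monotonically increasing JSON-RPC request ids for this client.
_request_ids = itertools.count(1)


def tools_call(name: str, arguments: dict) -> str:
    """Serialize an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def result_text(response: str) -> str:
    """Join the text content of a tools/call result; raise on protocol or tool errors."""
    message = json.loads(response)
    if "error" in message:
        raise RuntimeError(message["error"].get("message", "JSON-RPC error"))
    result = message["result"]
    texts = [part["text"] for part in result.get("content", []) if part.get("type") == "text"]
    if result.get("isError"):
        # Tool-level failures are reported inside the result, not as JSON-RPC errors.
        raise RuntimeError("\n".join(texts) or "tool reported an error")
    return "\n".join(texts)


if __name__ == "__main__":
    print(tools_call("get_transcript", {"url": "https://youtu.be/VIDEO_ID"}))
```

The transcript text returned this way is what the model then summarizes into the five-sentence answer.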

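One of the best things about this setup is that the architecture is modular and designed to be extended:

- Swapping models: You can change the LLM by pointing the model definition in compose.yml at any other GGUF model available on Docker Hub or Hugging Face.

- Adding tools: You can add new capabilities by enabling additional servers in the MCP Gateway, or by wiring up a standalone MCP server and editing the Goose configuration.

- Adding business logic: Just edit the .goosehints file and rerun the setup. Because everything runs in containers, it's all self-contained and ephemeral.

- Agent frameworks: The underlying platform (DMR, MCP Gateway, Compose) is framework-agnostic, so you can rework a similar setup to run other agent frameworks that support OpenAI-compatible APIs (LangGraph, CrewAI, and so on).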

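Conclusion

In this article, we saw how to build a private AI agent that runs locally in Docker containers in about the simplest way possible: by integrating the Goose AI assistant with the Docker MCP Gateway and running a local AI model with Docker Model Runner.

Because all of these technologies are open source, you can easily use this recipe to build your own workflow agents. The sample agent isn't particularly useful, since its capabilities are limited to chatting and fetching YouTube video transcripts, but it's a minimal starting point that you can take in any direction.

Clone the repository, edit the .goosehints file, add your favorite MCP servers to the configuration, run docker compose up, and you're good to go.

What tasks are you building agents for? Let me know, I'd love to hear: https://www.linkedin.com/in/shelajev/.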