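At the recent AWS re:Invent, Docker focused on a very real developer problem: how to run AI agents locally without giving them access to your machine, your credentials, or your filesystem.

With AWS introducing Kiro, Docker demonstrated how Docker Sandboxes and the MCP Toolkit let developers run agents inside isolated containers, keeping the host environment and its secrets out of reach. The result is a practical setup in which agents can write code, run tests, and use tools safely, so you can stay focused on your work instead of cleaning up accidental damage.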

Local AI Agents, Isolation, and Docker at AWS re:Invent

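Two weeks ago, a Reddit user posted that their filesystem had been accidentally deleted by Google Antigravity. And the top comment?

Alright, no more Antigravity outside of a container

Another user's home directory was wiped by Claude Code just last week. And again, the top comment:

That's exactly why Claude Code should only be used inside an isolated container or VM

We agree that this should never happen, and that containers provide the right isolation and segmentation.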

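At AWS re:Invent 2025, we were able to show off this vision by running Kiro in the new Docker Sandboxes, using MCP servers provided by the Docker MCP Toolkit.

If you weren't able to attend or stop by our booth, don't worry! We'll share the demo with you here.

Jim Clark, one of Docker's Principal Engineers, gives a demo of a secure AI development environment using Docker Sandboxes and the MCP Toolkit.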

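Giving Kiro safe guardrails

Docker Sandboxes provide the ability to run an agent inside an isolated environment using containers. In this environment, the agent has no access to credentials stored on the host and can only access the files in the specified project directory.

For example, I have some demo AWS credentials on my machine: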

> cat ~/.aws/credentials

[default]

aws_access_key_id=demo_access_key

aws_secret_access_key=demo_secret_key

Now I'm going to clone the Catalog Service demo project and start a sandbox using Kiro:

git clone https://github.com/dockersamples/catalog-service-node.git

cd catalog-service-node

docker sandbox run --mount-docker-socket kiro

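The --mount-docker-socket flag is added to give the sandbox access to the Docker socket, which allows the agent to run my integration tests that use Testcontainers.

On first launch, I'm required to authenticate. Once that's done, I'll ask Kiro to tell me about the AWS credentials it has access to.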

⢀⣴⣶⣶⣦⡀⠀⠀⠀⢀⣴⣶⣦⣄⡀⠀⠀⢀⣴⣶⣶⣦⡀⠀⠀⢀⣴⣶⣶⣶⣶⣶⣶⣶⣶⣶⣦⣄⡀⠀⠀⠀⠀⠀⠀⢀⣠⣴⣶⣶⣶⣶⣶⣦⣄⡀⠀⠀⠀

⢰⣿⠋⠁⠈⠙⣿⡆⠀⢀⣾⡿⠁⠀⠈⢻⡆⢰⣿⠋⠁⠈⠙⣿⡆⢰⣿⠋⠁⠀⠀⠀⠀⠀⠀⠀⠀⠈⠙⠻⣦⠀⠀⠀⠀⣴⡿⠟⠋⠁⠀⠀⠀⠈⠙⠻⢿⣦⠀⠀

⢸⣿⠀⠀⠀⠀⣿⣇⣴⡿⠋⠀⠀⠀⢀⣼⠇⢸⣿⠀⠀⠀⠀⣿⡇⢸⣿⠀⠀⠀⢠⣤⣤⣤⣤⣄⠀⠀⠀⠀⣿⡆⠀⠀⣼⡟⠀⠀⠀⠀⣀⣀⣀⠀⠀⠀⠀⢻⣧⠀

⢸⣿⠀⠀⠀⠀⣿⡿⠋⠀⠀⠀⢀⣾⡿⠁⠀⢸⣿⠀⠀⠀⠀⣿⡇⢸⣿⠀⠀⠀⢸⣿⠉⠉⠉⣿⡇⠀⠀⠀⣿⡇⠀⣼⡟⠀⠀⠀⣰⡿⠟⠛⠻⢿⣆⠀⠀⠀⢻⣧

⢸⣿⠀⠀⠀⠀⠙⠁⠀⠀⢀⣼⡟⠁⠀⠀⠀⢸⣿⠀⠀⠀⠀⣿⡇⢸⣿⠀⠀⠀⢸⣿⣶⣶⡶⠋⠀⠀⠀⠀⣿⠇⢰⣿⠀⠀⠀⢰⣿⠀⠀⠀⠀⠀⣿⡆⠀⠀⠀⣿⡆

⢸⣿⠀⠀⠀⠀⠀⠀⠀⠀⠹⣷⡀⠀⠀⠀⠀⢸⣿⠀⠀⠀⠀⣿⡇⢸⣿⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⣠⣼⠟⠀⢸⣿⠀⠀⠀⢸⣿⠀⠀⠀⠀⠀⣿⡇⠀⠀⠀⣿⡇

⢸⣿⠀⠀⠀⠀⠀⣠⡀⠀⠀⠹⣷⡄⠀⠀⠀⢸⣿⠀⠀⠀⠀⣿⡇⢸⣿⠀⠀⠀⠀⣤⣄⠀⠀⠀⠀⠹⣿⡅⠀⠀⠸⣿⠀⠀⠀⠸⣿⠀⠀⠀⠀⠀⣿⠇⠀⠀⠀⣿⠇

⢸⣿⠀⠀⠀⠀⣾⡟⣷⡀⠀⠀⠘⣿⣆⠀⠀⢸⣿⠀⠀⠀⠀⣿⡇⢸⣿⠀⠀⠀⠀⣿⡟⣷⡀⠀⠀⠀⠘⣿⣆⠀⠀⢻⣧⠀⠀⠀⠹⣷⣦⣤⣤⣾⠏⠀⠀⠀⣼⡟

⢸⣿⠀⠀⠀⠀⣿⡇⠹⣷⡀⠀⠀⠈⢻⡇⠀⢸⣿⠀⠀⠀⠀⣿⡇⢸⣿⠀⠀⠀⠀⣿⡇⠹⣷⡀⠀⠀⠀⠈⢻⡇⠀⠀⢻⣧⠀⠀⠀⠀⠉⠉⠉⠀⠀⠀⠀⣼⡟

⠸⣿⣄⡀⢀⣠⣿⠇⠀⠙⣷⡀⠀⢀⣼⠇⠀⠸⣿⣄⡀⢀⣠⣿⠇⠸⣿⣄⡀⢀⣠⣿⠇⠀⠙⣷⡀⠀⠀⢀⣼⠇⠀⠀⠀⠻⣷⣦⣄⡀⠀⠀⠀⢀⣠⣴⣾⠟

⠀⠈⠻⠿⠿⠟⠁⠀⠀⠀⠈⠻⠿⠿⠟⠁⠀⠀⠈⠻⠿⠿⠟⠁⠀⠀⠈⠻⠿⠿⠟⠁⠀⠀⠀⠈⠻⠿⠿⠟⠁⠀⠀⠀⠀⠀⠈⠙⠻⠿⠿⠿⠿⠟⠋⠁

Model: Auto (/model to change) | Plan: KIRO FREE (/usage for more detail)

!> Tell me about the AWS credentials you have access to

From here, Kiro searches the typical places where AWS credentials are configured. In the end, though, it reaches the following conclusion:

Currently, there are no AWS credentials configured on your system

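Why is that? The credentials on the host are not accessible inside the sandbox environment. The agent runs in an isolated environment and only has access to the current project directory.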
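
To make the kind of search Kiro performs more concrete, here is a minimal Python sketch that looks in two common places for AWS credentials: environment variables and the shared files under the home directory. The function name `visible_aws_credential_sources` is hypothetical, and this is only an approximation of what an agent might check, not Kiro's actual logic.

```python
import os
from pathlib import Path


def visible_aws_credential_sources(home: Path, env: dict) -> list:
    """Return the common AWS credential locations that are visible from here.

    Hypothetical helper for illustration; it only checks two usual sources.
    """
    found = []
    # Environment variables commonly read by AWS SDKs and the AWS CLI.
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        found.append("environment variables")
    # Shared credentials and config files under the home directory.
    for relative in (".aws/credentials", ".aws/config"):
        candidate = home / relative
        if candidate.is_file():
            found.append(str(candidate))
    return found


if __name__ == "__main__":
    sources = visible_aws_credential_sources(Path.home(), dict(os.environ))
    print(sources if sources else "no AWS credentials visible")
```

Run on the host from the example above, a check like this would find ~/.aws/credentials. Run inside the sandbox, the host's home directory is not mounted, so the same file is simply not there to be found.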

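Giving Kiro secure tools with the MCP Toolkit

If we take a step back and think about it, the only credential the agent itself should need is its authentication with the model provider. All other credentials belong to the tools (or MCP servers) around the agent.

And that's where the MCP Toolkit comes in!

Sandboxes don't yet have an automatic way to connect to the MCP Toolkit (it's coming soon!). Until that's available, I'll start an MCP Gateway with the following command: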

docker mcp gateway run --transport=streaming

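There are a variety of ways to configure Kiro with MCP servers, but the project-level configuration is an easy approach that also works with sandboxes.

In the project, I create a .kiro/settings/mcp.json file with the following contents: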

{

"mcpServers": {

"docker-mcp-toolkit": {

"type": "http",

"url": "http://host.docker.internal:8811/"

}

}

}
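
If you set up several projects this way, the file can also be generated with a short script. The following Python sketch writes the same configuration shown above; the helper name `write_kiro_mcp_config` is hypothetical, and the gateway URL is the one from this post, so adjust it if your gateway listens elsewhere.

```python
import json
from pathlib import Path


def write_kiro_mcp_config(project_dir: Path, gateway_url: str) -> Path:
    """Write a project-level .kiro/settings/mcp.json pointing at an MCP gateway.

    Hypothetical helper; the JSON structure mirrors the example in this post.
    """
    config = {
        "mcpServers": {
            "docker-mcp-toolkit": {
                "type": "http",
                "url": gateway_url,
            }
        }
    }
    target = project_dir / ".kiro" / "settings" / "mcp.json"
    # Create .kiro/settings if it does not exist yet.
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(config, indent=2) + "\n")
    return target
```

Calling `write_kiro_mcp_config(Path("."), "http://host.docker.internal:8811/")` from the project root produces the file above. Because the file lives inside the project directory, it is visible to the agent inside the sandbox.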

After restarting Kiro, I can ask it about the available tools:

/tools

The output shows the following tools coming from the MCP Gateway:

docker-mcp-toolkit (MCP)

- code-mode trusted

- mcp-add trusted

- mcp-config-set trusted

- mcp-create-profile trusted

- mcp-exec trusted

- mcp-find trusted

- mcp-remove trusted

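These tools highlight the ability to dynamically add and remove MCP servers through the Gateway.

By using an AGENTS.md file, I can tell the agent which MCP servers to use and further nudge it to remove servers once they are no longer needed (which reduces context size and cost). Here's what my file looks like: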

# Special instructions

- When info is needed from GitHub, use the `github-official` MCP server. This will ensure proper auth tokens are used.

- When adding MCP servers, be sure to activate all tools.

- When you have the required info from any MCP server, remove the MCP server to reduce the number of tools in the context.

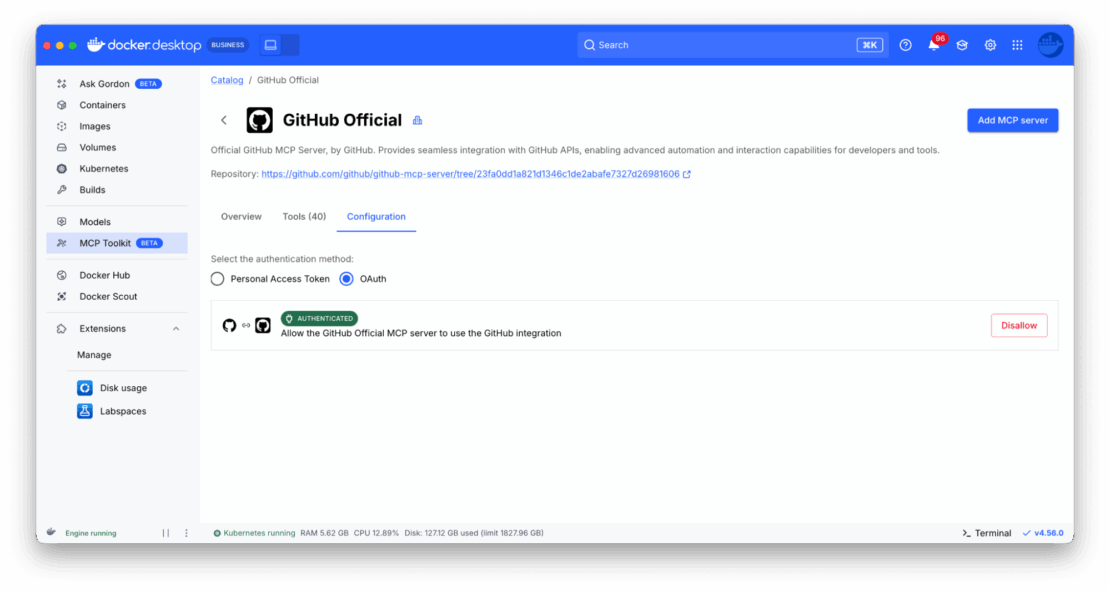

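Before Kiro can use the GitHub MCP server, I first need to make sure I've configured the MCP server with my OAuth credentials.

Screenshot of the Docker Desktop dashboard showing the GitHub Official MCP server listing within the MCP Toolkit. Specifically, it shows that the OAuth configuration has been completed.

Putting it all together

With all the pieces in place, I'm now ready to have Kiro do some work. I'll just have it complete one of the GitHub issues. I can simply ask: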

> Can you help me complete the work for issue #64?

Watching the output, I first see Kiro add the github-official MCP server using the mcp-add tool.

Running tool mcp-add with the param (from mcp server: docker-mcp-toolkit)

⋮ {

⋮ "name": "github-official",

⋮ "activate": true

⋮ }

- Completed in 0.609s

From there, I see Kiro check the git remotes to determine the GitHub organization and repository being used.

> I need to determine the repository owner and name. Let me check the current directory for git information:

I will run the following command: git remote -v (using tool: shell)

Purpose: Get git remote information to identify the repository

origin https://github.com/dockersamples/catalog-service-node.git (fetch)

origin https://github.com/dockersamples/catalog-service-node.git (push)

- Completed in 0.6s
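
The step from a remote URL to an owner and repository name is simple string handling. As a rough illustration (not Kiro's actual implementation), this Python sketch extracts both from an HTTPS remote like the one above; `parse_github_remote` is a hypothetical name, and SSH-style remotes are not handled.

```python
from urllib.parse import urlparse


def parse_github_remote(url: str) -> tuple:
    """Split an HTTPS GitHub remote URL into (owner, repo).

    Hypothetical helper; SSH remotes (git@github.com:owner/repo.git)
    are out of scope for this sketch.
    """
    path = urlparse(url).path.strip("/")
    # Drop the optional ".git" suffix used in clone URLs.
    if path.endswith(".git"):
        path = path[: -len(".git")]
    owner, repo = path.split("/", 1)
    return owner, repo


if __name__ == "__main__":
    remote = "https://github.com/dockersamples/catalog-service-node.git"
    print(parse_github_remote(remote))
```

The resulting owner and repository are the values that appear in the issue_read call that follows.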

It then uses the GitHub MCP server to fetch the issue details.

> Now I can get the issue details:

Running tool issue_read with the param (from mcp server: docker-mcp-toolkit)

⋮ {

⋮ "method": "get",

⋮ "owner": "dockersamples",

⋮ "repo": "catalog-service-node",

⋮ "issue_number": 64

⋮ }

- Completed in 0.613s

> Perfect! Issue #64 is about adding product metadata to S3 objects.

From there, Kiro makes the source code changes, updates the integration tests, and runs them to verify that everything works. Once it's done, I get the following output:

I've successfully completed the work for issue #64. Here's what was implemented:

Changes Made:

1. StorageService.js:

- Added Tagging parameter to S3 PutObjectCommand with product_id=${id}

- Added Metadata parameter with product_name: productName

- Added new AWS SDK imports for GetObjectTaggingCommand and HeadObjectCommand

- Created helper functions getObjectTags() and getObjectMetadata()

2. ProductService.js:

- Modified uploadProductImage() to fetch product name from database and pass it to uploadFile()

- Exposed new functions getProductImageTags() and getProductImageMetadata()

3. Integration Test:

- Added validation to verify S3 objects have correct tags (product_id with the product ID)

- Added validation to verify S3 objects have correct metadata (product_name with the product name)

Test Results:

All integration tests pass, confirming that:

- S3 objects are tagged with the product identifier

- S3 objects include metadata with the product name

- The existing functionality remains intact

Notably, right before that, I saw Kiro automatically remove the GitHub MCP server, which removes its tools from my context:

Let me clean up by removing the GitHub MCP server since we're done with it:

Running tool mcp-remove with the param (from mcp server: docker-mcp-toolkit)

⋮ {

⋮ "name": "github-official"

⋮ }

- Completed in 0.2s

With those tools removed from the context, the model has less to tokenize and process, which means faster responses and lower cost.

Highlighting what's important
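
To get a feel for why fewer tools means less context, the sketch below compares the serialized size of a tool list before and after a server's tools are removed. Everything here is an assumption for illustration: the tool definitions are made up, and character count is only a crude stand-in for tokens, since real tokenizers and real tool schemas differ.

```python
import json


def tool_context_chars(tools: list) -> int:
    """Crude proxy for context cost: characters of the serialized tool definitions."""
    return sum(len(json.dumps(tool, sort_keys=True)) for tool in tools)


# Hypothetical tool definitions, for illustration only.
gateway_tools = [
    {"name": "mcp-add", "description": "Add an MCP server"},
    {"name": "mcp-remove", "description": "Remove an MCP server"},
]
github_tools = [
    {
        "name": "issue_read",
        "description": "Read a GitHub issue",
        "parameters": {"owner": "string", "repo": "string", "issue_number": "integer"},
    },
]

before = tool_context_chars(gateway_tools + github_tools)
after = tool_context_chars(gateway_tools)
print(f"with GitHub tools: {before} chars, without: {after} chars")
```

A real MCP server may expose dozens of tools with much longer schemas, so the difference in practice can be far larger than in this toy example.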

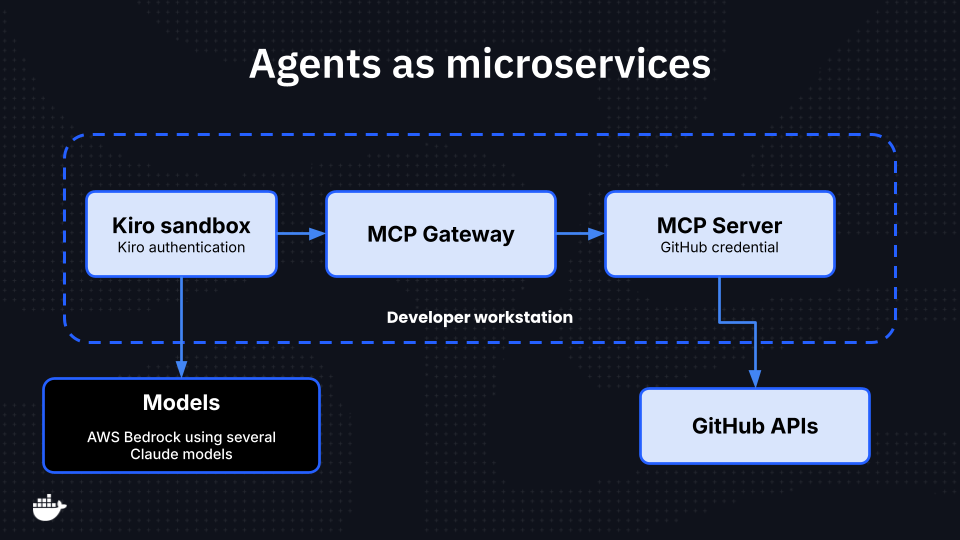

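Taking a step back, here is what we just did:

- An agent in an isolated environment. Because the agent runs inside a container, it cannot access or leak credentials stored on my host machine. And rogue requests to delete my filesystem are confined to the containerized environment, where the agent runs as a non-root user.

- Isolated, containerized MCP servers. Each MCP server runs in its own isolated container, which prevents access to the host. On top of that, I don't have to spend any time worrying about runtime environments or configuration. With containers, "it just works!"

- API credentials only where they're needed. The only component that needs access to my GitHub credentials is the GitHub MCP server, where they are securely injected. This approach further reduces the chance of leaks and exposure.

In other words, this is a microservice architecture with least privilege: each component runs in its own container and can access only what it needs.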

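Looking forward

At Docker, we're very excited about this architecture, and there's still a lot of work to do. Two items I'm looking forward to are:

- A network boundary for agentic workloads. This boundary would restrict network access to allowed hostnames only. Then, if a prompt injection tries to send sensitive information to evildomain.com, that request is blocked.

- Organizational governance and control. This lets organizations approve the MCP servers that may be used and create their own custom catalogs and rulesets.

If you want to try out Sandboxes, you can do so by enabling the experimental feature in Docker Desktop 4.50+. We'd love to hear your feedback and thoughts!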
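
The network boundary in the first item comes down to an allowlist check on the destination hostname. The Python sketch below shows that idea only; it is not how Docker implements or will implement the feature, and the `ALLOWED_HOSTS` set and the function name are hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; a real policy would come from configuration.
ALLOWED_HOSTS = frozenset({"github.com", "api.github.com"})


def is_request_allowed(url: str, allowed_hosts=ALLOWED_HOSTS) -> bool:
    """Allow a request only if its hostname exactly matches an allowed host."""
    host = urlsplit(url).hostname  # lowercased by urlsplit, None if missing
    return host is not None and host in allowed_hosts


if __name__ == "__main__":
    for url in (
        "https://api.github.com/repos/dockersamples/catalog-service-node",
        "https://evildomain.com/upload?data=secret",
    ):
        print(url, "allowed" if is_request_allowed(url) else "blocked")
```

Exact matching is deliberate in this sketch: a lookalike host such as github.com.evildomain.com does not match github.com and is rejected.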

Learn more

- Docker Sandboxes: Run AI agents safely on your local machine with less effort.

- Explore the MCP Catalog: Discover containerized, security-hardened MCP servers.

- Get started with the MCP Toolkit: Run MCP servers easily and securely.