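We're excited to announce that Docker Model Runner is now generally available (GA). In April 2025, Docker introduced the first beta release of Docker Model Runner, making it easier to manage, run, and distribute local AI models (particularly LLMs). In the short time since, the product has evolved rapidly, and continuous enhancements have brought it to a dependable level of maturity and stability.

In this blog post, we look back at the most important and widely appreciated features Docker Model Runner brings to developers, and look ahead at what you can expect in the near future.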

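What is Docker Model Runner?

Docker Model Runner (DMR) is built for developers. It makes it easy to pull, run, and distribute large language models (LLMs) directly from Docker Hub (in an OCI-compliant format) or from Hugging Face (when a model is available in GGUF format, the Hugging Face backend packages it as an OCI artifact on the fly).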

Tightly integrated with Docker Desktop and Docker Engine, DMR lets you serve models through an OpenAI-compatible API, package GGUF files as OCI artifacts, and interact with models from the command line, a graphical interface, or a developer-friendly REST API.
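Because the API is OpenAI-compatible, any HTTP client can talk to it. The following is a minimal sketch using only the Python standard library. It assumes host-side TCP access is enabled and that DMR listens on `localhost:12434` with its OpenAI-compatible routes under `/engines/v1`; treat that URL and the example model name `ai/smollm2` as assumptions to check against the official documentation for your setup.

```python
import json
import urllib.request

# Assumption: host-side TCP access is enabled and DMR listens on localhost:12434.
BASE_URL = "http://localhost:12434/engines/v1"


def build_chat_request(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat completion payload with a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


def chat(model: str, prompt: str, base_url: str = BASE_URL) -> str:
    """POST the payload to /chat/completions and return the reply text."""
    payload = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses carry the text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With DMR running and the model pulled, `print(chat("ai/smollm2", "Say hello in one sentence."))` prints the model's reply. Since the protocol is the same, the official `openai` Python client pointed at the same base URL should work too.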

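Whether you're building generative AI applications, experimenting with machine learning workflows, or embedding AI into your software development lifecycle, Docker Model Runner provides a consistent, secure, and efficient way to work with AI models locally.

For more details on Docker Model Runner and its capabilities, see the official documentation.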

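Why use Docker Model Runner?

Docker Model Runner lets developers experiment with and build AI applications, including agentic apps, using the same Docker commands and workflows they already use every day. There's no new tool to learn.

Unlike many new AI tools that add complexity or require extra approvals, Docker Model Runner fits neatly into existing enterprise infrastructure. It runs within your current security and compliance boundaries, so teams don't have to jump through hoops to adopt it.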

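Because Model Runner supports OCI-packaged models, you can store and distribute models through any OCI-compatible registry, including Docker Hub. For teams using Docker Hub, enterprise features such as Registry Access Management (RAM) provide policy-based access control that helps enforce guardrails at scale.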
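Model references follow the familiar Docker/OCI naming scheme, which is what lets the same model move between Docker Hub and other OCI registries. The sketch below illustrates the common normalization rules: the registry defaults to `docker.io`, the tag defaults to `latest`, and a first path component containing a dot or a colon (or equal to `localhost`) is treated as a registry host. It is a simplified illustration that ignores digests, not the parser DMR itself uses; `parse_model_ref` and `ModelRef` are hypothetical names.

```python
from typing import NamedTuple


class ModelRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_model_ref(ref: str) -> ModelRef:
    """Split a reference such as 'ai/smollm2' or 'registry.example.com/team/model:v1'.

    Simplified: digests (@sha256:...) are not handled.
    """
    registry = "docker.io"
    name = ref
    first, sep, rest = ref.partition("/")
    # The first path component is a registry host if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, name = first, rest
    # A tag is a colon inside the last path component.
    last_slash = name.rfind("/")
    colon = name.rfind(":")
    if colon > last_slash:
        name, tag = name[:colon], name[colon + 1:]
    else:
        tag = "latest"
    # Single-component Docker Hub names live under the 'library/' namespace.
    if registry == "docker.io" and "/" not in name:
        name = f"library/{name}"
    return ModelRef(registry, name, tag)
```

For instance, `parse_model_ref("ai/smollm2")` returns `ModelRef(registry='docker.io', repository='ai/smollm2', tag='latest')`, while a Hugging Face style reference such as `hf.co/<org>/<repo>` resolves to the `hf.co` registry.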

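11 Docker Model Runner features developers love most

Below are the features that stand out most and that the community appreciates most.

1. Powered by llama.cpp

Currently, DMR is built on top of llama.cpp, which we plan to keep supporting. At the same time, DMR is designed with flexibility in mind, and support for additional inference engines (such as MLX or vLLM) is being considered for future releases.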

2. GPU acceleration on macOS and Windows

GPU support lets you get the most out of your hardware: Apple Silicon on macOS, NVIDIA GPUs on Windows, and even ARM/Qualcomm acceleration, all managed seamlessly through Docker Desktop.

3. Native Linux support

Run DMR on Linux with Docker CE, making it ideal for automation, CI/CD pipelines, and production workflows.
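As a sketch of what this can look like in a CI job on a Linux runner with Docker CE, the Python script below wraps `docker model pull` and `docker model run` in `subprocess`. Passing a prompt to `docker model run` answers once and exits instead of starting an interactive chat. The model name and prompt are placeholders, the helper names are hypothetical, and the runner is assumed to have the Model Runner CLI plugin installed.

```python
import shutil
import subprocess


def model_commands(model: str, prompt: str) -> list[list[str]]:
    """Return the CLI invocations for a minimal smoke test."""
    return [
        ["docker", "model", "pull", model],
        # With a prompt argument, `docker model run` answers once and exits.
        ["docker", "model", "run", model, prompt],
    ]


def smoke_test(model: str = "ai/smollm2", prompt: str = "Reply with OK.") -> str:
    """Pull the model, run one prompt, and return the model's output."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    output = ""
    for cmd in model_commands(model, prompt):
        # check=True raises CalledProcessError on a non-zero exit, failing the CI step.
        result = subprocess.run(cmd, check=True, capture_output=True, text=True)
        output = result.stdout
    return output.strip()
```

A CI step could call `smoke_test()` and fail the job if it raises `subprocess.CalledProcessError` or returns an empty string.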

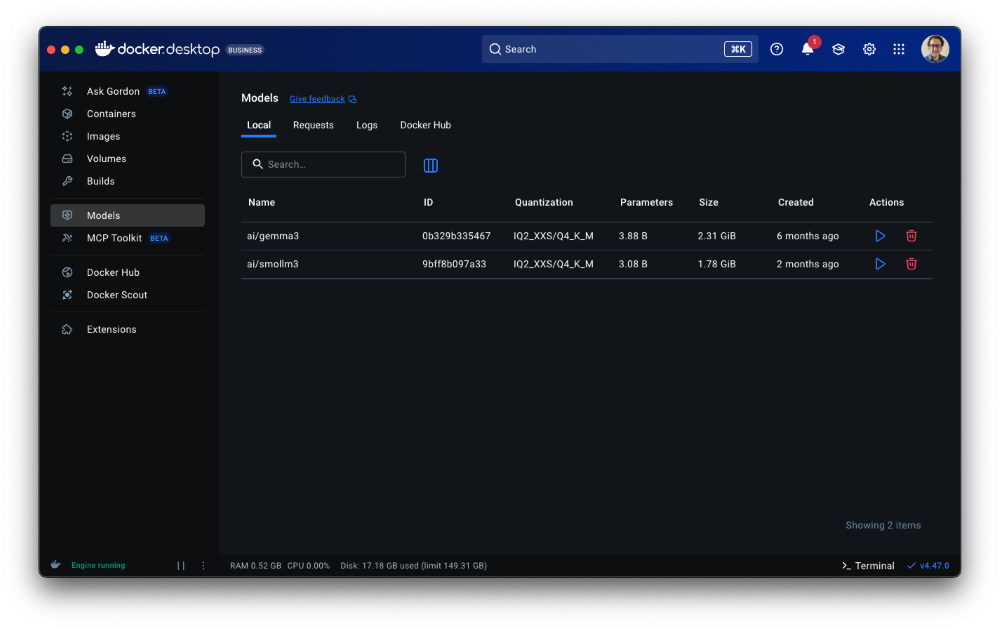

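4. CLI and UI experience

Use DMR from the Docker CLI (with both Docker Desktop and Docker CE) or from the Docker Desktop UI. The UI offers guided onboarding, automatically takes available resources (such as RAM and GPU) into account, and helps even first-time AI developers start serving models smoothly.

Figure 1: Docker Model Runner works in both Docker Desktop and the CLI, letting you run models locally with the same familiar Docker commands and workflows you already know.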

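5. Flexible model distribution

Pull and push models in OCI format from Docker Hub, or pull directly from Hugging Face repositories that host models in GGUF format, for maximum flexibility in sourcing and sharing models.

6. Open source and free

DMR is fully open source and free for everyone, lowering the barrier to entry for developers experimenting with and building on AI.

7. Secure and controlled

DMR runs in an isolated, controlled environment (a sandbox) that does not interfere with the main system or user data. Developers and IT administrators can fine-tune security and availability by enabling or disabling DMR and by configuring options such as host-side TCP support and CORS.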
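Whether host-side TCP support is enabled determines how code outside containers reaches DMR. The sketch below picks the OpenAI-compatible base URL depending on where the code runs. The hostname `model-runner.docker.internal` for containers on Docker Desktop and port `12434` for host access are assumptions based on common defaults; confirm both in the documentation and in your Docker Desktop settings. The `/.dockerenv` check is only a heuristic, and the function names are hypothetical.

```python
import os

# Assumptions (verify against the docs for your setup):
# - containers on Docker Desktop reach DMR at model-runner.docker.internal
# - host processes need host-side TCP enabled; 12434 is assumed as the port
CONTAINER_BASE_URL = "http://model-runner.docker.internal/engines/v1"
DEFAULT_TCP_PORT = 12434


def dmr_base_url(in_container: bool, tcp_port: int = DEFAULT_TCP_PORT) -> str:
    """Pick the OpenAI-compatible base URL depending on where the code runs."""
    if in_container:
        return CONTAINER_BASE_URL
    # From the host, this only works if host-side TCP support is enabled.
    return f"http://localhost:{tcp_port}/engines/v1"


def running_in_container() -> bool:
    """Heuristic: Docker creates /.dockerenv inside containers."""
    return os.path.exists("/.dockerenv")
```

Calling `dmr_base_url(running_in_container())` keeps the same code working both inside a container and on the host.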

8. Configurable inference settings

Developers can tailor the context length and llama.cpp runtime flags to their use case, with more configuration options coming soon.
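For scripting these settings, the sketch below builds a `docker model configure` command that sets the context size and forwards extra llama.cpp flags after `--`. The `--context-size` option and the `--` pass-through are assumptions here; check `docker model configure --help` on your version, and note that the set of accepted llama.cpp flags may be restricted. `configure_command` is a hypothetical helper.

```python
from typing import Optional, Sequence


def configure_command(
    model: str,
    context_size: int,
    runtime_flags: Optional[Sequence[str]] = None,
) -> list[str]:
    """Build a `docker model configure` invocation (option names are assumptions)."""
    if context_size <= 0:
        raise ValueError("context_size must be positive")
    cmd = ["docker", "model", "configure", f"--context-size={context_size}", model]
    if runtime_flags:
        # Arguments after `--` are assumed to be passed through to llama.cpp.
        cmd += ["--", *runtime_flags]
    return cmd
```

For example, `shlex.join(configure_command("ai/smollm2", 8192, ["--threads", "4"]))` yields `docker model configure --context-size=8192 ai/smollm2 -- --threads 4`; the list itself can be handed to `subprocess.run` unchanged.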

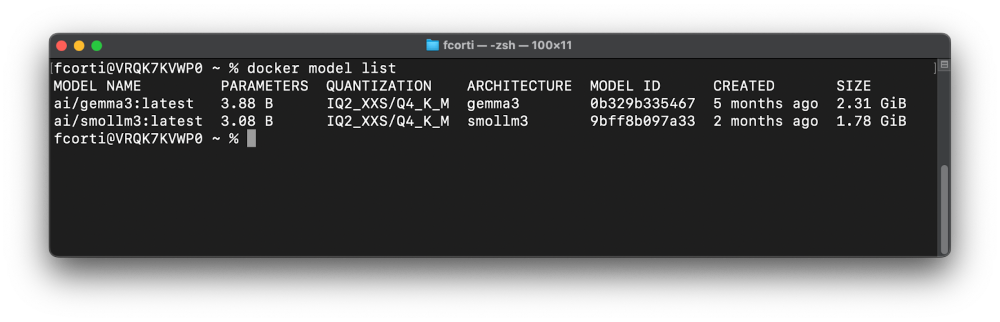

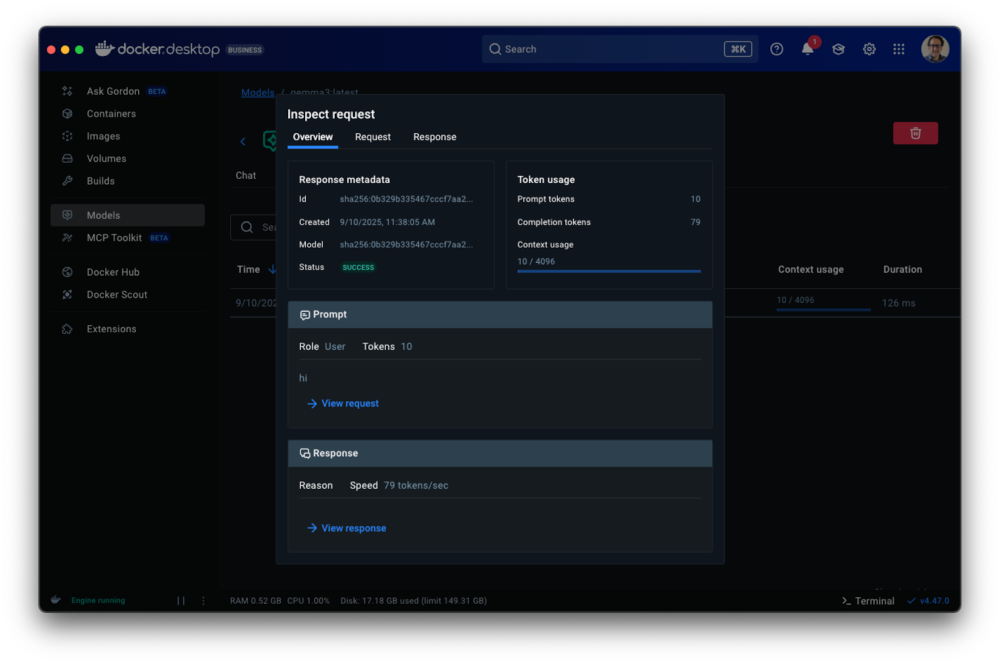

9. Debugging support

Built-in request/response tracing and inspection make it easier to understand token usage and framework/library behavior, helping developers debug and optimize their applications.
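The tracing view in Docker Desktop surfaces token usage for you; on the application side, OpenAI-compatible chat responses carry a `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens`. The sketch below reads it so you can log token counts next to your own traces. It assumes the standard OpenAI response shape and treats missing fields as zero; `TokenUsage`, `extract_usage`, and `log_usage` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class TokenUsage:
    prompt_tokens: int
    completion_tokens: int
    total_tokens: int


def extract_usage(response: dict) -> TokenUsage:
    """Read the OpenAI-style `usage` block from a chat completion response."""
    usage = response.get("usage") or {}
    prompt = int(usage.get("prompt_tokens", 0))
    completion = int(usage.get("completion_tokens", 0))
    # Fall back to the sum if the server omits total_tokens.
    total = int(usage.get("total_tokens", prompt + completion))
    return TokenUsage(prompt, completion, total)


def log_usage(response: dict) -> str:
    """Format a one-line summary suitable for application logs."""
    u = extract_usage(response)
    return f"prompt={u.prompt_tokens} completion={u.completion_tokens} total={u.total_tokens}"
```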

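Figure 2: The tracing and inspection tools built into Docker Desktop make debugging easier and give developers clear visibility into token usage and framework behavior.

10. Integration with the Docker ecosystem

DMR works with Docker Compose out of the box and is fully integrated with other Docker products such as Docker Offload (a cloud offload service) and Testcontainers, extending its reach to both local and distributed workflows.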
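When a Compose service is attached to a model, Compose can hand the model's endpoint and name to the service as environment variables, so application code does not need hard-coded URLs. The sketch below reads them with fallbacks. The variable names `LLM_URL` and `LLM_MODEL` are assumptions (they depend on how the model is declared in your Compose file), as are the fallback values and the helper name `load_model_config`.

```python
import os
from typing import Mapping, NamedTuple, Optional


class ModelConfig(NamedTuple):
    base_url: str
    model: str


def load_model_config(env: Optional[Mapping[str, str]] = None) -> ModelConfig:
    """Read the endpoint and model name injected by Compose (names are hypothetical)."""
    env = os.environ if env is None else env
    base_url = env.get("LLM_URL", "http://model-runner.docker.internal/engines/v1")
    model = env.get("LLM_MODEL", "ai/smollm2")
    # Drop a trailing slash so callers can append paths like "/chat/completions".
    return ModelConfig(base_url.rstrip("/"), model)
```

Passing an explicit mapping, as the `env` parameter allows, also makes the function easy to exercise in tests without touching the real environment.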

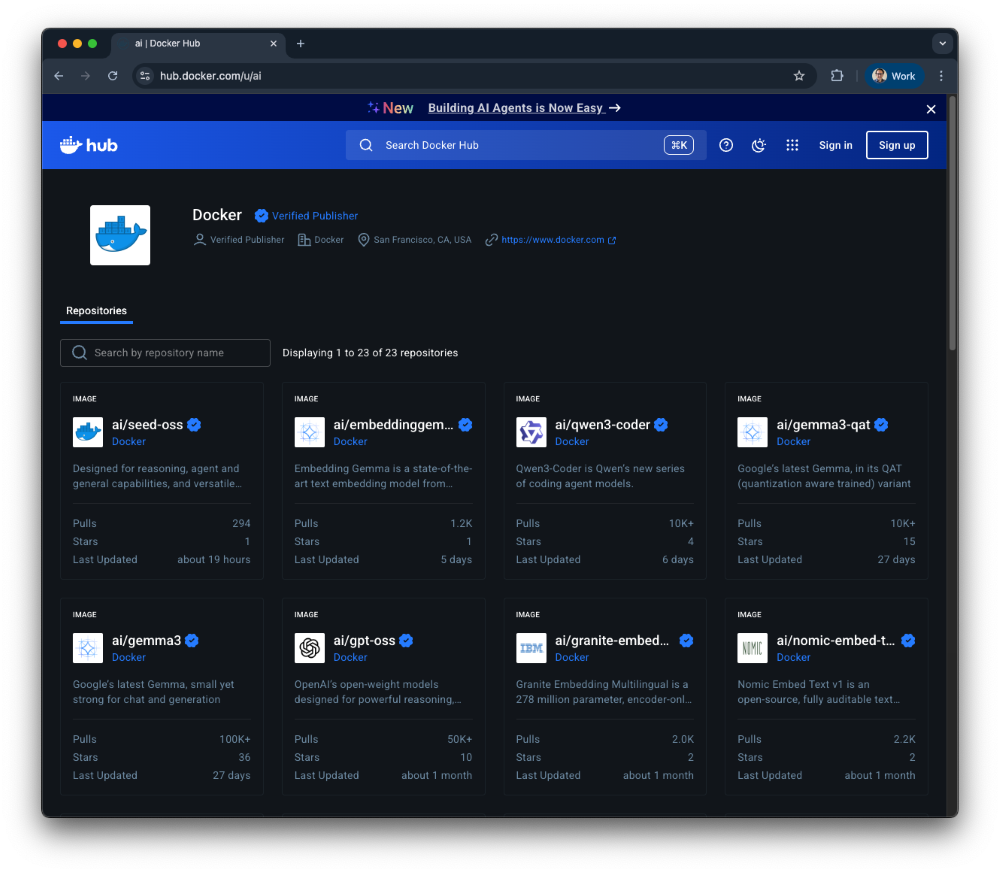

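11. Up-to-date model catalog

Access a curated catalog of the most popular and powerful AI models on Docker Hub. These models are free to pull and can be used in development, pipelines, staging, and even production.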
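Once pulled, catalog models should appear in the OpenAI-compatible model listing. The sketch below parses a `GET /models` response in the standard OpenAI list shape (`{"object": "list", "data": [{"id": ...}]}`). The base URL and the helper names are assumptions; only `fetch_model_ids` needs a running DMR.

```python
import json
import urllib.request


def model_ids(listing: dict) -> list[str]:
    """Extract and sort model IDs from an OpenAI-style model list response."""
    return sorted(item["id"] for item in listing.get("data", []) if "id" in item)


def fetch_model_ids(base_url: str = "http://localhost:12434/engines/v1") -> list[str]:
    """GET {base_url}/models and return the IDs (assumed endpoint; needs DMR running)."""
    with urllib.request.urlopen(f"{base_url}/models") as resp:
        return model_ids(json.load(resp))
```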

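Figure 3: The curated model catalog on Docker Hub, packaged as OCI artifacts and ready to run.

The road ahead

The future of Docker Model Runner is bright, and the GA release is only the first milestone. Here are some of the enhancements planned for upcoming releases.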

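Streamlined user experience

Our goal is to make DMR simple and intuitive for developers to use and debug. This includes richer response rendering in the chat-like interface in Docker Desktop and the CLI, multimodal support in the UI (already available through the API), integration with MCP tools, enhanced debugging features, and expanded configuration options for greater flexibility. Last but not least, we aim to provide smoother, more seamless integration with third-party tools and solutions across the AI ecosystem.

Enhancements and improved execution capabilities

We remain focused on continuously improving DMR's performance and flexibility for running local models. Upcoming enhancements include support for the most widely used inference libraries and engines, advanced configuration options at the engine and model level, and the ability to deploy Model Runner independently of Docker Engine for production-grade use cases, with many more improvements planned.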

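Frictionless onboarding

We want first-time AI developers to be able to start building applications right away, on a solid foundation. To that end, we plan to make onboarding to DMR even more seamless. This will include a guided, step-by-step experience to help developers get started quickly, paired with a set of sample applications built on DMR. These samples will focus on real-world use cases and best practices, providing a smooth entry point for experimenting with DMR and adopting it in everyday workflows.

Staying on top of model launches

As we continue to enhance inference capabilities, we remain committed to maintaining a first-class catalog of AI models directly on Docker Hub, the leading registry for OCI artifacts, including models. Our goal is for new, relevant models to be available on Docker Hub and runnable through DMR as soon as they are publicly released.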

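Conclusion

Docker Model Runner has come a long way in a short time, evolving from a beta release into a mature, stable inference engine that is now generally available. Its core mission has always been clear: make it simple, consistent, and secure for developers to pull, run, and serve AI models locally, using the familiar Docker CLI commands and tools they already know and love.

Now is the perfect time to get started. If you haven't already, install Docker Desktop and try Docker Model Runner today. Follow the official documentation to explore its capabilities and see for yourself how DMR can accelerate your journey toward building AI-powered applications.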

Learn more

- Read the Docker Model Runner quickstart guide.

- Visit the Model Runner GitHub repository. Docker Model Runner is open source, and we welcome collaboration and contributions from the community.

- Discover how Compose works with Model Runner to make building AI apps and agents easy.

- Learn how to build an AI tutor

- Explore how to use both local and remote models in hybrid AI workflows

- Build AI agents easily with Goose and Docker

- Using Model Runner with Hugging Face

- Enhancing AI-generated tests with Docker Model Runner

- Building generative AI apps in Java with Spring AI and Docker Model Runner

- Tool calling with local LLMs: a practical evaluation

- Behind the scenes: how we designed Docker Model Runner and what's next

- Why Docker chose OCI artifacts for AI model packaging

- What's new in Docker Model Runner

- Publishing AI models to Docker Hub

- How to build and run a GenAI chatbot from scratch with Docker Model Runner