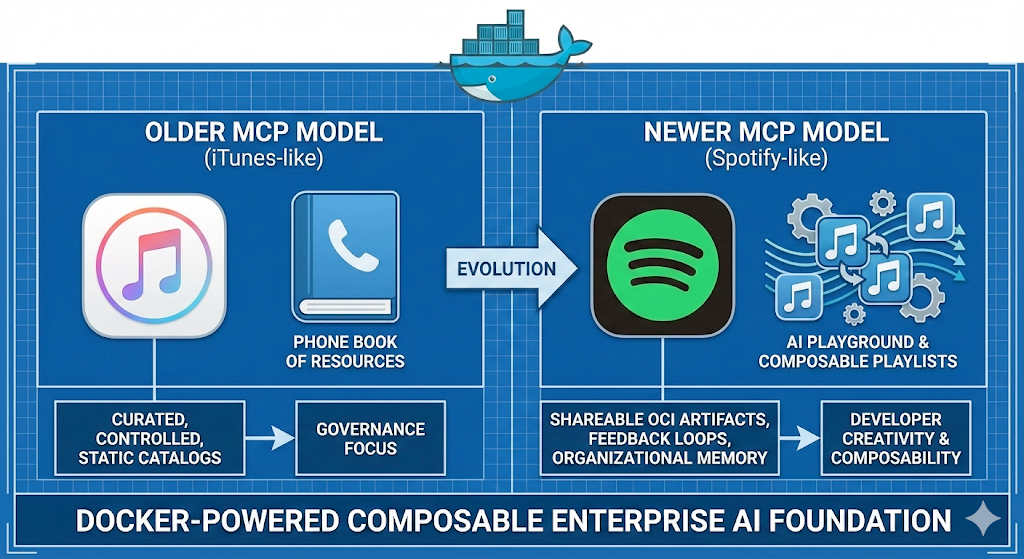

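Much of the discussion around Model Context Protocol infrastructure asks how to govern thousands of AI tools and how to monitor which MCP servers are running. That question is table stakes, but it undersells the opportunity. A better question is how to unlock MCP so it unleashes developer creativity from a trusted foundation.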

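The first question produces a phone book: a curated, governed, static directory of resources. The second points toward an AI playground where agents and developers interact and learn from each other. What if private catalogs of MCP servers became composable playlists that encourage mixing, reshaping, and endless combinations of tool calls? That requires treating MCP catalogs as OCI artifacts rather than as databases.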

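Cloud-native computing created a feedback loop in which infrastructure became code, deployments became declarative, and operational knowledge became a shareable artifact. MCP catalogs need to follow the same path. OCI artifacts, immutable versioning, and container-native workflows provide the model, because together they form a well-understood approach to balancing trust with creative evolution.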

Trust boundaries that grow and learn

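iTunes gave us a store. Spotify offered the store plus algorithmic discovery, playlist sharing, and taste profiles that improved over time. Private MCP catalogs can enable the same evolution. Today, that means curated, verified collections. Tomorrow, it becomes the foundation for self-improving discovery systems.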

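Tens of thousands of MCP servers are scattered across GitHub, registries, and forums. Community registries such as mcp.so, Smithery, Glama, and PulseMCP are trying to organize this ecosystem, but provenance is often unclear and quality varies widely. Private catalogs with tighter access controls offer centralized discovery, stronger security through verified servers, and visibility into which tools developers actually use. Organizations can build curated subsets of approved servers, add their own internal servers, and selectively import from community registries. That solves the phone book problem.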
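To make the curation step concrete, here is a minimal Python sketch of building a private catalog: keep only the community entries that appear on an approval list, then layer internal servers on top. The `ServerEntry` type, the `build_private_catalog` function, and every image reference below are hypothetical; real registries expose their own metadata formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerEntry:
    name: str
    image: str   # OCI image reference for the MCP server (hypothetical values below)
    source: str  # "community" or "internal"


def build_private_catalog(community, approved_names, internal):
    """Keep only approved community entries, then add internal servers.

    Internal entries win on name collisions, so an organization can
    shadow a community server with its own vetted build.
    """
    catalog = {e.name: e for e in community if e.name in approved_names}
    for entry in internal:
        catalog[entry.name] = entry
    return dict(sorted(catalog.items()))


community = [
    ServerEntry("github", "example.io/mcp/github:1.4.0", "community"),
    ServerEntry("random-scraper", "example.io/mcp/scraper:0.1", "community"),
    ServerEntry("postgres", "example.io/mcp/postgres:2.0.1", "community"),
]
internal = [ServerEntry("billing", "registry.internal/mcp/billing:3.2.0", "internal")]

catalog = build_private_catalog(community, {"github", "postgres"}, internal)
print(list(catalog))  # ['billing', 'github', 'postgres']
```

The unapproved `random-scraper` never enters the catalog, which is the "selective import" the paragraph describes.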

When outputs become inputs

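The real opportunity comes when agents automatically produce shareable artifacts and organizational learning. Suppose an agent faces a complex task: analyzing customer churn across three data sources. The MCP gateway builds a profile that captures the tools, the API keys, the sequence of operations, and documentation of what worked. That profile becomes an OCI artifact in your registry.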
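A profile like this could be stored as an ordinary OCI blob. The sketch below, assuming a hypothetical media type and illustrative profile fields, serializes the profile as canonical JSON and computes the `mediaType`/`digest`/`size` descriptor that the OCI image specification uses to reference content. Pushing the blob to a registry (for example with a tool such as ORAS) is left out. The sketch also records secret *names* rather than key material, which is an assumption about how such a profile should handle credentials.

```python
import hashlib
import json

# Hypothetical media type: OCI leaves artifact media types to the producer.
PROFILE_MEDIA_TYPE = "application/vnd.example.mcp.profile.v1+json"


def profile_blob(profile: dict) -> bytes:
    # Canonical JSON (sorted keys, compact separators) so identical
    # profiles always serialize, and therefore hash, identically.
    return json.dumps(profile, sort_keys=True, separators=(",", ":")).encode("utf-8")


def descriptor(blob: bytes, media_type: str) -> dict:
    # mediaType, digest, and size are the required fields of an OCI descriptor.
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


# Illustrative profile content; field names are assumptions, not a standard schema.
churn_profile = {
    "name": "customer-churn-analysis",
    "tools": ["warehouse.query", "crm.lookup", "support.tickets"],
    "secrets": ["WAREHOUSE_API_KEY", "CRM_API_KEY"],  # names only, never values
    "sequence": ["warehouse.query", "support.tickets", "crm.lookup"],
    "notes": "Join ticket data to accounts on account_id before the CRM lookup.",
}

blob = profile_blob(churn_profile)
desc = descriptor(blob, PROFILE_MEDIA_TYPE)
```

Because the digest is derived from the content, two teams that produce the same profile get the same identifier, and any edit produces a new one.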

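Next month, another team faces a similar problem. Their agent pulls your profile as a starting point, adapts it, and pushes a refined version. A customer success team creates a churn profile that combines a data warehouse connector, a visualization tool, and a notification server. The sales team pulls that profile, adds a CRM connector, and uses it to plan renewal strategies. They republish the enhanced version to the catalog. Teams stop rebuilding the same solution and instead reuse and remix it. Knowledge is captured, shared, and refined.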
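Remixing can be modeled as deriving a new profile from a published one while recording where it came from. In this sketch, `remix` deep-copies the parent so the published original stays untouched, adds tools, and stores the parent's content digest in a hypothetical `derivedFrom` field. In a real registry, the same link could instead be expressed as an annotation or with the `subject` field that OCI image spec v1.1 added to manifests.

```python
import copy
import hashlib
import json


def digest_of(profile: dict) -> str:
    """Content digest over canonical JSON, in OCI's "sha256:<hex>" form."""
    blob = json.dumps(profile, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def remix(parent: dict, name: str, extra_tools: list[str]) -> dict:
    """Derive a new profile from a published one without mutating the parent."""
    child = copy.deepcopy(parent)
    child["name"] = name
    for tool in extra_tools:
        if tool not in child["tools"]:
            child["tools"].append(tool)
    # Hypothetical provenance field: pins exactly which parent version was remixed.
    child["derivedFrom"] = digest_of(parent)
    return child


# Customer success publishes a churn profile; sales remixes it with a CRM connector.
cs_profile = {
    "name": "churn",
    "tools": ["warehouse.query", "viz.chart", "notify.slack"],
}
sales_profile = remix(cs_profile, "churn-renewals", ["crm.accounts"])
```

Recording the parent's digest rather than its name means the lineage still points at the exact version the sales team started from, even after customer success publishes newer versions.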

Why OCI makes this possible

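Treating catalogs as immutable OCI artifacts lets agents pin to specific versions and profiles. Your production agents use catalog v2.3, QA uses v2.4, and they don't drift. Without pinning, Agent A fails mysteriously because a database connector it depended on was silently updated with a breaking change. Audit trails become simple: you can prove which tools were available when incident X occurred. An OCI-based catalog is the only approach that makes catalogs and agents first-class infrastructure, fully addressable by GitOps tooling.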
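The difference between a movable tag and an immutable digest is what makes pinning work. The toy in-memory registry below is not a real registry client, and its digests and contents are shortened placeholders. It shows production pinning the digest behind `v2.3` while the tag itself is later re-pointed, and how a recorded digest answers the audit question.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogRegistry:
    """Toy registry: tags are mutable pointers, digests address immutable content."""
    blobs: dict[str, str] = field(default_factory=dict)  # digest -> content
    tags: dict[str, str] = field(default_factory=dict)   # tag -> digest

    def push(self, tag: str, digest: str, content: str) -> None:
        self.blobs.setdefault(digest, content)  # content under a digest never changes
        self.tags[tag] = digest                 # but a tag can be re-pointed at any time

    def resolve(self, ref: str) -> str:
        # "@sha256:..." pins by content; anything else is a movable tag.
        return ref[1:] if ref.startswith("@") else self.tags[ref]


reg = CatalogRegistry()
reg.push("v2.3", "sha256:aaa", "postgres-mcp 1.8")
reg.push("v2.4", "sha256:bbb", "postgres-mcp 2.0")

prod_pin = "@" + reg.resolve("v2.3")  # production pins the digest behind v2.3
qa_ref = "v2.4"                        # QA follows the v2.4 tag

# Someone silently re-points v2.3 at new content: the tag drifts, the pin does not.
reg.push("v2.3", "sha256:ccc", "postgres-mcp 2.1 (breaking change)")

# Audit record: the exact catalog content in use when incident X occurred.
audit_log = {"incident-X": reg.resolve(prod_pin)}
```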

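OCI combined with containers brings two benefits that matter for MCP. First, containers provide a security boundary that is hermetic yet customizable and context-rich. MCP servers run in sandboxed containers with explicit network policies, filesystem isolation, and resource limits. Secrets are injected through standard mechanisms, with no credentials in prompts. This matters when MCP servers can execute arbitrary code or access the filesystem.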
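As one way to express such a sandbox, the sketch below assembles a `docker run` command line for an MCP server using standard Docker CLI flags for network isolation, a read-only root filesystem, resource limits, and dropped capabilities, with the credential mounted as a read-only file. The image name and secret path are hypothetical, and an MCP gateway may apply these controls through its own configuration rather than through this exact command.

```python
import shlex


def sandbox_args(image: str, secret_file: str, network: str = "none",
                 memory: str = "512m", cpus: str = "1") -> list[str]:
    """Build a `docker run` argv for a locked-down, stdio-based MCP server."""
    return [
        "docker", "run", "--rm", "-i",          # -i keeps stdin open for stdio transport
        "--network", network,                  # no network unless policy grants one
        "--read-only",                          # immutable root filesystem
        "--tmpfs", "/tmp",                      # scratch space that disappears on exit
        "--memory", memory, "--cpus", cpus,     # resource limits
        "--pids-limit", "128",                  # cap process count
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation
        # Credential delivered as a read-only file, never through the prompt.
        "-v", f"{secret_file}:/run/secrets/api_key:ro",
        image,
    ]


cmd = sandbox_args("registry.internal/mcp/postgres:2.0.1", "/secure/postgres_key")
print(shlex.join(cmd))
```

A connector that must reach a database would be given a specific network name instead of `none`, which keeps each egress decision explicit and reviewable.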

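Second, containers and their associated OCI versioning fit the governance tools already present in common container stacks and workflows, so existing governance tooling can be reused as-is. Because catalogs are OCI artifacts, image scanning works the same way. For signing and provenance, you use Cosign on catalogs just as you do on images. Harbor, Artifactory, and other registries already offer sophisticated access controls. Policy enforcement through OPA applies to catalog usage just as it does to container deployments. FedRAMP-authorized container registries can handle MCP catalogs too. Security teams don't need to learn new tools.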
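Policies like these are usually written in Rego and evaluated by OPA. To keep to one language here, the sketch below is a Python stand-in for a set of OPA-style deny rules applied to a catalog artifact: approved registries only, a verified signature, no critical vulnerabilities, and a digest-pinned reference. The field names and the registry allowlist are assumptions; the `signed` and `critical_vulns` values would come from Cosign verification and an image scanner run elsewhere.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogArtifact:
    registry: str
    ref: str             # "sha256:..." when pinned by digest, otherwise a tag
    signed: bool         # e.g. outcome of a Cosign signature check run upstream
    critical_vulns: int  # e.g. count reported by an image scanner


APPROVED_REGISTRIES = {"registry.internal", "harbor.example.com"}  # hypothetical


def deny_reasons(a: CatalogArtifact) -> list[str]:
    """Mirror of OPA-style deny rules: an empty list means the catalog may be used."""
    reasons = []
    if a.registry not in APPROVED_REGISTRIES:
        reasons.append(f"registry {a.registry!r} is not approved")
    if not a.signed:
        reasons.append("missing or invalid signature")
    if a.critical_vulns > 0:
        reasons.append(f"{a.critical_vulns} critical vulnerabilities")
    if not a.ref.startswith("sha256:"):
        reasons.append("catalog must be referenced by digest, not by tag")
    return reasons


ok = CatalogArtifact("registry.internal", "sha256:" + "0" * 64, True, 0)
bad = CatalogArtifact("docker.io", "latest", False, 2)
```

Returning every reason, rather than stopping at the first failure, matches how OPA deny sets are typically reported back to developers.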

From phone book and iTunes to intelligent platform and Spotify

Organizations can evolve toward dynamic discovery within their trust boundaries. An MCP gateway lets agents query the catalog at runtime, select the right tools, and instantiate only what they need. With Docker's Dynamic MCP in the MCP Gateway, agents can call built-in tools such as mcp-find and mcp-add to search a curated catalog, pull and start new MCP servers on demand, and retire them when they are no longer needed, instead of relying on hard-coded tool lists and configurations. Dynamic MCP keeps unused tools out of the model's context, reduces token bloat, and lets agents assemble just-in-time workflows from a much larger pool of MCP servers.
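The runtime-discovery loop can be illustrated with a toy gateway whose `find`, `add`, and `remove` methods loosely mirror the roles of mcp-find and mcp-add. This is a hypothetical model, not Docker's implementation or API; it only shows why the model's context contains tools from active servers rather than from the whole catalog. The server names and descriptions are illustrative.

```python
class DynamicGateway:
    """Toy gateway: a large curated catalog, but the model sees only active servers."""

    def __init__(self, catalog: dict[str, dict]):
        self.catalog = catalog  # server name -> {"description": str, "tools": [str]}
        self.active: set[str] = set()

    def find(self, query: str) -> list[str]:
        """Case-insensitive substring search over server descriptions."""
        q = query.lower()
        return sorted(n for n, s in self.catalog.items() if q in s["description"].lower())

    def add(self, name: str) -> None:
        """Activate a server; a real gateway would pull and start its container here."""
        if name not in self.catalog:
            raise KeyError(f"{name} is not in the curated catalog")
        self.active.add(name)

    def remove(self, name: str) -> None:
        """Retire a server; a real gateway would stop its container here."""
        self.active.discard(name)

    def tools_in_context(self) -> list[str]:
        """Only tools from active servers are exposed to the model."""
        return sorted(t for n in self.active for t in self.catalog[n]["tools"])


gw = DynamicGateway({
    "postgres": {"description": "Query Postgres databases", "tools": ["pg.query", "pg.schema"]},
    "jira": {"description": "Search and update Jira issues", "tools": ["jira.search", "jira.update"]},
    "grafana": {"description": "Read dashboards and query metrics", "tools": ["grafana.query"]},
})
matches = gw.find("query")       # ['grafana', 'postgres']
gw.add("postgres")
context = gw.tools_in_context()  # ['pg.query', 'pg.schema']
```

Only two of the five catalog tools reach the model after `add("postgres")`, which is the token-saving effect the paragraph describes; `add` also refuses anything outside the curated catalog, keeping discovery inside the trust boundary.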

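The longer-term vision goes further. The gateway captures semantic intelligence about how users interact with MCPs, learns which tools combine effectively, and suggests relevant servers based on how similar problems were solved before. Teams both learn from and contribute to this knowledge feedback loop: private catalog users discover new MCPs, combine MCPs in useful ways, and develop new ways of working, inspired by their own ideas and by suggestions from the MCP gateway. The process also provides real-time reinforcement learning, feeding wisdom and context back into the system and benefiting everyone who uses the gateway. This is organizational memory as infrastructure: it emerges from real agent work and endlessly blends human and machine intelligence.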
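How the gateway learns which tools combine well is left open here. As one simple, assumed baseline (plain co-occurrence counting, not reinforcement learning), the sketch below counts which servers appear together in past sessions and suggests the most frequent companions of the servers already in use. The session data and server names are illustrative.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(sessions: list[set[str]]) -> Counter:
    """Count how often each unordered pair of servers was used in the same session."""
    pairs = Counter()
    for servers in sessions:
        for a, b in combinations(sorted(servers), 2):
            pairs[(a, b)] += 1
    return pairs


def suggest(active: set[str], pairs: Counter, k: int = 2) -> list[str]:
    """Rank inactive servers by how often they co-occurred with active ones."""
    scores = Counter()
    for (a, b), n in pairs.items():
        if a in active and b not in active:
            scores[b] += n
        elif b in active and a not in active:
            scores[a] += n
    # Highest score first; ties broken alphabetically for deterministic output.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:k]]


sessions = [
    {"warehouse", "viz", "notify"},
    {"warehouse", "viz", "crm"},
    {"warehouse", "crm"},
    {"jira", "github"},
]
pairs = cooccurrence(sessions)
print(suggest({"warehouse"}, pairs))  # ['crm', 'viz']
```

Each new session updates the counts, so suggestions shift as teams work; a production system would likely weight recency and success signals, which this baseline ignores.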

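A container-native approach built on private catalogs, runtime discovery with Dynamic MCP, profiles as OCI artifacts, and sandboxed execution lays a composable, secure foundation for this future AI playground. How can we unlock MCP to unleash developer creativity from a trusted foundation? Treat it the way we treated containers, but grant AI the privileges it deserves as an agentic, intelligent system. Private MCP catalogs with semantic intelligence and contextual understanding, built on OCI-versioned infrastructure and running in secure agent sandboxes, are the first step toward that vision.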