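When I first started adding AI tools to my workflow, I was frustrated. I didn't get the 5x or 10x gains that other people were raving about on social media. If anything, the tools slowed me down.

I persisted anyway, partly because I consider it my professional duty as a software engineer to be as productive as possible, and partly because I had volunteered to be a guinea pig within my organization.

After wrestling with it for a while, I finally had a breakthrough: using AI tools well involves the same disciplines we have applied in software development for decades: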

- Break work into reasonable chunks

- Understand the problem before trying to solve it

- Identify what worked and what didn't

- Adjust variables for the next iteration

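In this article, I'll share the patterns of AI use that have made me more productive.

These aren't definitive best practices. AI tools and their capabilities are changing too quickly, and codebases differ too much. And that's before accounting for the probabilistic nature of AI.

But I'm confident that incorporating these patterns into your workflow can make you one of the developers who benefits from AI, rather than one who feels frustrated or left behind.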

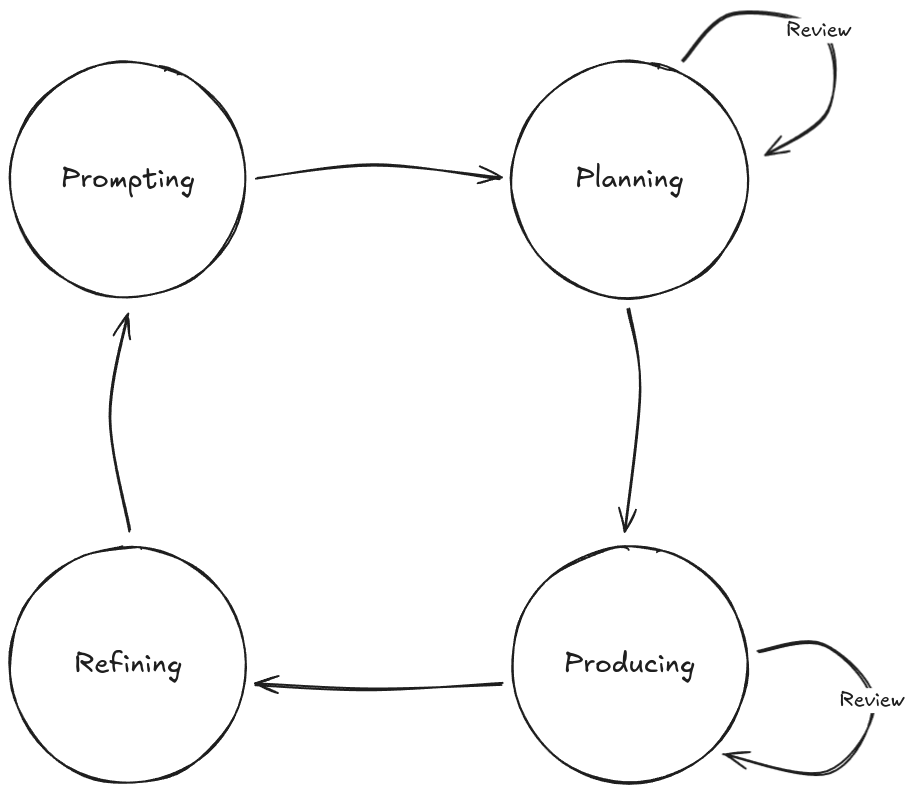

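A cycle for effective AI coding

Too many people treat AI like a magic wand that will write their code and do their thinking for them. It won't. These tools are just that: tools. As with every developer tool before them, their impact depends on how well you use them.

To get the most out of AI tools, you need to keep tweaking and refining your approach.

The specific steps will also vary depending on the capabilities of the tool you use.

This article assumes you're using an agentic AI tool like Claude Code or something similar: a well-rounded coding agent with adjustable levers and a dedicated planning mode, a pattern many tools are adopting. I've found this type of tool to be the most effective.

With a tool like this, an effective AI coding cycle should look something like the following.

The cycle consists of four phases: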

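- Prompting: give the AI its instructions

- Planning: work with the AI to build a plan for the change

- Producing: guide the AI as it changes the code

- Refining: use what you learned in this iteration to update your approach for the next cycle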

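You might think this is overly complicated. Surely you could just go back and forth between prompting and producing? Yes, you can, and for small changes that may work well enough.

But you'll soon find that it doesn't help you write sustainable code quickly.

Without each step of this loop, you risk the AI tool losing its place or its context, and the quality of its output plummeting. One of the major limitations of these tools is that they won't stop and warn you when this happens; they just keep doing their best. As the operator of the tool, and ultimately the owner of the code, it's your responsibility to set the AI up for success.

Let's look at what this workflow looks like in practice.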

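1. Prompting

AI tools aren't truly autonomous. The quality of the output reflects the input you provide. That's why prompting is arguably the most important phase of the loop: how well you do it determines the quality of the result and, by extension, how productive your use of AI will be.

This phase has two main considerations: context management and prompt crafting.

Context management

A common characteristic of current-generation AI tools is that output quality tends to degrade as the amount of context grows. This happens for several reasons: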

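- Poisoning: errors or hallucinations persist in the context

- Distraction: the model reuses mediocre context instead of seeking out better information

- Confusion: irrelevant details lower output quality

- Clash: outdated or conflicting information leads to errors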

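As long as AI tools have this limitation, you'll get better results by managing context strictly.

In practice, this means that rather than holding one long-running conversation with the agent, you should "clear" its context between tasks. Start from a fresh state each time and re-prompt with the information needed for the next task, rather than implicitly relying on accumulated context. In Claude Code, you do this with the /clear slash command.

If you don't clear the context, tools like Claude will "auto-compact" it, a lossy process that can carry errors forward and degrade quality over time.

If you need knowledge to persist between sessions, you can have the AI dump it into Markdown files. You can then either reference those Markdown files in the tool's agent file (CLAUDE.md for Claude Code) or mention the relevant files when working on a specific task so the agent loads them.

The structure varies, but it might look something like this: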

```
.
├── CLAUDE.md
└── docs
    └── agents
        └── backend
            ├── api.md
            ├── architecture.md
            └── testing.md
```
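
If the knowledge files follow a layout like the one above, the list of links for the agent file could be generated rather than maintained by hand. The following is a minimal sketch, assuming the `docs/agents` directory from the tree; the function name `build_doc_index` is made up for illustration and is not part of any tool.

```python
from pathlib import Path


def build_doc_index(root: Path, docs_dir: str = "docs/agents") -> str:
    """Return a Markdown bullet list linking every .md file under docs_dir.

    Assumption: knowledge files live under `docs/agents`, as in the tree above.
    """
    base = root / docs_dir
    if not base.is_dir():
        return ""
    lines = []
    for path in sorted(base.rglob("*.md")):
        # Links are written relative to the project root, where the agent file lives.
        rel = path.relative_to(root).as_posix()
        lines.append(f"- [{path.stem}](./{rel})")
    return "\n".join(lines)
```

Calling `build_doc_index(Path("."))` from the project root would return a list you can paste into the agent file, so the agent can find each document without keeping all of them in context.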

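Prompt crafting

Once you've made sure you're working with a clean context window, the next most important thing is your input. Here are some approaches you can take, depending on the work at hand.

Decomposition

In general, it's best to break work into discrete, actionable chunks. Avoid vague, high-level instructions like "implement an authentication system", which leave too much room for variability. Instead, think about how you would do the work by hand, and guide the AI along the same path.

Here's an example from a document management system task I gave Claude. You can see the full interaction summary in this GitHub repo.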

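- Prompt: "Look at DocumentProcessor and tell me which document types reference customers, projects, or contracts."

- Output: The AI identified all the references

- Prompt: "Update the mapping function at {location} to use those relationships and create tests."

- Output: Implemented mapping + tests

- Prompt: "Update the documents to make sure the relationships are correct for each type. Check the backend transformers to see what's there."

- Output: Filled in the missing relationships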

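Notice how the work proceeds in stages. A single mega-prompt would likely have failed at some point because of the multiple touchpoints and compounding complexity. Instead, small prompts with iteratively built context led to a high success rate.

Once the work is done, clear the context again so you don't confuse the AI, then move on to the next task.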
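
One way to picture the decomposition is as an ordered list of small steps, each with a prompt and the result you expect before moving on. The sketch below models the three prompts from the example; `PromptStep`, `render`, and the `expect` notes are illustrative names of my own, and the value passed for `{location}` is a placeholder, not the real path.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptStep:
    """One small, reviewable unit of work for the agent."""
    prompt: str   # what to ask; may contain {placeholders}
    expect: str   # what should come back before moving on


# The three steps from the example, in order.
STEPS = [
    PromptStep(
        prompt="Look at DocumentProcessor and tell me which document types "
               "reference customers, projects, or contracts.",
        expect="a list of references",
    ),
    PromptStep(
        prompt="Update the mapping function at {location} to use those "
               "relationships and create tests.",
        expect="mapping implemented, tests added",
    ),
    PromptStep(
        prompt="Update the documents to make sure the relationships are "
               "correct for each type. Check the backend transformers.",
        expect="missing relationships filled in",
    ),
]


def render(step: PromptStep, **values: str) -> str:
    """Fill in placeholders; raises KeyError if a needed value is missing."""
    return step.prompt.format(**values)


# Hypothetical location, used only to show the placeholder being filled.
print(render(STEPS[1], location="path/to/mapper"))
```

Writing the steps down like this makes it obvious when a single step is trying to do too much.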

Chaining

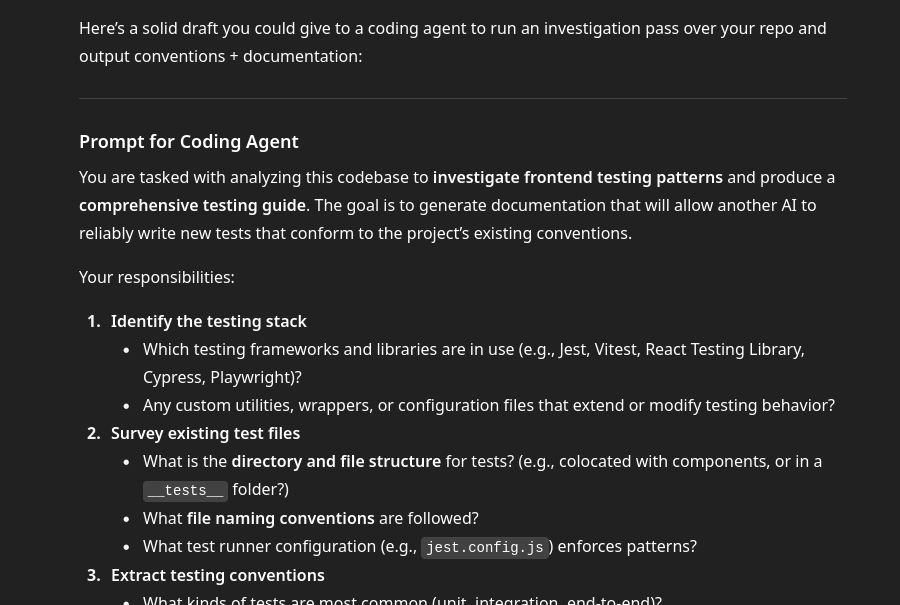

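Sometimes you do need a more detailed prompt, such as when you give the AI a large research assignment. In that case, chaining prompts together can significantly improve your chances of success.

The most common approach is to give your initial prompt to another LLM, such as ChatGPT or Claude chat, and have it draft a prompt for a specific purpose. Once you're happy with the parameters of the detailed prompt, feed it to your coding agent.

Here's an example:

Prompt (ChatGPT): "Write a prompt for a coding agent to investigate the frontend testing patterns in this codebase and produce comprehensive documentation so that an AI can write new tests that follow the codebase's conventions."

This prompt produces a fairly detailed second-stage prompt that you can review, refine, and hand to the agent.

You can see the full text here.

This method works best when you make sure the output matches the reality of your code. For example, this prompt mentions `jest.config.js`. If you don't use Jest, change it to whatever you do use.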
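
The chaining flow can be sketched as three stages: draft, review, run. This is a minimal illustration, not a real integration: `chain`, `TextModel`, and the stand-in functions are hypothetical, no actual LLM API is called, and the Vitest file name is only an example of adapting the draft to a project that doesn't use Jest.

```python
from typing import Callable

# Hypothetical signature: takes a prompt string, returns the model's text reply.
TextModel = Callable[[str], str]


def chain(goal: str, prompt_writer: TextModel,
          review: Callable[[str], str], agent: TextModel) -> str:
    """Stage 1 drafts a detailed prompt, a human reviews it, stage 2 runs it."""
    meta_prompt = (
        "Write a detailed prompt for a coding agent that will accomplish "
        f"the following goal:\n{goal}"
    )
    draft = prompt_writer(meta_prompt)   # e.g. a chat LLM
    final_prompt = review(draft)         # align the draft with the real codebase
    return agent(final_prompt)           # e.g. the coding agent


# Stand-ins so the sketch runs without any network access.
def fake_writer(meta_prompt: str) -> str:
    return "Read jest.config.js and document the frontend testing patterns."


def fix_test_runner(draft: str) -> str:
    # Hypothetical project that uses Vitest rather than Jest.
    return draft.replace("jest.config.js", "vitest.config.ts")


def fake_agent(prompt: str) -> str:
    return f"agent received: {prompt}"


result = chain("Document the frontend testing patterns",
               fake_writer, fix_test_runner, fake_agent)
print(result)
```

The review step is deliberately a separate argument: it is the point where you correct the draft before the coding agent ever sees it.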

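Reuse

Sometimes you'll find a pattern that fits your codebase or way of working really well. Often this happens after Step 4: Refining, but it can happen at any time.

When you find something that works, save it for reuse. Claude Code offers several ways to do this, the most idiomatic being custom slash commands. The idea is that if you have a prompt that works well, you can encode it as a custom command and reuse it.

For example, a great time-saver I found was using an agent to examine a Laravel API and create a Postman collection. This is something I used to do by hand when creating a new module, and it can take quite a while.

Using the chaining approach, I produced a prompt that does the following: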

- Generates a new Postman collection for a specific backend module

- Uses the controller/API test suites to inform the request body values

- Uses the controllers and route definitions to determine the available endpoints

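Running the prompt through the agent produced a working Postman collection almost immediately. You can see the prompt here.

When you find valuable patterns and prompts like this, consider sharing them with your team too. Raising productivity across the whole team is where the real compounding benefits come from.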
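
As a rough sketch of what saving a prompt for reuse could look like: to my understanding, Claude Code reads project-level custom slash commands from Markdown files under `.claude/commands/` and substitutes `$ARGUMENTS` with whatever follows the command name. Treat both details as assumptions to verify against the current documentation. The prompt text below paraphrases the three bullets and is not my actual prompt; `save_command` is a made-up helper.

```python
from pathlib import Path

# Paraphrase of the bullet points; $ARGUMENTS is assumed to be replaced
# by the text typed after the command name.
POSTMAN_PROMPT = """
Generate a new Postman collection for the backend module: $ARGUMENTS

- Use the controller/API test suites to inform request body values.
- Use the controllers and route definitions to determine the available endpoints.
"""


def save_command(project_root: Path, name: str, prompt: str) -> Path:
    """Write the prompt to .claude/commands/<name>.md and return the path."""
    commands_dir = project_root / ".claude" / "commands"
    commands_dir.mkdir(parents=True, exist_ok=True)
    path = commands_dir / f"{name}.md"
    path.write_text(prompt.strip() + "\n", encoding="utf-8")
    return path
```

Because the command is just a file in the repository, committing it is one straightforward way to share it with the rest of the team.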

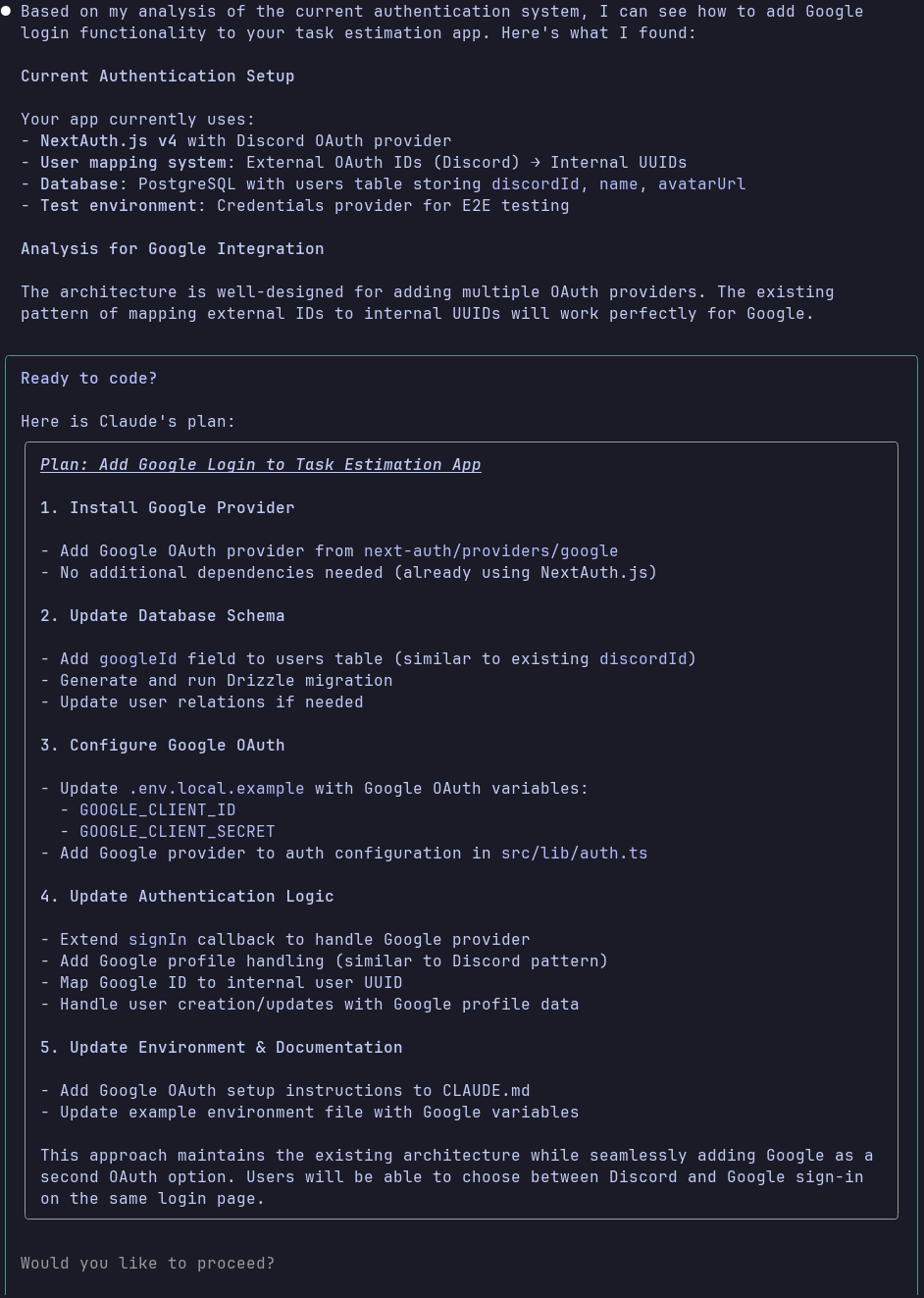

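2. Planning

Tools like Claude Code have a planning mode that runs prompts to build context without making any changes. You won't always need it, but it's invaluable when you're dealing with a fairly complex change.

Typically, a tool in planning mode will investigate to find the information it needs to decide what to do, then present a summary of its intended changes. The key point is that you get to see what the AI is planning before it acts.

In the screenshot, I used planning mode to ask Claude what would be needed to add "Login with Google" to an existing app that already supports "Login with Discord".

I can see the full plan of everything the AI intends to change, so I can judge whether it fits my use case.

Important: read the plan carefully! Make sure you understand what the AI is saying and that it makes sense. If you don't understand something, or it seems inaccurate, ask for clarification or dig deeper.

Don't move past the planning phase until the plan is what you expect.

If the AI proposes rewriting large amounts of code, treat that as a red flag. Most development should be evolutionary and iterative. If you break your work into small pieces, the AI should propose and make small changes, which in turn are easier to review. If the plan contains many more changes than you expected, review your input to see whether the AI is missing important context.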
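
The "red flag" check can be made a little more concrete. The sketch below assumes you have copied the list of files a plan intends to touch into a Python list. The thresholds are arbitrary defaults chosen for illustration, and `plan_warnings` is a hypothetical helper, not a feature of any tool.

```python
from pathlib import PurePosixPath


def plan_warnings(planned_files: list[str],
                  max_files: int = 5, max_areas: int = 2) -> list[str]:
    """Return human-readable warnings for a plan that looks too broad."""
    warnings = []
    if len(planned_files) > max_files:
        warnings.append(
            f"plan touches {len(planned_files)} files (limit {max_files})"
        )
    # Top-level directory of each file, as a rough proxy for "area of the codebase".
    areas = {PurePosixPath(p).parts[0] for p in planned_files if p}
    if len(areas) > max_areas:
        warnings.append(
            f"plan spans {len(areas)} top-level areas: {sorted(areas)}"
        )
    return warnings


# Hypothetical file names for a small, focused change: no warnings expected.
print(plan_warnings(["app/Auth/GoogleController.php", "routes/web.php"]))
```

A warning here doesn't mean the plan is wrong, only that it is worth rereading your prompt before letting the agent proceed.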

Once you've iterated on the plan and are happy with it, you can give the AI the go-ahead to execute.

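3. Producing

In the third phase, the AI starts making changes to the codebase. The AI produces most of the output here, but you aren't off the hook. For better or worse, you still own the code it generates at your direction. So it's better to think of the producing phase as a collaboration between you and the AI: the AI generates code, and you guide it in real time.

To get the most out of AI tools and minimize the time you spend on rework, you need to guide the AI. Remember, your goal is maximum productivity: real productivity, not just lines of code. That means actively engaging with the tool and working with it as it builds, rather than giving it free rein.

If you took care with your prompting and planning, there shouldn't be many surprises during the actual coding phase. But AI can still make mistakes, and it will overlook things, especially in large systems. (This is one of the main reasons fully "vibe coded" projects break down quickly as they scale. Even when a system has been built entirely by AI, the AI doesn't remember or know everything in the codebase.)

I have yet to go a single day without catching the AI in a mistake. It might be something small, like using a string literal instead of an existing constant, or inconsistent naming conventions. These things might not even stop the code from working.

But if you let changes like these slide, it's the start of a slippery slope that is hard to recover from. Be diligent, and treat AI-generated code as you would code from any other team member. Better yet, understand that this code has your name on it, and don't accept anything you aren't willing to "own" in perpetuity.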
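
To make the string-literal slip concrete, here is a small, made-up illustration. The constant and function names are hypothetical; the point is only that both versions pass today, while the first quietly duplicates a value the codebase already defines.

```python
# Existing constant, already defined somewhere in the (hypothetical) codebase.
STATUS_PUBLISHED = "published"


def is_published_as_generated(document: dict) -> bool:
    # What an agent might produce: works today, but repeats the value as a
    # literal, so a later change to STATUS_PUBLISHED would silently miss it.
    return document["status"] == "published"


def is_published(document: dict) -> bool:
    # After review: reuse the existing constant.
    return document["status"] == STATUS_PUBLISHED


doc = {"status": "published"}
print(is_published_as_generated(doc), is_published(doc))
```

Nothing fails if the first version is merged, which is exactly why this kind of drift is easy to miss without review.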

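If you notice a mistake, point it out and suggest how to fix it. If the tool strays from the plan or forgets something, try to catch it early and course-correct. Because your prompts are small and focused, the features the AI builds should be small too, which makes them easier to review.

4. Refining

Fortunately, rather than constantly fighting the machine and going back and forth over small issues, the final phase of the loop, refining, offers a more sustainable way to calibrate your AI tool over time.

You won't change your setup on every loop, but every loop gives you insight into what's working well and what needs to change.

The most common way to adjust an AI tool's behavior is through its tool-specific steering documents. For example, Claude has CLAUDE.md and Cursor has Rules.

These steering documents are typically Markdown files that are automatically loaded into the agent's context. In them, you can define project-specific rules, style guides, architecture, and more. For example, if the AI consistently struggles with setting up mocks in your tests, you can add a section to the document with what it needs to know, examples for reference, or links to known-good files in the codebase that it can consult.

Because this file takes up space in the LLM's context, you should keep it from growing too large. Treat it like an index: include the information that is always needed directly in the file, and link to more specialized information that the AI can pull in as needed.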
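
If the steering file works as an index, two things are worth checking from time to time: that it stays short and that its links still resolve. The following is a rough sketch of such a check. The 200-line budget is an arbitrary assumption, `check_steering_file` is a hypothetical helper, and the regular expression only handles simple inline Markdown links.

```python
import re
from pathlib import Path

# Captures the target of inline links like [text](./path/to/file.md).
LINK_RE = re.compile(r"\[[^\]]+\]\(([^)#\s]+)")


def check_steering_file(path: Path, max_lines: int = 200) -> list[str]:
    """Return a list of problems found in a steering document."""
    text = path.read_text(encoding="utf-8")
    problems = []
    line_count = len(text.splitlines())
    if line_count > max_lines:
        problems.append(f"file has {line_count} lines, budget is {max_lines}")
    for target in LINK_RE.findall(text):
        if "://" in target:
            continue  # skip external URLs
        # Relative links are resolved against the steering file's directory.
        if not (path.parent / target).exists():
            problems.append(f"broken link: {target}")
    return problems
```

Running `check_steering_file(Path("CLAUDE.md"))` in a project root returns an empty list when the file is within budget and every linked document exists.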

Here's an excerpt from a CLAUDE.md file I use regularly:

```md
...

## Frontend

...

### Development Guidelines

For detailed frontend development patterns, architecture, and conventions, see:
**[Frontend Module Specification](./docs/agents/frontend/frontend-architecture.md)**

This specification covers:

- Complete module structure and file organization
- Component patterns and best practices
- Type system conventions
- Testing approaches
- Validation patterns
- State management
- Performance considerations

...
```

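Because the AI understands the hierarchy of the Markdown file, it sees the section on frontend development guidelines and the link to the module specification. The tool then decides internally whether it needs this information. For example, it will skip the linked file when working on a backend feature, but pull it in when working on a frontend module.

This lets you conditionally extend and refine the agent's behavior, tweaking it each time it runs into trouble in a particular area, until it can work effectively in your codebase.

Exceptions to the cycle

There are cases where it makes sense to deviate from this flow.

For quick fixes or trivial changes, Prompting → Producing alone may be enough. For anything beyond that, skipping planning and refining usually backfires, so I don't recommend it.

When you're first starting out, or when you move to a new codebase, you'll need to refine quite frequently. As your prompts, workflows, and setup mature, the need for refinement diminishes. Once things are dialed in, you'll rarely need to adjust.

Finally, while AI can be an accelerator for feature work and bug fixes, it can sometimes slow you down. This varies by team and codebase, but as a rule of thumb, if you're doing performance tuning, refactoring critical logic, or working in a heavily regulated domain, AI is more likely to be a hindrance than a help.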

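Other considerations

Beyond optimizing your workflow with AI tools, a few other factors significantly affect output quality and are worth keeping in mind.

Well-known libraries and frameworks

One thing you'll notice quickly is that AI tools perform much better with well-known libraries. These are usually well documented and more likely to be in the model's training data. By contrast, newer libraries, poorly documented libraries, and internal libraries tend to cause problems. Internal libraries are often the hardest, because many have little or no documentation, which makes them difficult not only for AI tools but for human developers too. This is one of the biggest reasons AI productivity tends to lag on existing codebases.

In these situations, the refining phase often involves creating guiding documentation so the AI can work with the library effectively. Consider investing time up front to have the AI write comprehensive tests and documentation for it. Without them, the AI has to re-analyze the library from scratch every time it works with the code. By creating the documentation and tests once, you pay that cost up front and make future use much smoother.

Project discoverability

How your project is organized has a big impact on how effectively AI can work with it. A clean, consistent directory structure makes it easier for both humans and AI to navigate, understand, and extend the code. Conversely, a messy or inconsistent structure causes confusion and lowers the quality of the results.

For example, a clean, consistent structure might look like this: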

```
.
├── src
│   ├── components
│   ├── services
│   └── utils
├── tests
│   ├── unit
│   └── integration
└── README.md
```

Compare that with this confusing structure:

```
.
├── components
│   └── Button.js
├── src
│   └── utils
├── shared
│   └── Modal.jsx
├── pages
│   ├── HomePage.js
│   └── components
│       └── Card.jsx
├── old
│   └── helpers
│       └── api.js
└── misc
    └── Toast.jsx
```

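In the clear structure, everything lives in a predictable place. In the confusing one, components are scattered across multiple folders (`components`, `pages/components`, `shared`, `misc`), utilities are duplicated, and old code lingers in `old/`.

Like any other developer, the AI will struggle to build a clear mental model of such a project, which increases the risk of duplication and errors.

If your codebase has a confusing structure and restructuring isn't feasible, map out the common patterns and add them to your steering documents to reduce the amount of discovery and exploration the AI tool has to do.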
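
A first pass at that mapping can be automated. The sketch below walks a project and groups component-like files by directory, then renders the result as a Markdown section for a steering document. Identifying components by the `.jsx`/`.tsx` suffix is a simplifying assumption (it would miss `Button.js` in the tree above), and the function names and section title are made up for illustration.

```python
from collections import defaultdict
from pathlib import Path


def map_components(root: Path,
                   suffixes: tuple[str, ...] = (".jsx", ".tsx")) -> dict[str, list[str]]:
    """Group files with the given suffixes by their directory, relative to root."""
    found: dict[str, list[str]] = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in suffixes:
            directory = path.parent.relative_to(root).as_posix()
            found[directory].append(path.name)
    return dict(found)


def to_markdown(mapping: dict[str, list[str]]) -> str:
    """Render the mapping as a section for a steering document."""
    lines = ["## Where components live"]
    for directory, files in mapping.items():
        lines.append(f"- `{directory}/`: {', '.join(files)}")
    return "\n".join(lines)
```

The generated section is only a starting point; it is worth adding a sentence per directory explaining which location new code should go in.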

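Wrapping up

Adding AI tools to your workflow won't make you a 10x developer overnight. As with any new tool, you may even be a little slower at first. But if you take the time to learn and adapt your workflow, the payoff can come surprisingly quickly.

The AI tools space is evolving rapidly, and the tools you use today may feel primitive a year from now. But the habits and workflows you build, the way you prompt, plan, produce, and refine, will carry forward in one form or another. Get the fundamentals right and you won't just keep up with the curve; you'll stay ahead of it.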