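We're thrilled to bring NVIDIA DGX™ Spark support to Docker Model Runner. The new NVIDIA DGX Spark delivers incredible performance, and Docker Model Runner makes it accessible. With Model Runner, you can easily run and iterate on larger models on your local machine, using the same intuitive Docker experience you already trust.

In this post, we'll show how DGX Spark and Docker Model Runner work together to make local model development faster and simpler. We'll cover the unboxing experience, how to set up Model Runner, and how to use it in real-world developer workflows.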

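What is NVIDIA DGX Spark

NVIDIA DGX Spark is the newest member of the DGX family: a compact, workstation-class AI system powered by the Grace Blackwell GB10 Superchip and built for local model development. Designed for researchers and developers, it makes prototyping, fine-tuning, and serving large models fast and straightforward, all without relying on the cloud.

Here at Docker, we were fortunate to get our hands on a preproduction version of DGX Spark. And yes, it's every bit as impressive in person as it looks in NVIDIA's launch materials.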

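Why Run Local AI Models and How Docker Model Runner and NVIDIA DGX Spark Make It Easy

Many of us at Docker and across the broader developer community are experimenting with local AI models. Running locally has clear advantages:

- Data privacy and control: no external API calls; everything stays on your machine

- Offline availability: work from anywhere, even when you're disconnected

- Ease of customization: experiment with prompts, adapters, or fine-tuned variants without relying on remote infrastructure

But there are also familiar tradeoffs:

- Local GPUs and memory can be limiting for large models

- Setting up CUDA, runtimes, and dependencies often eats time

- Managing security and isolation for AI workloads can be complex

This is where DGX Spark and Docker Model Runner (DMR) shine. DMR provides an easy and secure way to run AI models in a sandboxed environment, fully integrated with Docker Desktop or Docker Engine. Combined with the DGX Spark's NVIDIA AI software stack and its large 128GB of unified memory, you get the best of both worlds: plug-and-play GPU acceleration and Docker-level simplicity.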

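Unboxing NVIDIA DGX Spark

The device arrived well packaged. It is sleek and surprisingly small, resembling a mini workstation more than a server.

Setup was refreshingly simple: plug in power, network, and peripherals, then boot into NVIDIA DGX OS, which comes with the NVIDIA drivers, CUDA, and the AI software stack preinstalled.

Once the Spark is on the network, enabling SSH access makes it easy to integrate into your existing workflow.

This way, the DGX Spark becomes an AI co-processor for your everyday development environment, augmenting your primary machine rather than replacing it.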

Getting Started with Docker Model Runner on NVIDIA DGX Spark

Installing Docker Model Runner on the DGX Spark is simple and takes only a few minutes.

1. Verify that Docker CE is installed

DGX OS comes with Docker Engine (CE) preinstalled. Confirm that you have it:

docker version

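If it's missing or outdated, install it by following the regular Ubuntu installation instructions.

2. Install the Docker Model CLI plugin

The Model Runner CLI is distributed as a Debian package through Docker's apt repository. Once the repository is configured (see the instructions linked above), install the plugin with the following commands: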

sudo apt-get update

sudo apt-get install docker-model-plugin

Or use Docker's convenience install script:

curl -fsSL https://get.docker.com | sudo bash

You can confirm that it's installed with:

docker model version

3. Pull and run a model

Now that the plugin is installed, let's pull a model from the Docker Hub AI Catalog. For example, the Qwen 3 Coder model:

docker model pull ai/qwen3-coder

The Model Runner container automatically exposes an OpenAI-compatible endpoint at:

http://localhost:12434/engines/v1

You can verify that it's live with a quick test:

# Test via API

curl http://localhost:12434/engines/v1/chat/completions \
  -H 'Content-Type: application/json' \
  -d '{"model":"ai/qwen3-coder","messages":[{"role":"user","content":"Hello!"}]}'

# Or via CLI

docker model run ai/qwen3-coder
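If you'd rather test from a script than from curl, the same request can be sketched in Python with only the standard library. This is a minimal sketch, not an official client: the helper names (build_chat_request, extract_reply, chat) are made up for illustration, and the response parsing assumes the usual OpenAI-style `choices[0].message.content` layout.

```python
import json
import urllib.request

# Endpoint and model name are taken from this post; adjust them if your setup differs.
BASE_URL = "http://localhost:12434/engines/v1"
MODEL = "ai/qwen3-coder"


def build_chat_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL) -> urllib.request.Request:
    """Build a POST request equivalent to the curl command above (hypothetical helper)."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Return the assistant text, assuming an OpenAI-style chat completion response."""
    return response_body["choices"][0]["message"]["content"]


def chat(prompt: str) -> str:
    """Send the prompt to Model Runner and return the reply (needs a running endpoint)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        return extract_reply(json.load(resp))
```

With Model Runner up and the model pulled, calling `chat("Hello!")` on the DGX Spark should return the model's reply as a string.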

GPUs are allocated to the Model Runner container via nvidia-container-runtime, and Model Runner automatically takes advantage of any available GPUs. To check GPU usage:

nvidia-smi
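To capture the same information from a script, nvidia-smi can emit CSV through its `--query-gpu` and `--format` options. The sketch below is one possible wrapper under that assumption. The function names and the choice of fields are illustrative. Values are kept as strings because some fields may come back as a non-numeric placeholder such as `[N/A]` rather than a number.

```python
import subprocess

# Fields requested from nvidia-smi; this particular selection is an illustrative choice.
QUERY_FIELDS = ["name", "utilization.gpu", "memory.used"]


def parse_gpu_csv(output: str) -> list[dict]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for line in output.strip().splitlines():
        values = [value.strip() for value in line.split(",")]
        rows.append(dict(zip(QUERY_FIELDS, values)))
    return rows


def query_gpus() -> list[dict]:
    """Run nvidia-smi (must be on PATH, e.g. on the DGX Spark) and parse its CSV output."""
    cmd = [
        "nvidia-smi",
        f"--query-gpu={','.join(QUERY_FIELDS)}",
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)
```

Calling `query_gpus()` while a model is answering a prompt gives a quick, scriptable view of whether the GPU is actually being used.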

4. Architecture overview

Here's what's happening under the hood:

[ DGX Spark Hardware (GPU + Grace CPU) ]
                   │
       (NVIDIA Container Runtime)
                   │
         [ Docker Engine (CE) ]
                   │
   [ Docker Model Runner Container ]
                   │
      OpenAI-compatible API :12434

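The NVIDIA Container Runtime bridges the NVIDIA GB10 Grace Blackwell Superchip drivers and Docker Engine, so containers can access CUDA directly. Docker Model Runner then runs inside its own container, managing the model lifecycle and providing the standard OpenAI API endpoint. (For more details on the Model Runner architecture, see this blog post.)

From a developer's perspective, you interact with models like any other Dockerized service: docker model pull, list, inspect, and run all work out of the box.

Using Local Models in Your Daily Workflows

If you use a laptop or desktop as your primary machine, the DGX Spark can act as your remote model host. With a few SSH tunnels, you can both access the Model Runner API and monitor GPU utilization through the DGX dashboard, all from your local workstation.

1. Forward the DMR port (for model access)

To access the DGX Spark over SSH, first set up an SSH server: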

sudo apt install openssh-server

sudo systemctl enable --now ssh

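Then run the following command to access Model Runner from your local machine. Replace user with the username you configured when you first booted the DGX Spark, and replace dgx-spark.local with the IP address of the DGX Spark on your local network or with a hostname configured in /etc/hosts.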

ssh -N -L localhost:12435:localhost:12434 user@dgx-spark.local

This forwards the Model Runner API from the DGX Spark to port 12435 on your local machine.

Now, in your IDE, CLI tool, or any app that expects an OpenAI-compatible API, just point it to:

http://localhost:12435/engines/v1/models

Set the model name (e.g. ai/qwen3-coder) and you're ready to use local inference seamlessly.
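Before wiring up a tool, it can help to confirm that the tunnel works and the model is visible from your workstation. The following is a minimal sketch using the forwarded models URL above. The helper names are hypothetical, and it assumes the endpoint returns an OpenAI-style listing of the form `{"data": [{"id": ...}, ...]}`.

```python
import json
import urllib.request

# Forwarded endpoint from this post (local port 12435 tunnels to the DGX Spark).
MODELS_URL = "http://localhost:12435/engines/v1/models"


def model_ids(listing: dict) -> list[str]:
    """Return model IDs from an OpenAI-style listing; an empty list if there is no data."""
    return [entry["id"] for entry in listing.get("data", [])]


def is_model_available(model: str, url: str = MODELS_URL) -> bool:
    """Fetch the listing through the SSH tunnel and check for the given model name."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return model in model_ids(json.load(resp))
```

With the tunnel open, `is_model_available("ai/qwen3-coder")` should return True once the model has been pulled on the DGX Spark. A connection error usually means the SSH forward is not running.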

2. Forward the DGX dashboard port (for monitoring)

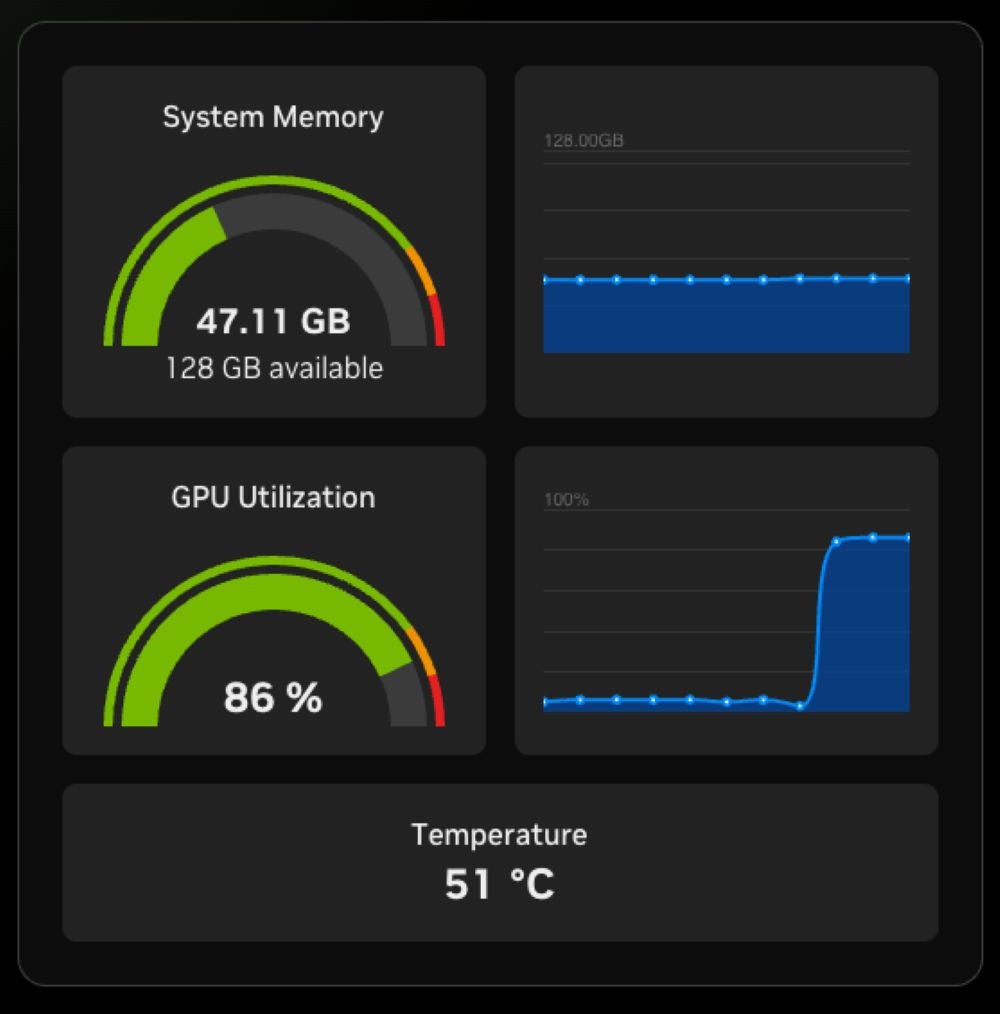

The DGX Spark exposes a lightweight browser dashboard that shows real-time GPU, memory, and thermal stats. It is usually served locally at:

http://localhost:11000

You can forward it through the same SSH session or a separate one:

ssh -N -L localhost:11000:localhost:11000 user@dgx-spark.local

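Then open http://localhost:11000 in a browser on your main workstation to monitor DGX Spark performance while your models run.

This combination makes the DGX Spark feel like a remote, GPU-powered extension of your development environment. Your IDE and tools still live on your laptop, while model execution and resource-heavy workloads happen securely on the Spark.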
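Because ssh accepts more than one -L flag, both forwards can share a single session. The sketch below assembles such a command from the ports used in this post. The build_tunnel_command helper and the FORWARDS list are hypothetical names for illustration, not part of any Docker or NVIDIA tooling.

```python
import shlex

# (local_port, remote_port) pairs from this post: Model Runner API and DGX dashboard.
FORWARDS = [(12435, 12434), (11000, 11000)]


def build_tunnel_command(user: str, host: str, forwards: list[tuple[int, int]]) -> list[str]:
    """Build one `ssh -N` invocation with a -L flag per forwarded port."""
    cmd = ["ssh", "-N"]
    for local_port, remote_port in forwards:
        cmd += ["-L", f"localhost:{local_port}:localhost:{remote_port}"]
    cmd.append(f"{user}@{host}")
    return cmd


# Render the argument list as a copy-pasteable shell command.
command_line = shlex.join(build_tunnel_command("user", "dgx-spark.local", FORWARDS))
```

The resulting list can be passed to `subprocess.run` to keep the tunnel open from a script, or `command_line` can be pasted into a terminal. As before, user and dgx-spark.local are placeholders for your own username and host.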

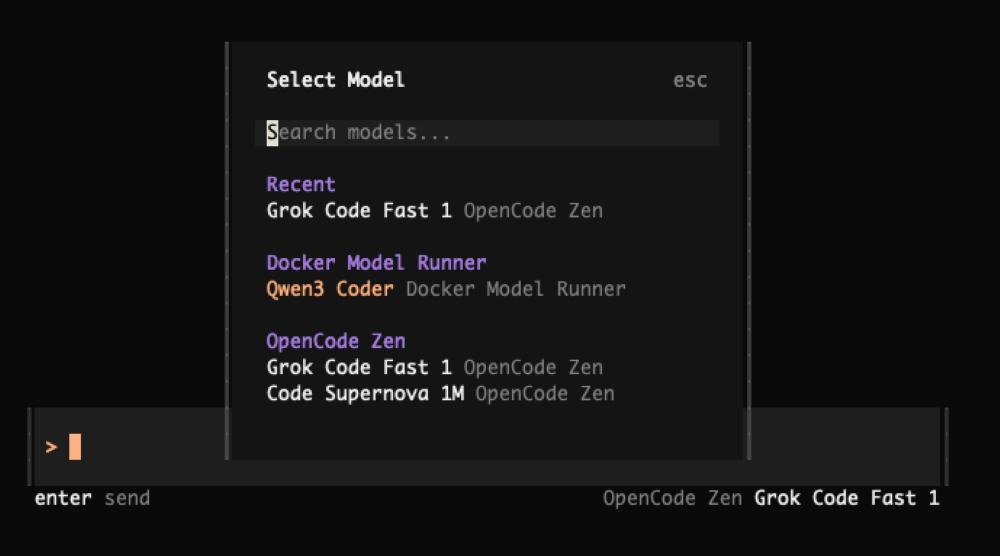

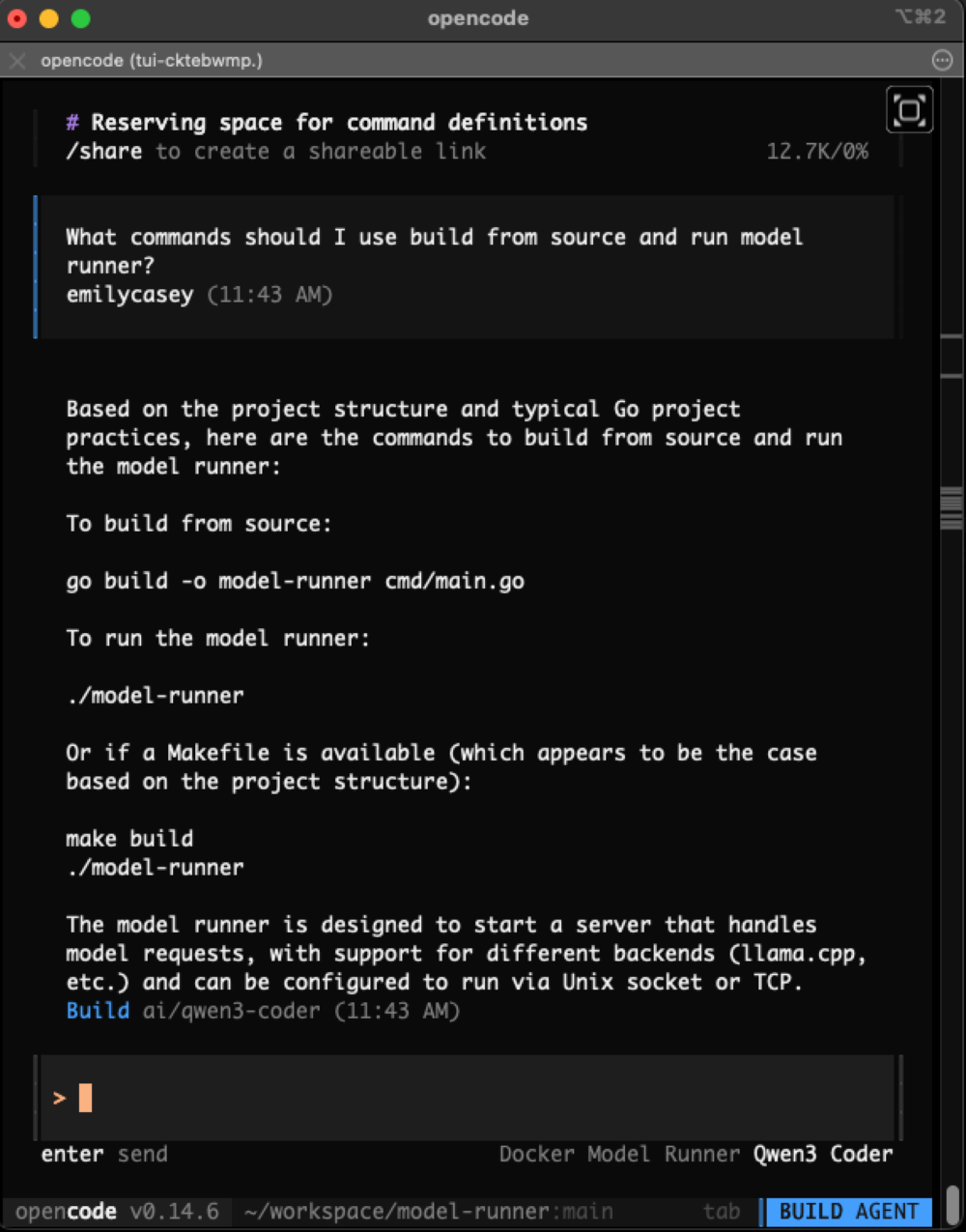

Example Application: Configuring OpenCode with Qwen3-Coder

Let's make this concrete.

Suppose you use OpenCode, an open-source, terminal-based AI coding agent.

Once Docker Model Runner is running on your DGX Spark, ai/qwen3-coder has been pulled, and the port is forwarded, you can configure OpenCode by adding the following to ~/.config/opencode/opencode.json:

{
  "$schema": "https://opencode.ai/config.json",
  "provider": {
    "dmr": {
      "npm": "@ai-sdk/openai-compatible",
      "name": "Docker Model Runner",
      "options": {
        "baseURL": "http://localhost:12435/engines/v1" // DMR's OpenAI-compatible base
      },
      "models": {
        "ai/qwen3-coder": { "name": "Qwen3 Coder" }
      }
    }
  },
  "model": "ai/qwen3-coder"
}
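If you already have an OpenCode config with other providers, you may want to merge the DMR entry in rather than replace the file. The sketch below shows one way to do that on an already-parsed config. The add_dmr_provider function is a hypothetical helper, not an OpenCode API. Note that Python's json module rejects `//` comments, so any comments would need to be removed before loading the file with `json.load`.

```python
import copy


def add_dmr_provider(config: dict, base_url: str, model_id: str, display_name: str) -> dict:
    """Return a copy of an OpenCode config dict with the DMR provider from this post added.

    Existing providers are preserved; an existing "dmr" entry is overwritten.
    """
    updated = copy.deepcopy(config)
    updated.setdefault("$schema", "https://opencode.ai/config.json")
    providers = updated.setdefault("provider", {})
    providers["dmr"] = {
        "npm": "@ai-sdk/openai-compatible",
        "name": "Docker Model Runner",
        "options": {"baseURL": base_url},
        "models": {model_id: {"name": display_name}},
    }
    # Make the DMR-hosted model the default, as in the config shown above.
    updated["model"] = model_id
    return updated
```

For the setup in this post, the call would be `add_dmr_provider(existing, "http://localhost:12435/engines/v1", "ai/qwen3-coder", "Qwen3 Coder")`, with the result written back using `json.dump`.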

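Now run opencode and select Qwen3 Coder with the /models command.

That's it! Completions and chat requests are routed through Docker Model Runner on your DGX Spark, so Qwen3-Coder now powers your agentic development experience locally.

You can verify that the model is running by opening http://localhost:11000 (the DGX dashboard) and watching GPU utilization in real time while you code.

This setup lets you:

- Keep your laptop light while leveraging the DGX Spark GPUs

- Experiment with custom or fine-tuned models through DMR

- Stay entirely within your local environment for privacy and cost control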

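Summary

Running Docker Model Runner on the NVIDIA DGX Spark makes it remarkably easy to turn powerful local hardware into a seamless extension of your everyday Docker workflow.

- You install one plugin and use familiar Docker commands (docker model pull, docker model run).

- You get full GPU acceleration through NVIDIA's container runtime.

- You can forward both the model API and the monitoring dashboard to your main workstation for easy development and visibility.

This setup bridges the gap between developer productivity and AI infrastructure, giving you the speed, privacy, and flexibility of local execution with the reliability and simplicity that Docker provides.

As local model workloads continue to grow, the combination of DGX Spark and Docker Model Runner is a practical, developer-friendly way to bring serious AI compute to your desk, with no dependence on a data center or the cloud.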

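Learn more:

- Read the official DGX Spark launch announcement in the NVIDIA newsroom

- Check out the Docker Model Runner general availability announcement

- Visit the Model Runner GitHub repository. Docker Model Runner is open source, and we welcome collaboration and contributions from the community. Star, fork, and contribute.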