We’re excited to share that Docker Model Runner is now generally available (GA)! In April 2025, Docker introduced the first Beta release of Docker Model Runner, making it easy to manage, run, and distribute local AI models (specifically LLMs). Although only a short time has passed since then, the product has evolved rapidly, and continuous enhancements have brought it to a reliable level of maturity and stability.

This blog post takes a look back at the most important and widely appreciated capabilities Docker Model Runner brings to developers, and looks ahead to share what they can expect in the near future.

What is Docker Model Runner?

Docker Model Runner (DMR) is built for developers first, making it easy to pull, run, and distribute large language models (LLMs) directly from Docker Hub (in an OCI-compliant format) or Hugging Face (for models available in the GGUF format, which the Hugging Face backend packages as OCI Artifacts on the fly).

Tightly integrated with Docker Desktop and Docker Engine, DMR lets you serve models through OpenAI-compatible APIs, package GGUF files as OCI artifacts, and interact with them through the command line, a graphical interface, or developer-friendly REST APIs.
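To make the OpenAI-compatible API a little more concrete, here is a minimal Python sketch that builds a chat completion request for a locally served model. The base URL (`http://localhost:12434/engines/v1`, which assumes host-side TCP access is enabled on port 12434) and the model name `ai/smollm2` are assumptions for illustration, so check the official documentation for the values that apply to your setup. The helper names `build_chat_request` and `send` are hypothetical.

```python
import json
import urllib.request

# Assumed values, not taken from this post: host-side TCP access enabled on
# port 12434 and a model named "ai/smollm2" already pulled locally.
BASE_URL = "http://localhost:12434/engines/v1"
MODEL = "ai/smollm2"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a model running, `send(build_chat_request("Say hello in one sentence."))` would return the model's reply. Because the request follows the OpenAI chat completions shape, existing OpenAI client libraries should also work once they are pointed at the local base URL.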

Whether you’re creating generative AI applications, experimenting with machine learning workflows, or embedding AI into your software development lifecycle, Docker Model Runner delivers a consistent, secure, and efficient way to work with AI models locally.

Check the official documentation to learn more about Docker Model Runner and its capabilities.

Why Docker Model Runner?

Docker Model Runner makes it easier for developers to experiment with and build AI applications, including agentic apps, using the same Docker commands and workflows they already use every day. No need to learn a new tool!

Unlike many new AI tools that introduce complexity or require additional approvals, Docker Model Runner fits cleanly into existing enterprise infrastructure. It runs within your current security and compliance boundaries, so teams don’t have to jump through hoops to adopt it.

Model Runner supports OCI-packaged models, allowing you to store and distribute models through any OCI-compatible registry, including Docker Hub. And for teams using Docker Hub, enterprise features like Registry Access Management (RAM) provide policy-based access controls to help enforce guardrails at scale.

11 Docker Model Runner Features Developers Love Most

Below are the features that stand out the most and have been highly valued by the community.

1. Powered by llama.cpp

Currently, DMR is built on top of llama.cpp, which we plan to continue supporting. At the same time, DMR is designed with flexibility in mind, and support for additional inference engines (such as MLX or vLLM) is under consideration for future releases.

2. GPU acceleration across macOS and Windows platforms

Harness the full power of your hardware with GPU support: Apple Silicon on macOS, NVIDIA GPUs on Windows, and even ARM/Qualcomm acceleration — all seamlessly managed through Docker Desktop.

3. Native Linux support

Run DMR on Linux with Docker CE, making it ideal for automation, CI/CD pipelines, and production workflows.

4. CLI and UI experience

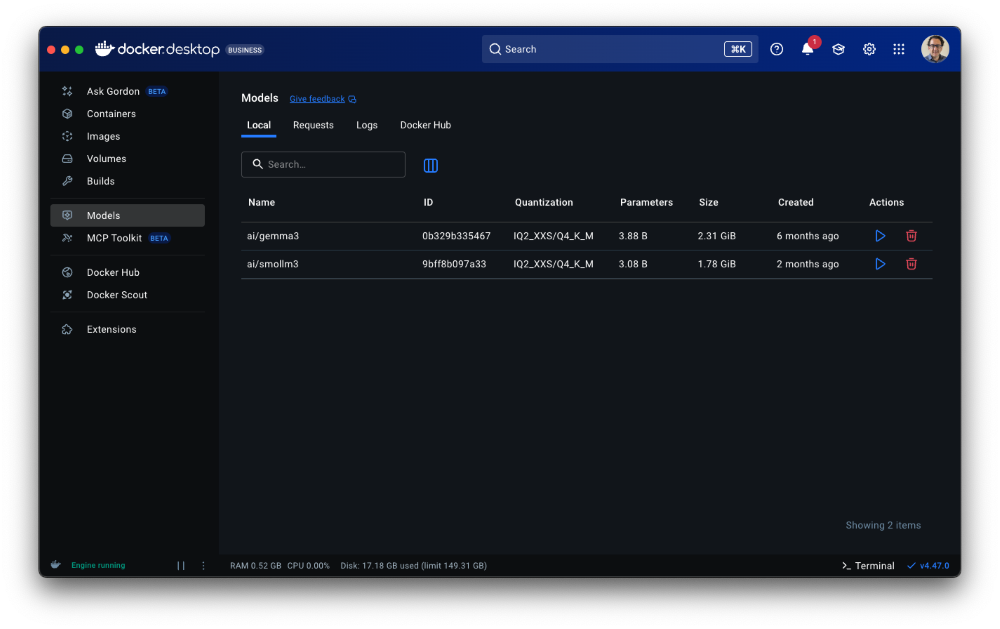

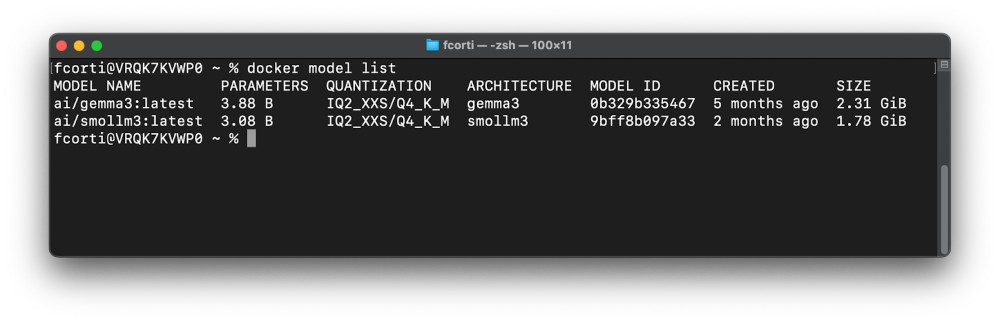

Use DMR from the Docker CLI (on both Docker Desktop and Docker CE) or through Docker Desktop’s UI. The UI provides guided onboarding to help even first-time AI developers start serving models smoothly, with automatic handling of available resources (RAM, GPU, etc.).

Figure 1: Docker Model Runner works both in Docker Desktop and the CLI, letting you run models locally with the same familiar Docker commands and workflows you already know

5. Flexible model distribution

Pull and push models from Docker Hub in OCI format, or pull directly from Hugging Face repositories that host models in GGUF format, for maximum flexibility in sourcing and sharing models.
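As a rough illustration of the two sources, the Python sketch below assembles `docker model pull` and `docker model push` invocations for a given model reference. It assumes that Hugging Face references use an `hf.co/` prefix and that anything else is an OCI registry reference. The model names are placeholders, and the helper functions are hypothetical rather than part of any Docker tooling.

```python
import shlex

# Assumption: Hugging Face repositories are addressed with an "hf.co/" prefix.
HUGGING_FACE_PREFIX = "hf.co/"


def model_source(reference):
    """Guess where a model reference points, based only on its prefix."""
    if reference.startswith(HUGGING_FACE_PREFIX):
        return "Hugging Face (GGUF)"
    return "OCI registry"


def pull_command(reference):
    """Return the argument list for pulling a model."""
    return ["docker", "model", "pull", reference]


def push_command(reference):
    """Return the argument list for pushing a model to an OCI registry."""
    if model_source(reference) != "OCI registry":
        raise ValueError("this sketch only pushes to OCI registries")
    return ["docker", "model", "push", reference]


if __name__ == "__main__":
    # Placeholder references used purely for illustration.
    for ref in ("ai/smollm2", "hf.co/example-org/example-model-GGUF"):
        print(f"{model_source(ref)}: {shlex.join(pull_command(ref))}")
```

The argument lists can be passed to `subprocess.run` as-is, which avoids shell quoting issues if a reference contains unusual characters.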

6. Open Source and free

DMR is fully open source and free for everyone, lowering the barrier to entry for developers experimenting with or building on AI.

7. Secure and controlled

DMR runs in an isolated, sandboxed environment that doesn’t interfere with the main system or user data. Developers and IT admins can fine-tune security and availability by enabling or disabling DMR, or by configuring options such as host-side TCP support and CORS.

8. Configurable inference settings

Developers can customize context length and llama.cpp runtime flags to fit their use cases, with more configuration options coming soon.

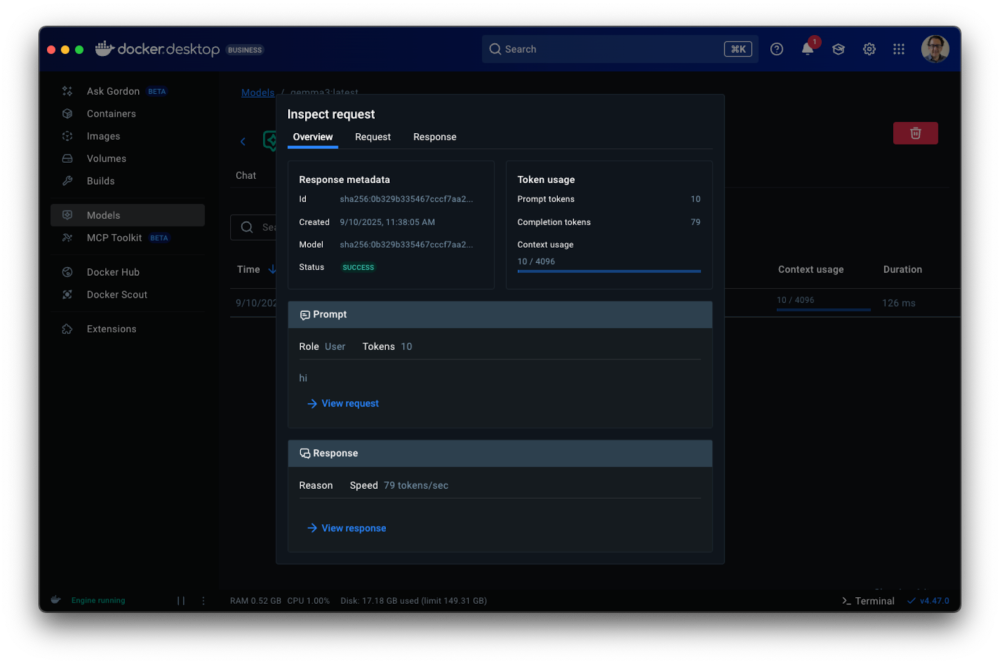

9. Debugging support

Built-in request/response tracing and inspect capabilities make it easier to understand token usage and framework/library behaviors, helping developers debug and optimize their applications.

Figure 2: Built-in tracing and inspect tools in Docker Desktop make debugging easier, giving developers clear visibility into token usage and framework behavior
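Token usage can also be inspected programmatically. The sketch below assumes the response follows the OpenAI chat completions schema, where a `usage` object carries `prompt_tokens`, `completion_tokens`, and `total_tokens`. The sample response is made up for illustration and is not real DMR output, and `summarize_usage` is a hypothetical helper.

```python
def summarize_usage(response):
    """Extract token counts from an OpenAI-style chat completion response."""
    usage = response.get("usage") or {}
    prompt = usage.get("prompt_tokens", 0)
    completion = usage.get("completion_tokens", 0)
    # Fall back to the sum when the server omits total_tokens.
    total = usage.get("total_tokens", prompt + completion)
    return {"prompt": prompt, "completion": completion, "total": total}


# Illustrative response body; the numbers are invented for this example.
sample_response = {
    "choices": [{"message": {"role": "assistant", "content": "Hello!"}}],
    "usage": {"prompt_tokens": 12, "completion_tokens": 3, "total_tokens": 15},
}

if __name__ == "__main__":
    print(summarize_usage(sample_response))
```

Logging these counts per request is a simple way to spot prompts that grow unexpectedly, for example when a framework silently adds system messages or tool definitions.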

10. Integrated with the Docker ecosystem

DMR works out of the box with Docker Compose and is fully integrated with other Docker products, such as Docker Offload (cloud offload service) and Testcontainers, extending its reach into both local and distributed workflows.
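On the application side, a service that Compose binds to a model needs to discover the model's endpoint. The sketch below assumes Compose injects environment variables named after the model entry, such as `LLM_URL` and `LLM_MODEL` for a model called `llm`. That naming is an assumption here and should be verified against the Compose documentation, and `model_settings` is a hypothetical helper.

```python
import os


def model_settings(name, env=None):
    """Read the endpoint URL and model identifier for a Compose model entry.

    Assumes variables named <NAME>_URL and <NAME>_MODEL are present.
    """
    env = os.environ if env is None else env
    prefix = name.upper()
    url = env.get(f"{prefix}_URL")
    model = env.get(f"{prefix}_MODEL")
    if not url or not model:
        raise KeyError(f"missing {prefix}_URL or {prefix}_MODEL in environment")
    return url, model


if __name__ == "__main__":
    # Illustrative values; inside a real service these would come from Compose.
    fake_env = {"LLM_URL": "http://example.internal/v1", "LLM_MODEL": "ai/smollm2"}
    print(model_settings("llm", fake_env))
```

Reading the endpoint from the environment keeps application code identical whether the model runs locally or is served elsewhere.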

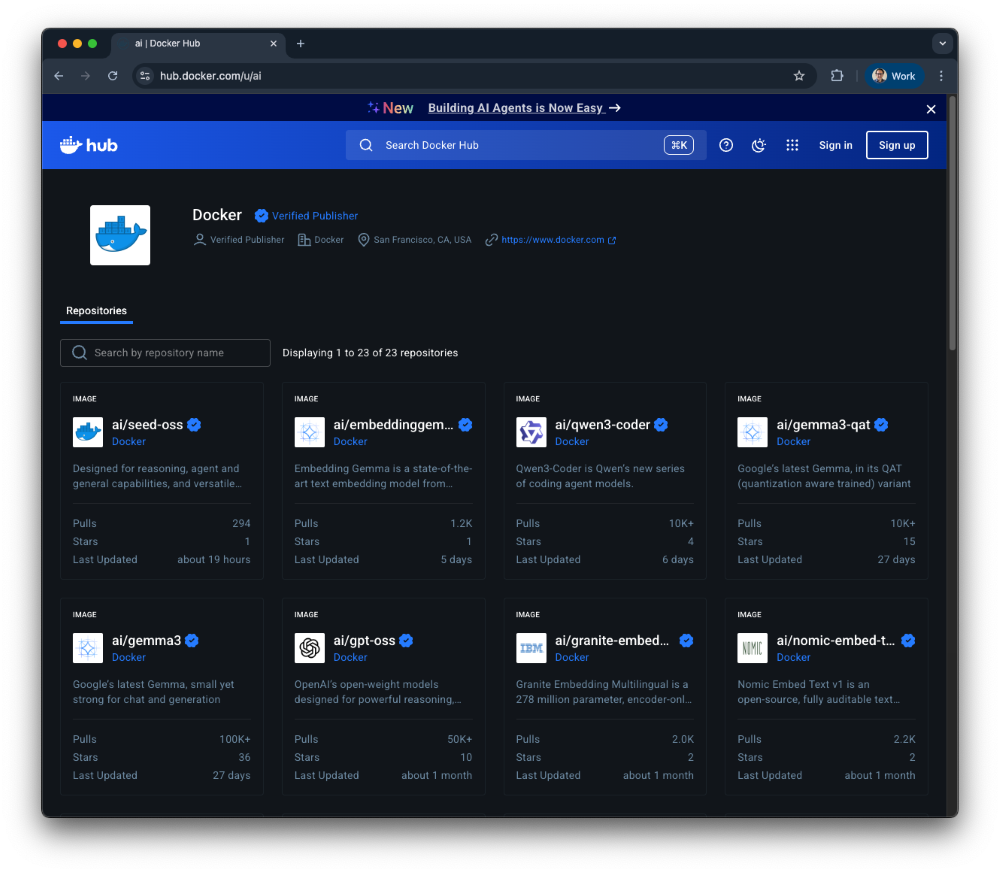

11. Up-to-date model catalog

Access a curated catalog of the most popular and powerful AI models on Docker Hub. These models can be pulled for free and used across development, pipelines, staging, or even production environments.

Figure 3: Curated model catalog on Docker Hub, packaged as OCI Artifacts and ready to run

The road ahead

The future is bright for Docker Model Runner, and the recent GA version is only the first milestone. Below are some of the future enhancements that you should expect to be released soon.

Streamlined User Experience

Our goal is to make DMR simple and intuitive for developers to use and debug. This includes richer response rendering in the chat-like interface within Docker Desktop and the CLI, multimodal support in the UI (already available through the API), integration with MCP tools, and enhanced debugging features, alongside expanded configuration options for greater flexibility. Last but not least, we aim to provide smoother and more seamless integration with third-party tools and solutions across the AI ecosystem.

Enhanced performance and execution flexibility

We remain focused on continuously improving DMR’s performance and flexibility for running local models. Upcoming enhancements include support for the most widely used inference libraries and engines, advanced configuration options at the engine and model level, and the ability to deploy Model Runner independently from Docker Engine for production-grade use cases, along with many more improvements on the horizon.

Frictionless Onboarding

We want first-time AI developers to start building their applications right away, and to do so with the right foundations. To achieve this, we plan to make onboarding into DMR even more seamless. This will include a guided, step-by-step experience to help developers get started quickly, paired with a set of sample applications built on DMR. These samples will highlight real-world use cases and best practices, providing a smooth entry point for experimenting with and adopting DMR in everyday workflows.

Staying on Top of Model Launch

As we continue to enhance inference capabilities, we remain committed to maintaining a first-class catalog of AI models directly in Docker Hub, the leading registry for OCI artifacts, including models. Our goal is to ensure that new, relevant models are available in Docker Hub and runnable through DMR as soon as they are publicly released.

Conclusion

Docker Model Runner has come a long way in a short time, evolving from its Beta release into a mature and stable inference engine that’s now generally available. At its core, the mission has always been clear: make it simple, consistent, and secure for developers to pull, run, and serve AI models locally, using familiar Docker CLI commands and tools they already love!

Now is the perfect time to get started. If you haven’t already, install Docker Desktop and try out Docker Model Runner today. Follow the official documentation to explore its capabilities and see for yourself how DMR can accelerate your journey into building AI-powered applications.

Learn more

- Read our quickstart guide to Docker Model Runner.

- Visit our Model Runner GitHub repo! Docker Model Runner is open-source, and we welcome collaboration and contributions from the community!

- Learn how Compose works with Model Runner, making building AI apps and agents easier

- Learn how to build an AI tutor

- Explore how to use both local and remote models in hybrid AI workflows

- Building AI agents made easy with Goose and Docker

- Using Model Runner on Hugging Face

- Powering AI generated testing with Docker Model Runner

- Build a GenAI App With Java Using Spring AI and Docker Model Runner

- Tool Calling with Local LLMs: A Practical Evaluation

- Behind the scenes: How we designed Docker Model Runner and what’s next

- Why Docker Chose OCI Artifacts for AI Model Packaging

- What’s new with Docker Model Runner

- Publishing AI models to Docker Hub

- How to Build and Run a GenAI ChatBot from Scratch using Docker Model Runner