A practical approach to escaping the expensive, slow world of API-dependent AI

The $20K Monthly Reality Check

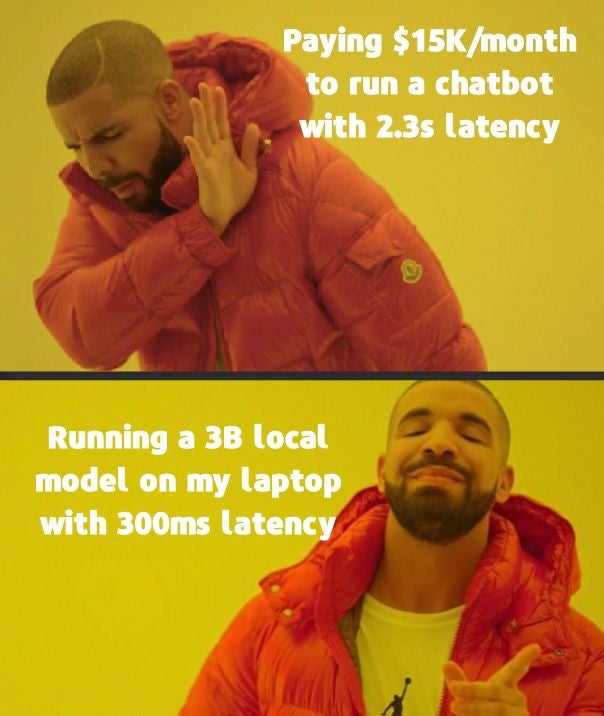

You built a simple sentiment analyzer for customer reviews. It works great. Except it costs $847/month in API calls and takes 2.3 seconds to classify a single review. Your “smart” document classifier burns through $3,200/month. Your chatbot feature? $15,000/month and counting.

The Shared Pain:

- Bloated AI features that drain budgets faster than they create value

- High latency that kills user experience (nobody waits 3 seconds for a “smart” response)

- Privacy concerns when sensitive data must leave your network

- Compliance nightmares when proprietary data goes through third-party APIs

- Developer friction from being locked into massive, remote-only models

Remocal + Minimum Viable Models = Sweet Spot

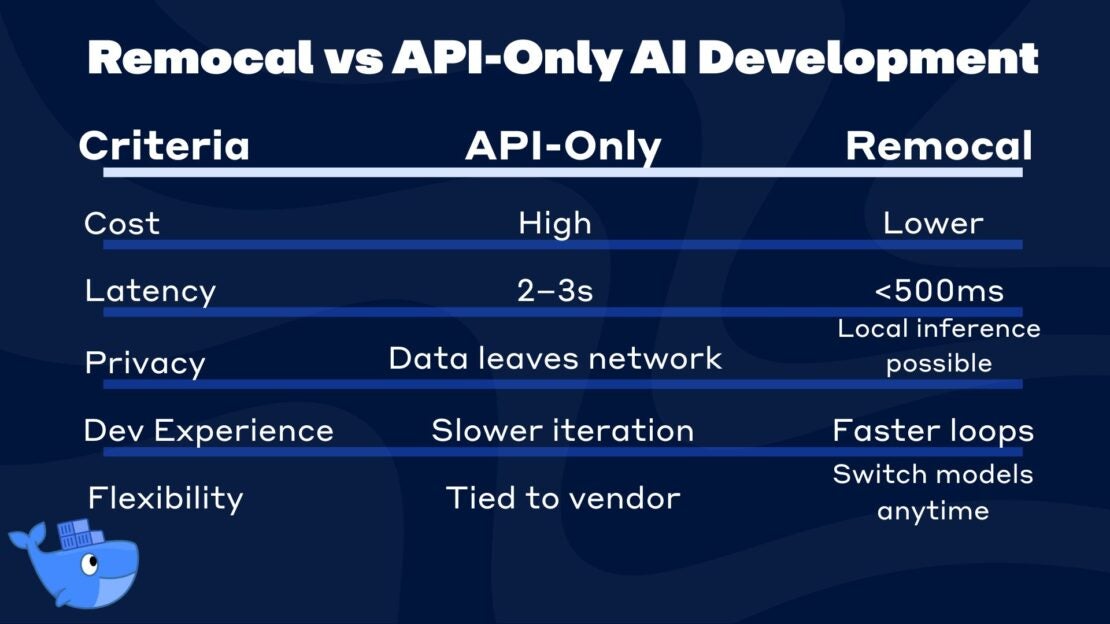

Remocal (remote + local) is a novel hybrid development approach that combines local development environments with on-demand access to cloud resources, allowing developers to work locally while seamlessly tapping into powerful remote compute when needed. This approach solves longstanding problems in traditional development by eliminating the friction of deploying to staging environments for realistic testing, reducing the overhead of managing complex cloud setups, and providing instant access to production-scale resources without leaving the familiar local development workflow.

It’s particularly effective for AI development because it addresses the fundamental tension between accessible local iteration and the substantial computational requirements of large modern AI models. With Remocal, developers can build and test their agents locally, using local models. They can also burst out to cloud GPUs when the AI use case or workload exceeds local viability. We believe this will democratize AI development by making it easier and cheaper to build AI applications with minimal resources. For businesses, a Remocal approach to AI development presents a much more affordable path to build machine learning applications and a much better developer experience that allows faster iteration and causes less frustration.

Remocal teaches us to develop locally and then add horsepower with cloud acceleration only when needed. A logical and increasingly essential extension of this principle is Minimum Viable Model (MVM).

In a nutshell, the Minimum Viable Model (MVM) approach means deploying the smallest, most efficient model that solves your core business problem effectively. The same guidance applies to models deployed in the cloud: you would not spin up a massive Kubernetes cluster well before you need it, or fire up an enormous PostgreSQL instance in the cloud simply to test an application. Combine MVM with Remocal software development and you get the best of both worlds.
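One way to read the MVM principle is as a selection rule: among the candidates that clear your quality bar, take the smallest. The sketch below assumes you have already scored each candidate on your own evaluation set. The model labels, sizes and accuracy figures are hypothetical placeholders, not benchmark results.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    name: str               # hypothetical label, not a real model ID
    params_billions: float  # model size
    accuracy: float         # measured on your own eval set, 0.0 to 1.0


def minimum_viable_model(
    candidates: Sequence[Candidate], min_accuracy: float
) -> Optional[Candidate]:
    """Return the smallest candidate that meets the accuracy bar, or None."""
    viable = [c for c in candidates if c.accuracy >= min_accuracy]
    if not viable:
        return None  # nothing local is good enough; time to look at the cloud
    return min(viable, key=lambda c: c.params_billions)


# Placeholder numbers for illustration only.
CANDIDATES = [
    Candidate("small-3b", 3, 0.88),
    Candidate("medium-14b", 14, 0.93),
    Candidate("large-70b", 70, 0.95),
]

if __name__ == "__main__":
    choice = minimum_viable_model(CANDIDATES, min_accuracy=0.90)
    print(choice.name if choice else "no candidate clears the bar")
```

The accuracy bar is a product decision, not a technical one: lowering it from 0.90 to 0.85 in this toy data would make the 3B candidate the minimum viable model.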

Why Right-Sized Models Are Having Their Moment

If you can’t build a proof of concept (POC) with a local model, think hard about what you are building, because today’s local models can handle what the vast majority of AI applications require during development. AI researchers have come up with a number of clever ways to shrink models without losing capability, including:

Curated-data SLMs (small language models): Microsoft’s latest Phi-4 family shows that carefully filtered, high-quality training corpora let sub-15B models rival or beat much larger models on language, coding and math benchmarks, slashing memory and latency needs.

Quantization: Packing weights into 4-bit NF4 blocks and training low-rank adapter layers on top (the QLoRA recipe) keeps accuracy within about one point while cutting GPU memory by roughly 75%, which brings training and inference within reach of laptop-class hardware.
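To make the idea concrete, here is a minimal sketch of block-wise 4-bit quantization. It is deliberately simplified: it uses uniform absmax rounding to signed integers rather than the NF4 codebook named above, and it omits the adapter layers entirely. The function names are illustrative, not taken from any library.

```python
from typing import List, Tuple


def quantize_block(weights: List[float]) -> Tuple[List[int], float]:
    """Map a block of floats to signed 4-bit codes in [-7, 7] plus one scale."""
    absmax = max(abs(w) for w in weights)
    scale = absmax / 7 if absmax > 0 else 1.0  # one float scale per block
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize_block(codes: List[int], scale: float) -> List[float]:
    """Recover approximate weights from the codes and the block scale."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    block = [0.12, -0.5, 0.33, 0.07]
    codes, scale = quantize_block(block)
    restored = dequantize_block(codes, scale)
    worst = max(abs(a - b) for a, b in zip(block, restored))
    print(codes, f"max error {worst:.4f}")
```

Each weight now needs 4 bits instead of 16, plus a small per-block overhead for the scale. The rounding error per weight is bounded by half the scale, which is why small blocks with their own scales preserve accuracy better than one scale for the whole tensor.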

Sparse Mixture-of-Experts (MoE): Architectures such as Mistral’s Mixtral 8×7B route each token through just two of eight expert blocks, activating only about 13B of its roughly 47B parameters per step yet matching dense peers, so serving costs scale with active, not total, parameters.
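The routing step can be sketched in a few lines. This is a toy illustration, not Mixtral’s implementation: the “experts” here are plain scalar functions and the router logits are made up, but the mechanics are the same in spirit. Pick the top two experts per token, renormalize their scores with a softmax, and run only those two.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x: float, router_logits: Sequence[float],
                experts: Sequence[Expert], k: int = 2) -> float:
    """Weighted sum over the selected experts; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Made-up router scores for one token across eight experts.
LOGITS = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]
# Toy experts: expert i multiplies its input by (i + 1).
EXPERTS = [lambda x, s=s: s * x for s in range(1, 9)]

if __name__ == "__main__":
    print(route_top_k(LOGITS))
    print(moe_forward(1.0, LOGITS, EXPERTS))
```

Because six of the eight experts are skipped for this token, compute per token tracks the two active experts, while memory still has to hold all eight.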

Memory-efficient attention kernels: Libraries like FlashAttention-2 reorder reads/writes so attention fits in on-chip SRAM, doubling throughput and enabling larger context windows on commodity GPUs.

On-device “nano” models: Google’s Gemini Nano ships directly inside Chrome and Android, proving that sub-4B models can run private, low-latency inference on phones and browsers without cloud calls.

MVM-Friendly Production-Ready Models

MVM-Ready Models

| Model | Size (B params) | What it’s good at | Typical hardware footprint* | Why it punches above its weight |
|---|---|---|---|---|
|  | 32B | Dual-mode reasoning (thinking/non-thinking), multilingual (119 languages), long context (32K-128K), tool calling | RTX 4090 or H100; ~64GB VRAM full precision, ~16GB with 4-bit quantization | Hybrid thinking modes allow switching between fast responses and deep reasoning; competitive with much larger models on coding and math |
|  | 27B | Multimodal (text + images), 140+ languages, function calling, 128K context | Single H100 or RTX 3090 (24GB); ~54GB full precision, ~14GB with int4 quantization | Achieves 98% of DeepSeek-R1’s performance using only 1 GPU vs 32; optimized quantization maintains quality at 4x compression |
|  | 24B | Fast inference (150 tokens/s), multimodal, function calling, instruction following | RTX 4090 or A100; ~55GB VRAM bf16/fp16, ~14GB with quantization | 3x faster than Llama 3.3 70B; improved stability and reduced repetitive outputs; optimized for low-latency applications |
|  | ~70B | Text + emerging multimodal, long context (128K tokens) | Larger desktops in 4-bit quantization mode (~45GB VRAM) | Trained on 15T high-quality tokens; competitive on benchmarks; integrates well into RAG/agent pipelines; versatile |
|  | 3B | Dual-mode reasoning, multilingual (6 languages), long context (128K), tool calling | RTX 3060 or modest hardware; ~6GB VRAM, runs on laptops and edge devices | Competes with 4B+ models despite 3B size; efficient architecture with grouped-query attention and optimized training curriculum |
|  | 14B | Complex reasoning, math, coding, general chat | 4-bit ≈ 10-15GB VRAM; runs on RTX 4090 or H100 | Trained on 9.8T tokens with synthetic data and rigorous filtering; outperforms Llama 3.3 70B on math/reasoning tasks while being 5x smaller |

*Hardware footprint notes: Requirements vary significantly with quantization level and context length. Full-precision numbers assume bf16/fp16. Quantized models (4-bit/8-bit) can reduce memory requirements by 2-4x with minimal quality loss. Edge deployment is possible for smaller models with appropriate optimization.
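The weight-only part of these footprints is simple arithmetic: parameter count times bytes per parameter. The helper below is a back-of-the-envelope sketch with a made-up function name. It deliberately ignores the KV cache, activations and runtime overhead, all of which grow with context length, so treat its output as a floor rather than a sizing guide.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes).

    params_billions * 1e9 weights * (bits / 8) bytes each / 1e9 bytes per GB
    simplifies to params_billions * bits / 8.
    """
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    for size in (3, 14, 32):
        fp16 = weight_memory_gb(size, 16)
        int4 = weight_memory_gb(size, 4)
        print(f"{size}B: {fp16:.1f} GB at 16-bit, {int4:.1f} GB at 4-bit")
```

For a 32B model this gives 64 GB at 16-bit and 16 GB at 4-bit, in line with the 32B row above. Rows that quote higher numbers than this formula produces are accounting for the overheads the sketch leaves out.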

Efforts to shrink models while preserving capability are only getting started, so Remocal + MVM will become more viable over time and cover a wider range of use cases that are out of reach today. Each generation should bring better models that run on the same hardware as their predecessors. Even now, a number of highly capable models run locally without any problem, and some developers are building apps and libraries that take advantage of browser-based AI capabilities.

Even today, these small models with superpowers span the full spectrum of capabilities and tool use: NLP, machine vision, general language models, and more. We expect that diversity to grow as fine-tuned versions of small models continue to emerge on Hugging Face (and come pre-packaged for Docker Model Runner).

That said, there are plenty of use cases where local development on an MVM is only the first step, and access to bigger models, more powerful GPUs, or AI training and inference clusters is essential. Remocal + MVM delivers the best of both worlds: fast, cost-effective local inference for everyday tasks combined with frictionless access to powerful cloud models when you hit complexity limits. You can prototype and iterate rapidly on local hardware, then scale to frontier models for demanding workloads, all within the same development environment. You’re not locked into either local-only constraints or cloud-only costs. Instead, each task can be routed to the right model size, optimizing both performance and economics across your entire AI pipeline.
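The article does not prescribe a routing mechanism, so the following is only one possible pattern: a confidence-threshold cascade that tries the local model first and escalates to the cloud when the local answer looks shaky. Both models are hypothetical stubs here. In a real system they would wrap a local inference endpoint and a hosted API, and the 0.8 threshold is an arbitrary starting point you would tune.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# A "model" is any callable returning (label, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


@dataclass(frozen=True)
class Routed:
    label: str
    confidence: float
    served_by: str  # "local" or "cloud"


def classify_with_fallback(text: str, local_model: Model, cloud_model: Model,
                           min_confidence: float = 0.8) -> Routed:
    """Answer locally when confident; otherwise escalate to the cloud model."""
    label, conf = local_model(text)
    if conf >= min_confidence:
        return Routed(label, conf, "local")
    label, conf = cloud_model(text)  # only pay for the big model when needed
    return Routed(label, conf, "cloud")


def stub_local(text: str) -> Tuple[str, float]:
    """Hypothetical stand-in for a small local sentiment model."""
    lowered = text.lower()
    if "great" in lowered:
        return "positive", 0.95
    if "terrible" in lowered:
        return "negative", 0.95
    return "neutral", 0.40  # unsure: the cascade will escalate this one


def stub_cloud(text: str) -> Tuple[str, float]:
    """Hypothetical stand-in for a larger hosted model."""
    return "neutral", 0.90


if __name__ == "__main__":
    for review in ("Great product, works as described", "It arrived on a Tuesday"):
        print(review, "->", classify_with_fallback(review, stub_local, stub_cloud))
```

Logging the `served_by` field over time tells you what fraction of traffic actually needs the larger model, which is the number that drives the cost comparison.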

Rubrics for Local Models vs API Giants

Remocal + MVM will not suit every situation. Work through a full product requirements spec to clarify your use cases and verify that MVM is viable for what you plan to build.

Stick with API Models When:

- You need broad world knowledge or current events

- Complex, multi-step reasoning across diverse domains is required

- You’re building general-purpose conversational AI

- You have fewer than 1,000 requests per month

- Accuracy improvements of 2-5% justify 100x higher costs

Use Right-Sized Models When:

- Your task is well-defined (classification, code completion, document processing)

- You need consistent, low-latency responses

- Cost per inference matters for your business model

- Data privacy or compliance is a concern

- You want developer independence from API rate limits
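The two checklists above can be turned into a rough scoring helper. This is a sketch, not a decision procedure: the field names are invented for illustration, and giving every criterion equal weight is an assumption of this example. In practice a single hard requirement, such as data that may not leave your network, can outweigh everything else.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    # Signals from the "stick with API models" list
    needs_broad_or_current_knowledge: bool = False
    needs_cross_domain_reasoning: bool = False
    general_purpose_chat: bool = False
    requests_per_month: int = 0
    small_accuracy_gain_worth_big_cost: bool = False
    # Signals from the "use right-sized models" list
    task_is_well_defined: bool = False
    needs_low_latency: bool = False
    cost_per_inference_matters: bool = False
    privacy_or_compliance_concern: bool = False
    wants_freedom_from_rate_limits: bool = False


def recommend(w: Workload) -> str:
    """Count how many criteria from each list apply (equal weights assumed)."""
    api_score = sum([
        w.needs_broad_or_current_knowledge,
        w.needs_cross_domain_reasoning,
        w.general_purpose_chat,
        w.requests_per_month < 1_000,
        w.small_accuracy_gain_worth_big_cost,
    ])
    local_score = sum([
        w.task_is_well_defined,
        w.needs_low_latency,
        w.cost_per_inference_matters,
        w.privacy_or_compliance_concern,
        w.wants_freedom_from_rate_limits,
    ])
    if local_score > api_score:
        return "right-sized model"
    if api_score > local_score:
        return "API model"
    return "evaluate both"


if __name__ == "__main__":
    review_classifier = Workload(
        requests_per_month=500_000,
        task_is_well_defined=True,
        needs_low_latency=True,
        cost_per_inference_matters=True,
        privacy_or_compliance_concern=True,
    )
    print(recommend(review_classifier))
```

A high-volume review classifier like the one in the opening example lands firmly on the right-sized side, while a low-volume, open-ended assistant would score the other way.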

The classic 80/20 rule applies to Remocal + MVM. Most production AI applications fall into well-defined categories where right-sized models can come close to large-model performance at a small fraction of the cost, with higher velocity, greater flexibility and better security.

Conclusion: The Dawn of Practical AI

The era of “bigger is always better” in AI is giving way to a more nuanced understanding: the right model for the right job and a “Unix” mindset of “smaller tools for more specific jobs”. With Microsoft’s Phi-4 achieving GPT-4o-mini level performance in a 14B parameter package, and Gemini Nano running sophisticated AI directly in your browser, we’re witnessing the democratization of AI capabilities. That means any developer anywhere with a decent laptop can build sophisticated AI applications.

The Remocal + Minimum Viable Models approach to AI goes beyond cost savings. It also solves issues around control, flexibility, pace of iteration, and developer experience that formerly vexed Platform and MLOps teams. It will allow a thousand AI apps to bloom, and make it far simpler to build to spec and change direction quickly. Starting with local AI development also means better security, better compliance and less risk, which is particularly important for the vast majority of developers who are not experienced in AI security and compliance.

Whether you’re building a customer service chatbot, a code completion tool, or a document analyzer, there’s likely a small, efficient model that can handle your use case without the complexity, cost, and privacy concerns of cloud APIs. Then, when the time is right and the application requires it, an organization can burst its AI workload to more powerful GPU clusters in the cloud for training and inference, or switch to a larger, more powerful model on demand. Local versus cloud for AI development is a false choice. Most organizations are better off having both: small local models that keep developers fast, nimble and autonomous, plus the capacity to burst into big cloud GPUs and state-of-the-art model APIs when an application or use case requires it.

The future belongs to organizations that can strategically mix local efficiency with cloud scale, using the minimum viable model that gets the job done. Start local, prove value, then scale strategically.