Hi, I’m Sergei Shitikov – a Docker Captain and Lead Software Engineer living in Berlin. I’m focused on DevOps, developer experience, open source, and local AI tools. I created this extension to make it easier for anyone – even without a technical background – to get started with local LLMs using Docker Model Runner and Open WebUI.

This new Docker extension lets you run Docker Model Runner as the inference service behind Open WebUI, powering a richer chat experience. This post walks you through why Docker Model Runner and Open WebUI make a powerful duo, how to set up your own local AI assistant, and what’s happening under the hood.

Local LLMs are no longer just experimental toys. Thanks to rapid advances in model optimization and increasingly powerful consumer hardware, local large language models have gone from proof-of-concept curiosities to genuinely useful tools.

Even a MacBook with an M-series chip can now run models that deliver fast, meaningful responses offline, without an internet connection or API keys.

Docker Model Runner is available in Docker Desktop and as a plugin for Docker CE, and it is fully open source. It makes getting started easy: just pick a model in the UI or run a single docker model run command from the CLI.

You’ll have a fully operational model up and running in seconds.

Docker Model Runner + Open WebUI: A powerful duo for running richer, local AI chat experiences

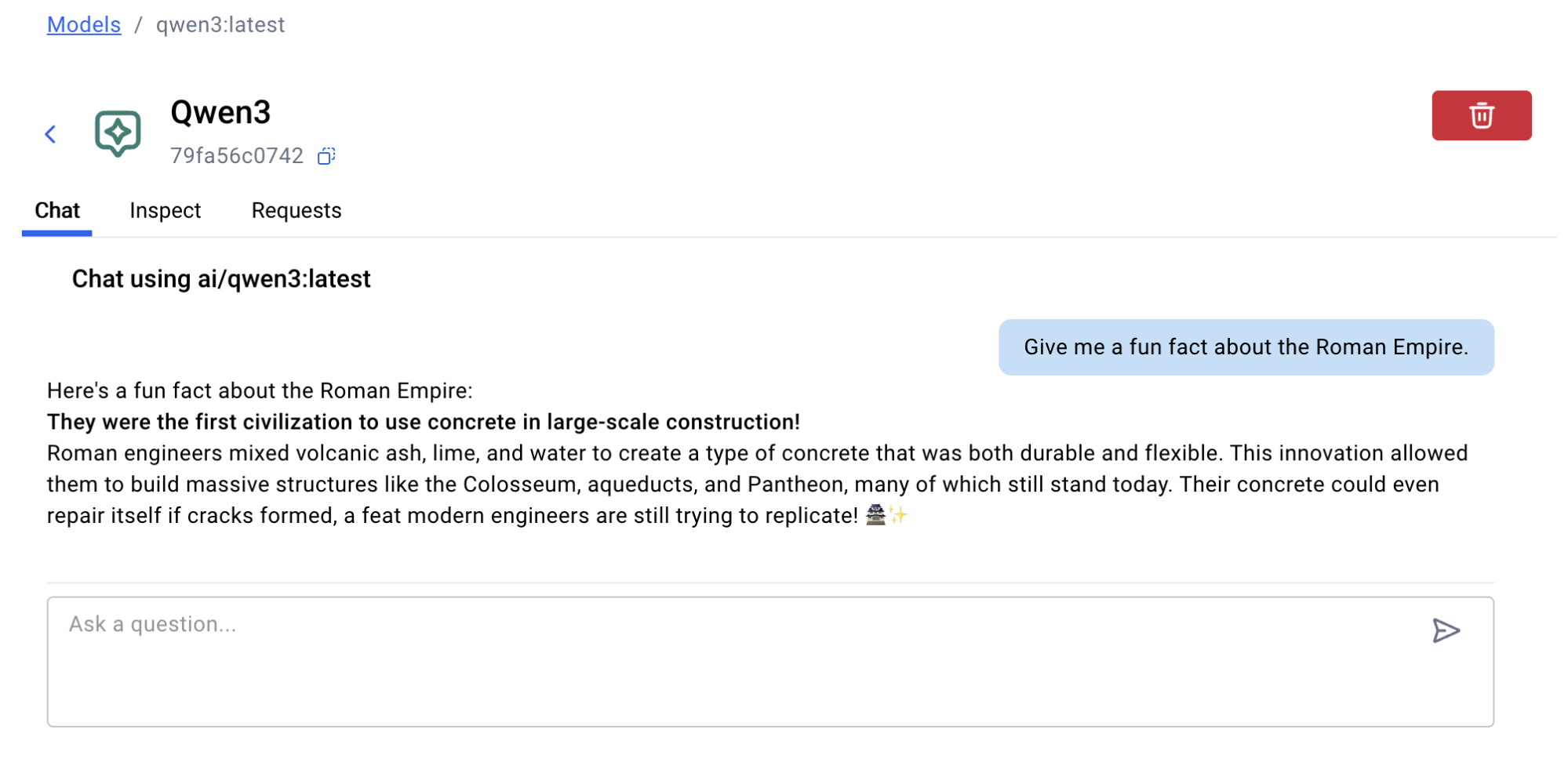

Docker Model Runner is designed as an inference service in Docker Desktop and the CLI, letting developers run models locally with the familiar workflows and commands they know and trust. Its built-in interface therefore provides only the bare minimum: a prompt box and a response field. There’s no memory. No file upload. No chat flow. No interface that feels like a real assistant. This is intentional: Docker Desktop does not aim to replicate a full chat experience that other tools in the wider ecosystem already implement well.

That’s where Open WebUI comes in: a modern, self-hosted interface designed specifically for working with local LLMs.

It brings chat history, file uploads, prompt editing, and more. All local. All private.

That’s why an extension was created: to combine the two.

This Docker Extension launches Open WebUI and hooks it directly into your running model via Docker Model Runner. No configuration. No setup. Just open and go.

Let’s see how it works.

From Zero to Local AI Assistant in a Few Clicks

If you already have Docker Desktop installed, you’re almost there.

Head over to the Models tab and pick any model from the Docker Hub section: GPT-OSS, Gemma, LLaMA 3, Mistral or others.

One click, and Docker Model Runner will pull the model and start serving it locally.

Prefer the CLI? A single docker model pull does the same job.
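If you want to script that step, the pull can also be driven from Python. This is only a minimal sketch: it assumes the docker CLI with Docker Model Runner is on your PATH, and the helper names are hypothetical. Any model name you pass in (for example one you see in the Models tab) is up to you.

```python
import shutil
import subprocess


def build_pull_command(model: str) -> list[str]:
    """Return the argument list for `docker model pull <model>`."""
    name = model.strip()
    if not name:
        raise ValueError("model name must not be empty")
    return ["docker", "model", "pull", name]


def pull_model(model: str) -> int:
    """Run the pull and return the CLI exit code (0 means success)."""
    # Assumption: the docker CLI is installed and Model Runner is enabled.
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    return subprocess.run(build_pull_command(model), check=False).returncode
```

Calling pull_model with a model name streams the CLI's progress output to your terminal, just like running the command by hand.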

Next, you might want something more capable than a single input box.

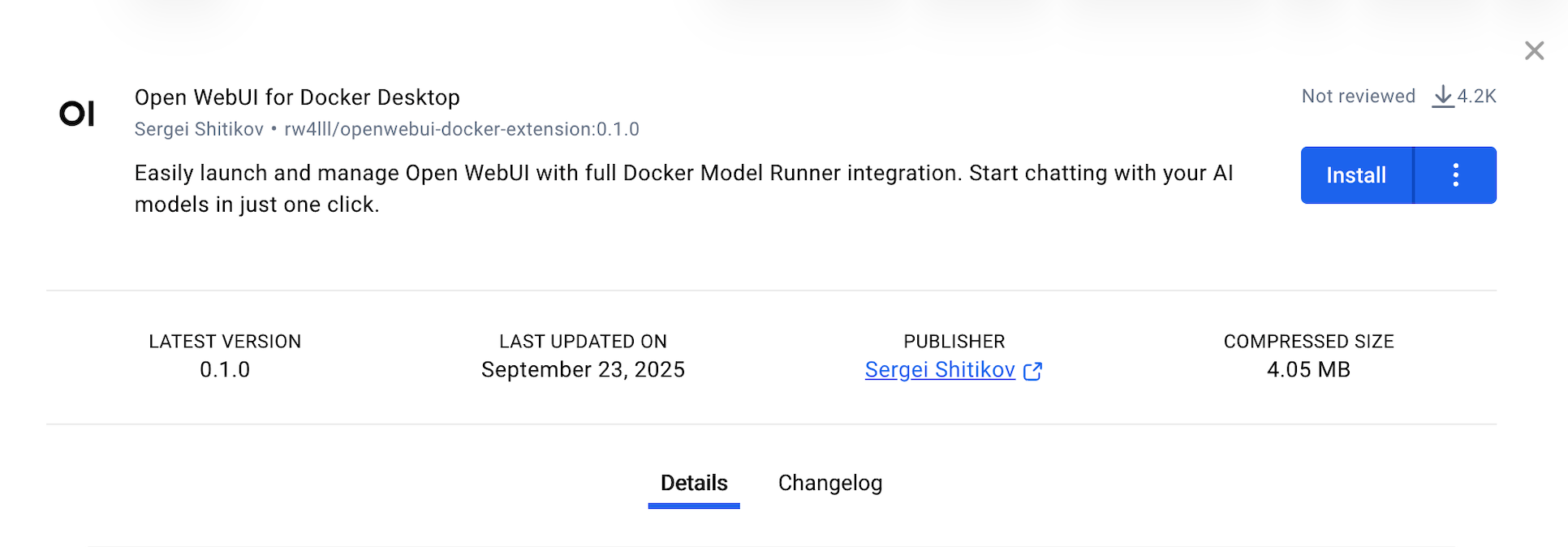

Open the Extensions Marketplace inside Docker Desktop and install the Open WebUI extension, a feature-rich interface for local LLMs.

It automatically provisions the container and connects to your local Docker Model Runner.

All models you’ve downloaded will appear in the WebUI, ready to use; no manual config, no environment variables, no port mapping.

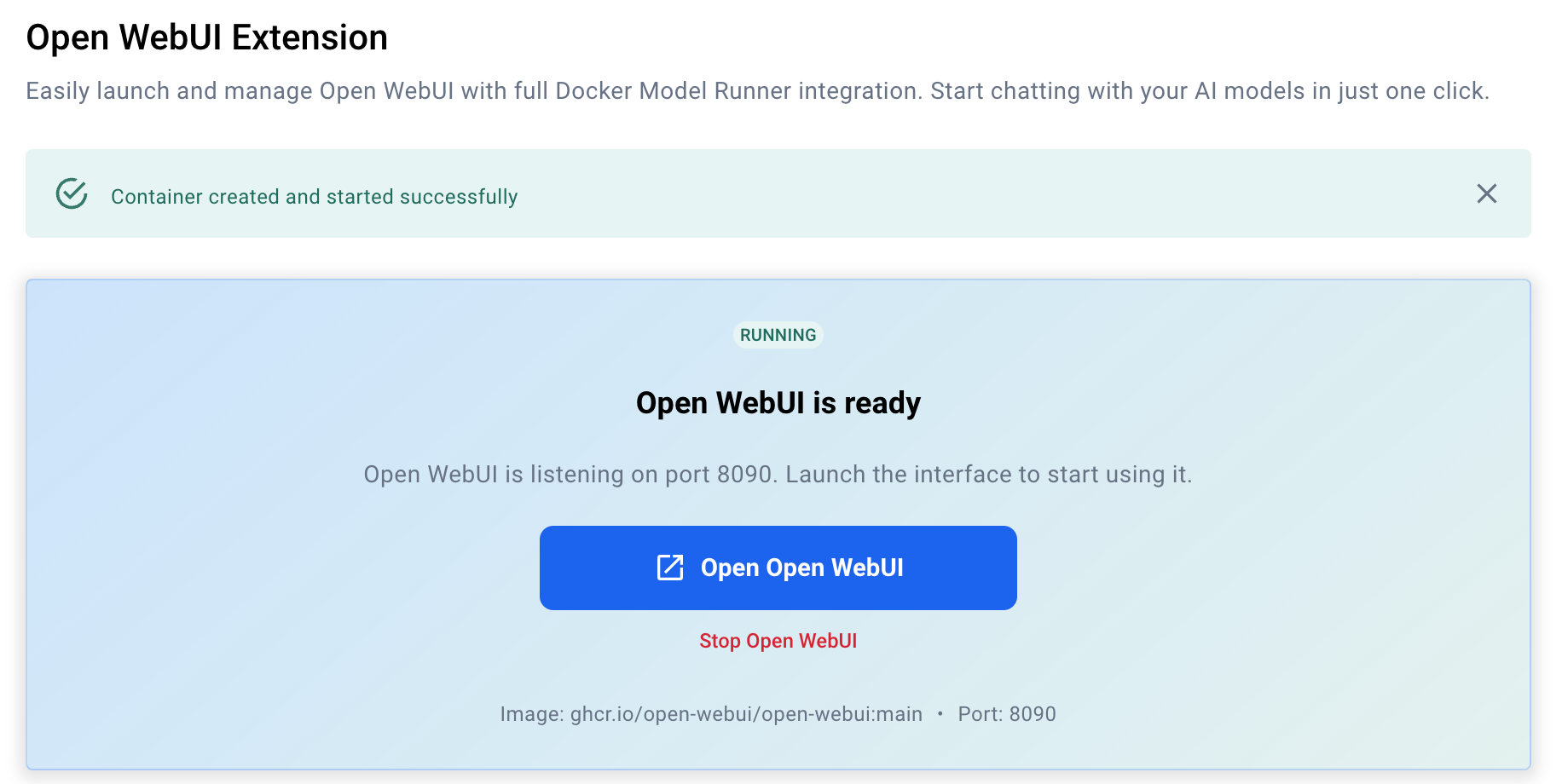

Once setup completes, you’ll see a screen confirming the extension is running, along with a button to launch the interface in your browser.

Alternatively, you can open it manually at http://localhost:8090 (default port) or bookmark it for future use.

Note: The first-time startup may take a couple of minutes as Open WebUI installs required components and configures integration.

Subsequent launches are much faster – nearly instant.
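Because that first startup can take a while, a script that depends on the interface may want to wait until it responds. Here is one possible sketch using only the Python standard library. The function names, retry count, and delay are illustrative assumptions; the URL is the default one mentioned above.

```python
import time
import urllib.error
import urllib.request


def is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if the URL answers with an HTTP status below 500."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status < 500
    except urllib.error.HTTPError as err:
        # The server answered, even if with an error status.
        return err.code < 500
    except OSError:
        # Connection refused, timeout, DNS failure, and similar.
        return False


def wait_until_ready(url, attempts=60, delay=5.0, probe=is_up, sleep=time.sleep):
    """Poll the URL until the probe succeeds or the attempts run out."""
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

With the defaults, wait_until_ready("http://localhost:8090") gives up after roughly five minutes. The probe and sleep parameters exist only so the loop can be exercised without a running container.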

What You Can Do with Open WebUI

Once installed, Open WebUI feels instantly familiar, like using ChatGPT, but running entirely on your own machine.

You get a full chat experience, with persistent conversations, system prompt editing, and the ability to switch between models on the fly.

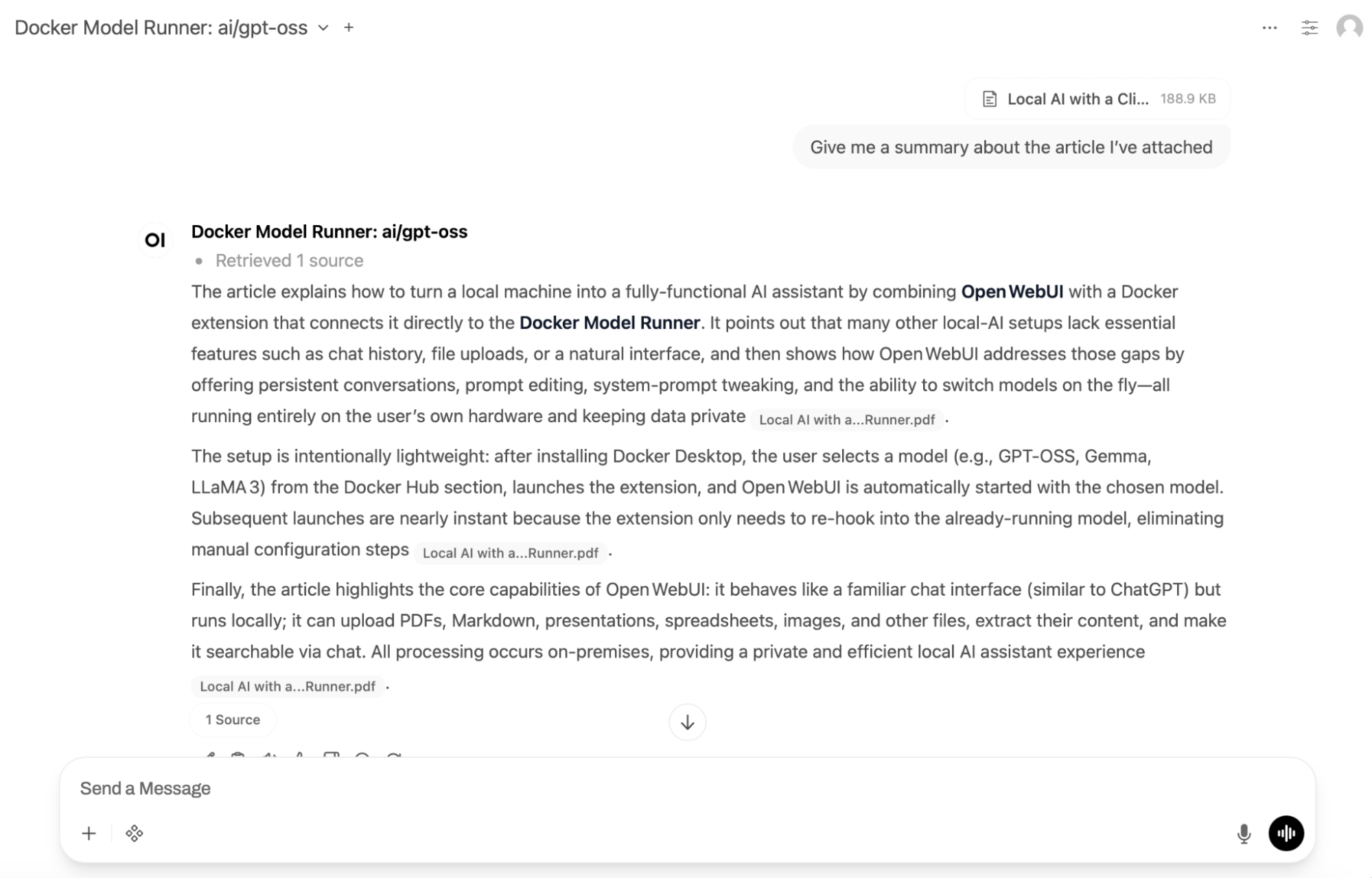

Upload files and chat with them

Drop in PDFs, Markdown files, presentations, spreadsheets, or even images.

Open WebUI extracts the content and makes it queryable through the chat.

Need a summary, quick answer, or content overview? Just ask: all processing happens locally.

Speak instead of type

With voice input turned on, you can talk to your assistant right from the browser.

This is great for hands-free tasks, quick prompts, or just demoing your local AI setup to a friend.

Voice input requires granting your browser permission to use the microphone.

Define how your model behaves

Open WebUI supports full control over system prompts with templates, variables, and chat presets.

Whether you’re drafting code, writing blog posts, or answering emails, you can fine-tune how the model thinks and responds.

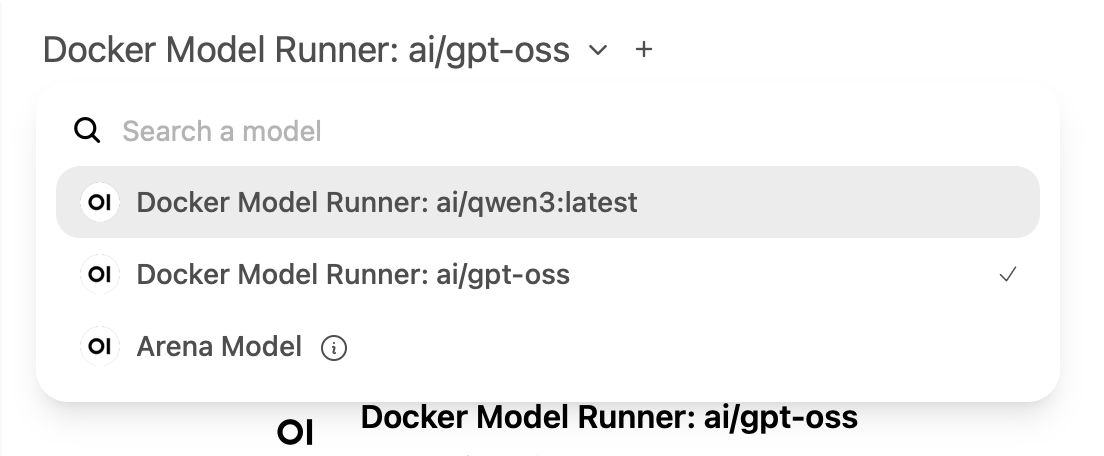

Switch between models instantly

Already downloaded multiple models using Docker Model Runner?

Open WebUI detects them automatically. Pick any model from the dropdown and start chatting; no restart required.
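One way to picture why no restart is needed: assuming the interface talks to the model server through an OpenAI-style chat completions request (an assumption, not something this post spells out), switching models only changes one field of the request. The helper below is a hypothetical sketch, and the model names used with it are placeholders.

```python
import json


def chat_request(model: str, user_message: str, system_prompt: str = "") -> str:
    """Build an OpenAI-style chat completions body as a JSON string."""
    messages = []
    if system_prompt:
        # An optional system prompt steers how the model responds.
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    return json.dumps({"model": model, "messages": messages})
```

Two calls with the same message and different model values produce bodies that differ only in the model field, which is all a model switch amounts to in this picture.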

Save insights to memory

Want the model to remember something specific?

You can store facts or reminders manually in the personal memory panel and edit or remove them at any time.

More Things You Can Do

Open WebUI goes beyond chat with advanced tools that power real workflows:

- Function Calling & Plugins: Use prebuilt tools or define your own Python functions via the Pipelines framework, ideal for automations, translations, or data lookups.

- Multilingual UI: Open WebUI supports a wide range of interface languages and is fully localizable, perfect for international teams or non-English users.

- Secure, local-first by design: No sign-up and no cloud storage. Everything stays on your machine, under your control.
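To give a feel for what a custom Python function might look like, here is a small hypothetical example. The layout (a Tools class whose typed, documented methods become callable tools) is an assumption about Open WebUI's conventions, so check the Open WebUI documentation before relying on it. The method names and the lookup table are made up for illustration.

```python
class Tools:
    """Hypothetical tool collection; the class layout is an assumption."""

    # Made-up lookup data standing in for a real data source.
    _DEFAULT_PORTS = {"http": 80, "https": 443, "ssh": 22}

    def word_count(self, text: str) -> int:
        """
        Count the whitespace-separated words in a piece of text.
        :param text: The text to analyze.
        :return: The number of words.
        """
        return len(text.split())

    def default_port(self, service: str) -> str:
        """
        Look up the conventional port for a network service.
        :param service: Service name such as "https".
        :return: A short human-readable answer.
        """
        port = self._DEFAULT_PORTS.get(service.strip().lower())
        if port is None:
            return f"No default port known for {service!r}."
        return f"{service.strip().lower()} usually listens on port {port}."
```

Type hints and docstrings matter in a design like this because they are what lets a model decide when and how to call each function.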

Note: Not all features are universally available. Some depend on the model’s capabilities (e.g., function calling, image understanding), your current Open WebUI settings (e.g., voice input, plugins), or the hardware you’re running on (e.g., GPU acceleration, local RAG performance).

Open WebUI aims to provide a flexible platform, but actual functionality may vary based on your setup.

How it works inside

Under the hood, the extension brings together two key components: integration between Open WebUI and Docker Model Runner, and a dynamic container provisioner built into the Docker extension.

Open WebUI supports Python-based “functions”, lightweight plugins that extend model behavior.

This extension includes a function that connects to Docker Model Runner via its local API, allowing the interface to list and access all downloaded models automatically.
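The following is a rough sketch of the model-listing idea, not the extension's actual function. It assumes Docker Model Runner exposes an OpenAI-compatible endpoint and that host-side TCP access is enabled at the base URL shown. Both the URL and the helper names are assumptions you may need to adjust for your setup.

```python
import json
import urllib.request

# Assumed base URL; it is not stated in this post and may differ on your machine.
BASE_URL = "http://localhost:12434/engines/v1"


def parse_model_ids(payload: str) -> list[str]:
    """Extract sorted model ids from an OpenAI-style model list response."""
    data = json.loads(payload).get("data", [])
    return sorted(item["id"] for item in data if "id" in item)


def list_models(base_url: str = BASE_URL, timeout: float = 5.0) -> list[str]:
    """Fetch the model list from the local API and return the model ids."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```

Keeping the parsing separate from the network call makes the logic easy to check against a saved response, even when no model server is running.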

When you install the extension, Docker spins up the Open WebUI container on demand. It’s not a static setup: the container is configured dynamically based on your environment. You can:

- Switch to a different Open WebUI image (e.g., CUDA-enabled or a specific version)

- Change the default port

- Use custom environments and advanced flags (coming soon)

The extension handles all of this behind the scenes, but gives you full control when needed.
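To make the idea of dynamic provisioning more concrete, here is a minimal sketch of turning a small configuration into a docker run command. It is not the extension's real provisioner. The image tags, the container's internal port 8080, and the container name are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WebUIConfig:
    # Assumed defaults; only the host port 8090 comes from this post.
    image: str = "ghcr.io/open-webui/open-webui:main"
    host_port: int = 8090
    container_port: int = 8080
    name: str = "open-webui"


def docker_run_args(cfg: WebUIConfig) -> list[str]:
    """Build the argument list for a detached `docker run` of the UI."""
    if not 1 <= cfg.host_port <= 65535:
        raise ValueError(f"invalid host port: {cfg.host_port}")
    return [
        "docker", "run", "-d",
        "--name", cfg.name,
        "-p", f"{cfg.host_port}:{cfg.container_port}",
        cfg.image,
    ]
```

Switching to a CUDA-enabled image or another port then becomes a matter of passing different values, for example WebUIConfig(image="ghcr.io/open-webui/open-webui:cuda", host_port=9000).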

Conclusion

You’ve just seen how the Docker Open WebUI Extension turns Docker Model Runner from a simple model launcher into a fully featured local AI assistant with memory, file uploads, multi-model support, and more.

What used to require custom configs, manual ports, or third-party scripts now works out of the box, with just a few clicks.

Next steps

- Install the Open WebUI Extension from the Docker Desktop Marketplace

- Download a model via Docker Model Runner (e.g., GPT-OSS, Gemma, LLaMA 3, Mistral)

- Launch the interface at http://localhost:8090 and start chatting locally

- Explore advanced features: file chat, voice input, system prompts, knowledge, plugins

- Switch models anytime or try new ones without changing your setup

The future of local AI is modular, private, and easy to use.

This extension brings us one step closer to that vision, and it’s just getting started.

Get involved

- Star or contribute to Open WebUI Docker Extension on GitHub

- Follow updates and releases in the Docker Extensions Marketplace

- Contribute to the Docker Model Runner repo: it’s open source and community-driven

- Share feedback or use cases with the Docker and Open WebUI communities