This is a guest post written by Marton Elek, Principal Software Engineer at Storj.

In part one of this two-part series, we discussed the intersection of Web3 and Docker at a conceptual level. In this post, it’s time to get our hands dirty and review practical examples involving decentralized storage.

We’d like to see how we can integrate Web3 projects with Docker. To begin, we have to choose between two options:

- We can use Docker to containerize any Web3 application. We can also start an IPFS daemon or an Ethereum node inside a container. Docker resembles an infrastructure layer since we can run almost anything within containers.

- What’s most interesting is integrating Docker itself with Web3 projects. That includes using Web3 to help us when we start containers or run something inside containers. In this post, we’ll focus on this portion.

The two most obvious integration points for a container engine are execution and storage. We choose storage here since more mature decentralized storage options are currently available. There are a few interesting approaches for decentralized versions of cloud container runtimes (like ankr), but they’re more likely replacements for container orchestrators like Kubernetes — not the container engine itself.

Let’s use Docker with decentralized storage. Our example uses Storj, but all of our examples apply to almost any decentralized cloud storage solution.

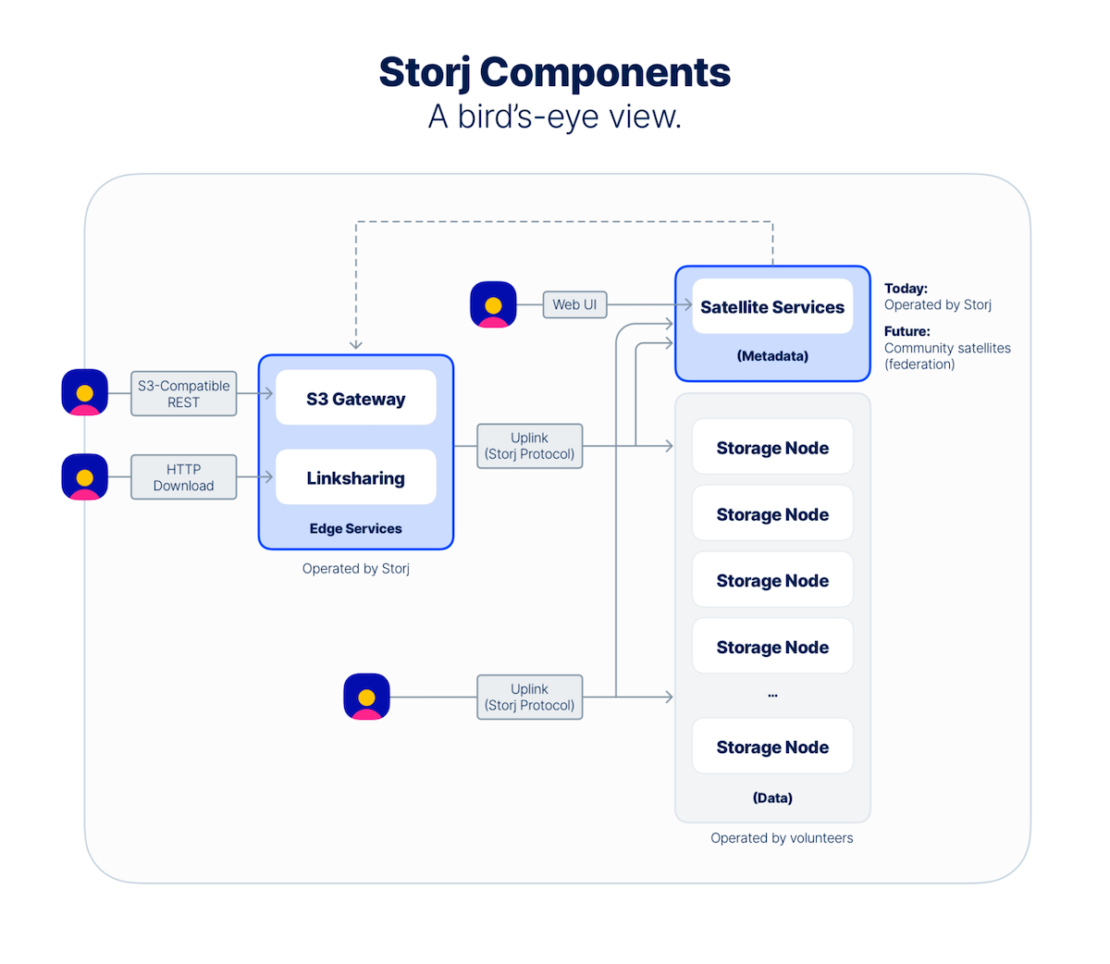

Storj is a decentralized cloud storage network in which node operators are compensated for hosting data. Its metadata servers, which track the location of the encrypted pieces, are federated: many interoperable central servers can work together with the storage providers.

It’s important to mention that decentralized storage almost always requires you to use a custom protocol. A traditional HTTP upload is a connection between one client and one server. Decentralization requires uploading data to multiple servers.
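To make the contrast with a single HTTP upload concrete, here is a minimal Python sketch of the client-side fan-out. It is a deliberately simplified illustration: the node names and the `plan_upload` helper are hypothetical, and real networks such as Storj encrypt and erasure-code the data rather than simply cutting it into chunks.

```python
from typing import Dict, List


def split_into_pieces(data: bytes, piece_size: int) -> List[bytes]:
    """Cut a blob into fixed-size pieces (the last one may be shorter)."""
    if piece_size <= 0:
        raise ValueError("piece_size must be positive")
    return [data[i:i + piece_size] for i in range(0, len(data), piece_size)]


def plan_upload(data: bytes, nodes: List[str], piece_size: int) -> Dict[str, List[int]]:
    """Assign piece indexes to storage nodes in round-robin order.

    Hypothetical helper: it only plans which node receives which piece,
    it does not perform any network transfer.
    """
    if not nodes:
        raise ValueError("at least one node is required")
    plan: Dict[str, List[int]] = {node: [] for node in nodes}
    for index, _piece in enumerate(split_into_pieces(data, piece_size)):
        plan[nodes[index % len(nodes)]].append(index)
    return plan
```

A traditional upload would send all pieces to one server. Here, every entry in the returned plan is a separate connection the client has to make, which is why a plain registry HTTP client can’t talk to this kind of storage directly.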

Our goal is simple: we’d like to use docker push and docker pull commands with decentralized storage instead of a central Docker registry. In our latest DockerCon presentation, we identified multiple approaches:

- We can change Docker and containerd to natively support different storage options

- We can provide tools that magically download images from decentralized storage and persist them in the container engine’s storage location (in the right format, of course)

- We can run a service which translates familiar Docker registry HTTP requests to a protocol specific to the decentralized cloud:

  - Users can manage this service themselves.

  - This can also be a managed service.

Leveraging native support

I believe the ideal solution would be to extend Docker (and/or the underlying containerd runtime) to support different storage options. But this is definitely a bigger challenge. Technically, it’s possible to modify every service, but massive adoption and a big user base mean that large changes require careful planning.

Currently, it’s not readily possible to extend the Docker daemon to use special push or pull targets. Check out our presentation on extending Docker if you’re interested in technical deep dives and integration challenges. The best solution might be a new container plugin type, which is being considered.

One benefit of this approach would be good usability. Users could keep using the common push and pull commands, and the container layers would be sent to decentralized storage based on the host in the image name.
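As a rough sketch of what routing by host could look like, the Python snippet below extracts the registry host from an image reference using the familiar Docker convention (the first path component counts as a host only if it contains a dot, a colon, or is `localhost`). The `DECENTRALIZED_HOSTS` set, the `registry.storage.example` host, and the `storage_backend` function are hypothetical; no such native hook exists in Docker today.

```python
DEFAULT_REGISTRY = "docker.io"

# Hypothetical: hosts that a natively extended engine would route
# to decentralized storage instead of a regular registry.
DECENTRALIZED_HOSTS = {"registry.storage.example"}


def registry_host(image_ref: str) -> str:
    """Return the registry host part of an image reference."""
    first, sep, _rest = image_ref.partition("/")
    # Without a slash, or with a plain first component such as "library",
    # the reference points at the default registry.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return DEFAULT_REGISTRY


def storage_backend(image_ref: str) -> str:
    """Pick a backend label for the reference (illustrative only)."""
    if registry_host(image_ref) in DECENTRALIZED_HOSTS:
        return "decentralized"
    return "registry"
```

With this rule, `docker pull registry.storage.example/team/app:v1` would be the only thing a user has to type differently; everything else stays the same.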

Using tool-based push and pull

Another option is to upload or download images with an external tool — which can directly use remote decentralized storage and save it to the container engine’s storage directory.

One example of this approach (but with centralized storage) is the AWS ECR container resolver project. It provides a CLI tool which can pull and push images using a custom source. It also saves them as container images of the containerd daemon.

Unfortunately, this approach also has some strong limitations:

- It can’t work with a container orchestrator like Kubernetes, since orchestrators aren’t prepared to run custom CLI commands outside of pulling or pushing images.

- It’s containerd-specific. The Docker daemon, which uses different storage, can’t use it directly.

- The usability is reduced since users need different CLI tools.

Using a user-managed gateway

If we can’t push or pull directly to decentralized storage, we can create a service which resembles a Docker registry and meshes with any client. But under the hood, it uploads the data using the decentralized storage’s native protocol.

This thankfully works well, and the standard Docker registry implementation is already compatible with different storage options.
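The core of such a gateway is a translation from registry HTTP paths to objects in the storage backend. The sketch below handles only the two pull-style routes of the Docker Registry HTTP API V2 (`/v2/<name>/manifests/<reference>` and `/v2/<name>/blobs/<digest>`). The object key layout is an assumption made up for this example, not the layout used by the standard registry or by our internal implementation.

```python
import re
from typing import Optional

# Matches /v2/<name>/manifests/<reference> and /v2/<name>/blobs/<digest>.
# Repository names may contain slashes, so <name> is matched greedily.
_ROUTE = re.compile(r"^/v2/(?P<name>.+)/(?P<kind>manifests|blobs)/(?P<ref>[^/]+)$")


def object_key(path: str) -> Optional[str]:
    """Map a registry request path to a storage object key.

    Returns None for paths this sketch does not handle (for example
    the /v2/ version check or blob upload sessions).
    """
    match = _ROUTE.match(path)
    if match is None:
        return None
    name, kind, ref = match.group("name", "kind", "ref")
    if kind == "blobs":
        # Blobs are content-addressed, so one copy can serve every repository.
        return "blobs/" + ref.replace(":", "/")
    # Manifests are looked up per repository, by tag or digest.
    return f"manifests/{name}/{ref}"
```

A real gateway would wrap this in an HTTP server and stream the object with the storage network’s native client library, but the mapping itself stays this small.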

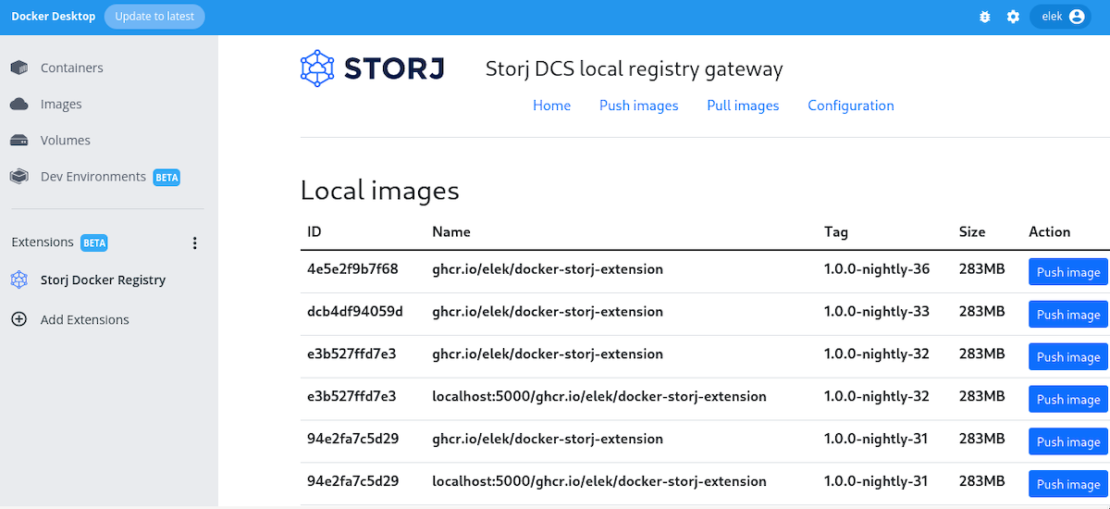

At Storj, we already have an implementation that we use internally for test images. The nerdctl ipfs subcommand is another good example of this approach (it starts a local registry to access containers from IPFS).

We have problems here as well:

- Users have to run the gateway on each host. This can be painful alongside Kubernetes or other orchestrators.

- Implementation can be more complex and challenging compared to a native upload or download.

Using a hosted gateway

To make it slightly easier, one can provide a hosted version of the gateway. For example, Storj is fully S3 compatible via a hosted (or self-hosted) S3-compatible HTTP gateway. With this approach, users have three options:

- Use the native protocol of the decentralized storage with full end-to-end encryption and every feature

- Use the convenient gateway services and trust the operator of the hosted gateways

- Run the gateway on their own

While each option is acceptable, a perfect solution still doesn’t exist.
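For the two gateway options, one way to wire things together is to run the standard open source registry with its `s3` storage driver pointed at the S3-compatible endpoint. The Python helper below only assembles the environment variables for such a container. Treat it as a sketch: the variable names follow the registry’s `REGISTRY_` override convention and its `s3` driver parameters as we understand them, so double-check them against the version you run. The function name, bucket, endpoint, and region default are placeholders.

```python
from typing import Dict


def registry_s3_env(bucket: str, endpoint: str, access_key: str,
                    secret_key: str, region: str = "us-east-1") -> Dict[str, str]:
    """Build environment variables that point a registry container
    at an S3-compatible gateway.

    The default region is a placeholder; adjust it if your gateway
    expects a specific value.
    """
    if not endpoint.startswith(("http://", "https://")):
        raise ValueError("endpoint must include the URL scheme")
    return {
        "REGISTRY_STORAGE": "s3",
        "REGISTRY_STORAGE_S3_BUCKET": bucket,
        "REGISTRY_STORAGE_S3_REGION": region,
        "REGISTRY_STORAGE_S3_REGIONENDPOINT": endpoint,
        "REGISTRY_STORAGE_S3_ACCESSKEY": access_key,
        "REGISTRY_STORAGE_S3_SECRETKEY": secret_key,
    }
```

Whether the endpoint is a hosted gateway or one you run yourself, the registry configuration looks the same. Only the trust model changes.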

Using Docker Extensions

One of the biggest concerns with using local gateways was usability. Our local registry can help push images to decentralized storage, but it requires additional technical work (configuring and running containers, etc.).

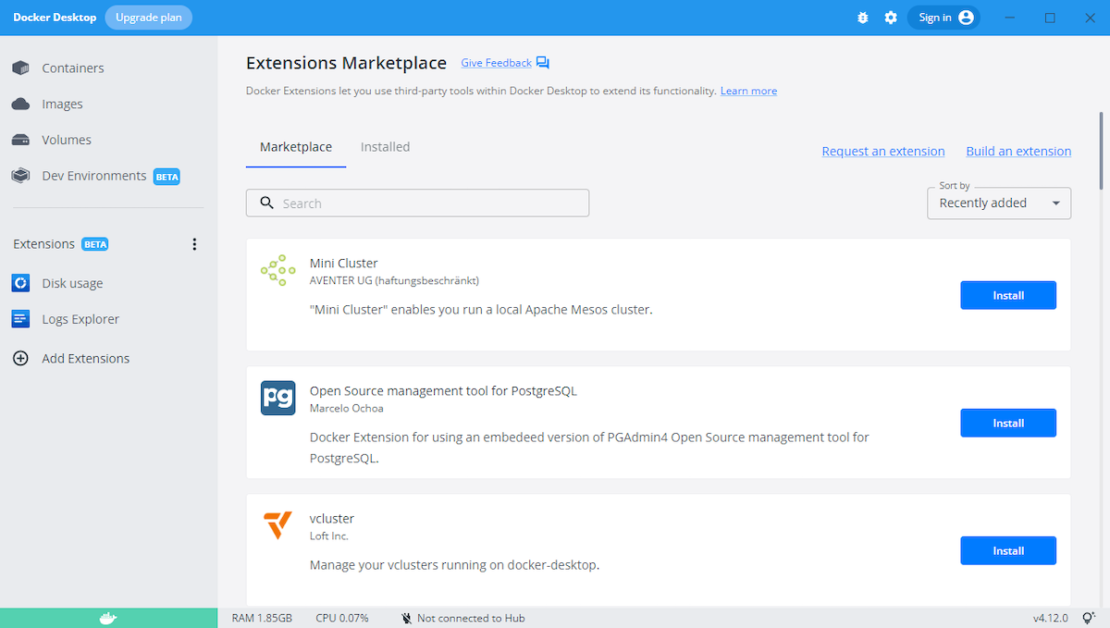

This is where Docker Extensions can help us. Extensions are a new feature of Docker Desktop. You can install them via the Docker Dashboard, and they can provide additional functionality, including new screens, menu items, and options within Docker Desktop. These are discoverable within the Extensions Marketplace.

And this is exactly what we need! A good UI can make Web3 integration more accessible for all users.

Docker Extensions are easily discoverable within the Marketplace, and you can also add them manually (usually during development).

At Storj, we started experimenting with better user experiences by developing an extension for Docker Desktop. It’s still under development and not currently in the Marketplace, but feedback so far has convinced us that it can massively improve usability, which was our biggest concern with almost every available integration option.

Extensions themselves are Docker containers, which makes the development experience very smooth and easy. Extensions can be as simple as a metadata file in a container and static HTML/JS files. There are special JavaScript APIs that manipulate the Docker daemon state without a backend.

You can also use a specialized backend. The JavaScript part of the extension can communicate with any containerized backend via a mounted socket.

The new docker extension command can help you quickly manage extensions. For example, there’s a special docker extension dev debug subcommand that shows the Web Developer Toolbar for Docker Desktop itself.

Thanks to the provided developer tools, the challenge is not creating the Docker Desktop extension, but balancing the UI and UX.

Summary

As we discussed in our previous post, Web3 should be defined by user requirements, not by technologies (like blockchain or NFT). Web3 projects should address user concerns around privacy, data control, security, and so on. They should also be approachable and easy to use.

Usability is a core principle of containers, and one reason why Docker became so popular. We need more integration and extension points to make it easier for Web3 projects to give users what they need. Docker Extensions also provide a very powerful way to pair good integration with excellent usability.

We welcome you to try our Storj Extension for Docker (still under development). Please leave any comments and feedback via GitHub.