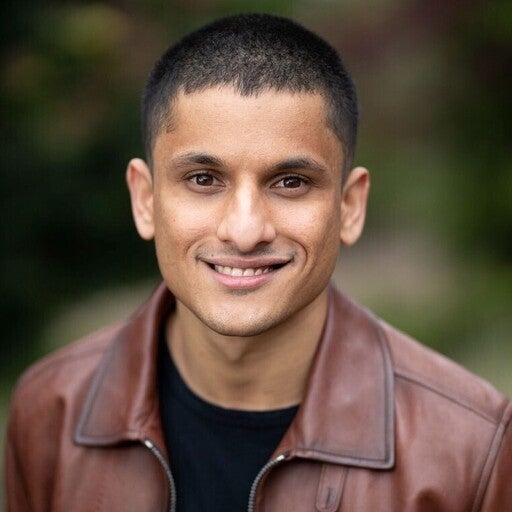

As large language models (LLMs) evolve from static text generators into dynamic agents capable of executing actions, there’s a growing need for a standardized, secure way to let them interact with external tools. That’s where the Model Context Protocol (MCP) comes in: a protocol designed to turn your existing APIs into AI-accessible tools.

My name is Saloni Narang, a Docker Captain. Today, I’ll walk you through what the Model Context Protocol (MCP) is and why, despite its growing popularity, the developer experience still lags behind when it comes to discovering and using MCP servers. Then I will explore Docker Desktop’s latest MCP Catalog and Toolkit and demonstrate how you can find the right AI developer tools for your project easily and securely.

What is MCP?

Think of MCP as the missing middleware between LLMs and the real-world functionality you’ve already built. Instead of relying on prompt hacks or building custom plugins for each model, MCP lets you define your capabilities as structured tools that any compliant AI client can discover, invoke, and interact with safely and predictably. While the protocol is still maturing and the documentation can be opaque, the underlying value is clear: MCP turns your backend into a toolbox for AI agents. Whether you’re integrating scraping APIs, financial services, or internal business logic, MCP offers a portable, reusable, and scalable pattern for AI integrations.

Overview of the Model Context Protocol (MCP)
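To make “structured tools that any compliant AI client can discover and invoke” concrete: MCP messages are JSON-RPC 2.0, and a client typically lists a server’s tools with `tools/list` and runs one with `tools/call`. The sketch below builds those two requests with the Python standard library. The `get_user` tool, its schema, and the `missing_arguments` helper are hypothetical, and a real client would first complete the protocol’s `initialize` handshake and then read the server’s responses.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC ids only need to be unique within a session


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object of the kind MCP clients send."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# How a server might describe one tool in its tools/list response.
# The name, description, and schema are hypothetical.
get_user_tool = {
    "name": "get_user",
    "description": "Fetch a user record by id",
    "inputSchema": {  # JSON Schema describing the tool's arguments
        "type": "object",
        "properties": {"user_id": {"type": "string"}},
        "required": ["user_id"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list:
    """Names of required arguments absent from a proposed call (hypothetical helper)."""
    return [name for name in tool["inputSchema"].get("required", []) if name not in arguments]


list_request = jsonrpc_request("tools/list")
call_request = jsonrpc_request(
    "tools/call", {"name": "get_user", "arguments": {"user_id": "2001"}}
)

if __name__ == "__main__":
    # On the stdio transport, each message is sent as a single line of JSON.
    print(json.dumps(list_request))
    print(json.dumps(call_request))
```

Because the schema travels with the tool, a client can check a call such as `missing_arguments(get_user_tool, {})` before sending it, which is part of what makes tool use predictable across clients.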

The Pain Points of Equipping Your AI Agent with the Right Tools

You might be asking, “Why should I care about finding MCP servers? Can’t my agent just call any API?” This is where the core challenges for AI developers and agent builders lie. While MCP offers incredible promise, the current landscape for using AI agents with external capabilities is riddled with obstacles.

Integration Complexity and Agent Dev Overhead

Each MCP server often comes with its own configuration, environment variables, and dependencies. You’re typically left sifting through individual GitHub repositories, deciphering custom setup instructions, and battling conflicting requirements. This fiddly, time-consuming, error-prone process makes quick experimentation and rapid iteration on agent capabilities difficult, significantly slowing down your AI development cycle.

A Fragmented Landscape of AI-Ready Tools

The internet is a vast place, and while you can find MCP servers, they’re scattered across various registries and personal repositories. There’s no central, trusted source, so discovering AI-compatible tools is a hunt rather than a streamlined process, which slows down your ability to find and integrate the right functionality.

Trust and Security for Autonomous Agents

When your AI agent needs to access external services, how do you ensure the tools it interacts with are trustworthy and secure? Running an unknown MCP server on your machine presents significant security risks, especially when dealing with sensitive data or production environments. Are you confident in its provenance and that it won’t introduce vulnerabilities into your AI pipeline? This is a major hurdle, especially in enterprise settings where security and AI governance are paramount.

Inconsistent Agent-Tool Interface

Even once you’ve managed to set up an MCP server, connecting it to your AI agent or IDE can be another manual nightmare. Different AI clients or frameworks might have different integration methods, requiring specific JSON blocks, API keys, or version compatibility. This lack of a unified interface complicates the development of robust and portable AI agents.
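As an illustration of how those JSON blocks differ: Claude Desktop reads servers from an `mcpServers` object in `claude_desktop_config.json`, while VS Code’s `mcp.json` uses a `servers` object whose entries also name a transport `type`. This sketch renders one hypothetical server definition into both shapes. The field names follow those clients’ documentation at the time of writing and may change; the image name `example/redis-mcp` and the `REDIS_HOST` variable are placeholders.

```python
import json

# One hypothetical server definition; the image name and variable are placeholders.
server = {
    "name": "redis-tools",
    "command": "docker",
    "args": ["run", "-i", "--rm", "example/redis-mcp"],
    "env": {"REDIS_HOST": "host.docker.internal"},
}


def claude_desktop_block(s: dict) -> dict:
    """Claude Desktop style: servers live under a top-level "mcpServers" key."""
    entry = {"command": s["command"], "args": s["args"], "env": s["env"]}
    return {"mcpServers": {s["name"]: entry}}


def vscode_block(s: dict) -> dict:
    """VS Code mcp.json style: servers live under "servers" and name a transport type."""
    entry = {"type": "stdio", "command": s["command"], "args": s["args"], "env": s["env"]}
    return {"servers": {s["name"]: entry}}


if __name__ == "__main__":
    print(json.dumps(claude_desktop_block(server), indent=2))
    print(json.dumps(vscode_block(server), indent=2))
```

Same server, two different wrappers: multiply that by every client and every server, and hand-maintained configuration quickly drifts.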

These challenges slow down AI development, introduce potential security risks for agentic systems, and ultimately prevent developers from fully leveraging the power of MCP to build truly intelligent and actionable AI.

Why is Docker a game-changer for AI, and specifically for MCP tools?

Docker has already proven itself as the de facto standard for building and distributing containerized applications, and its user experience is the key reason I, along with millions of other developers, use it today. Over the years, Docker has evolved with developers’ needs, and it has now entered the AI space too. With so many MCP servers scattered across separate GitHub repositories, each with its own configuration and installation method, Docker is once again changing how we think about and run these servers and connect them to MCP clients like Claude.

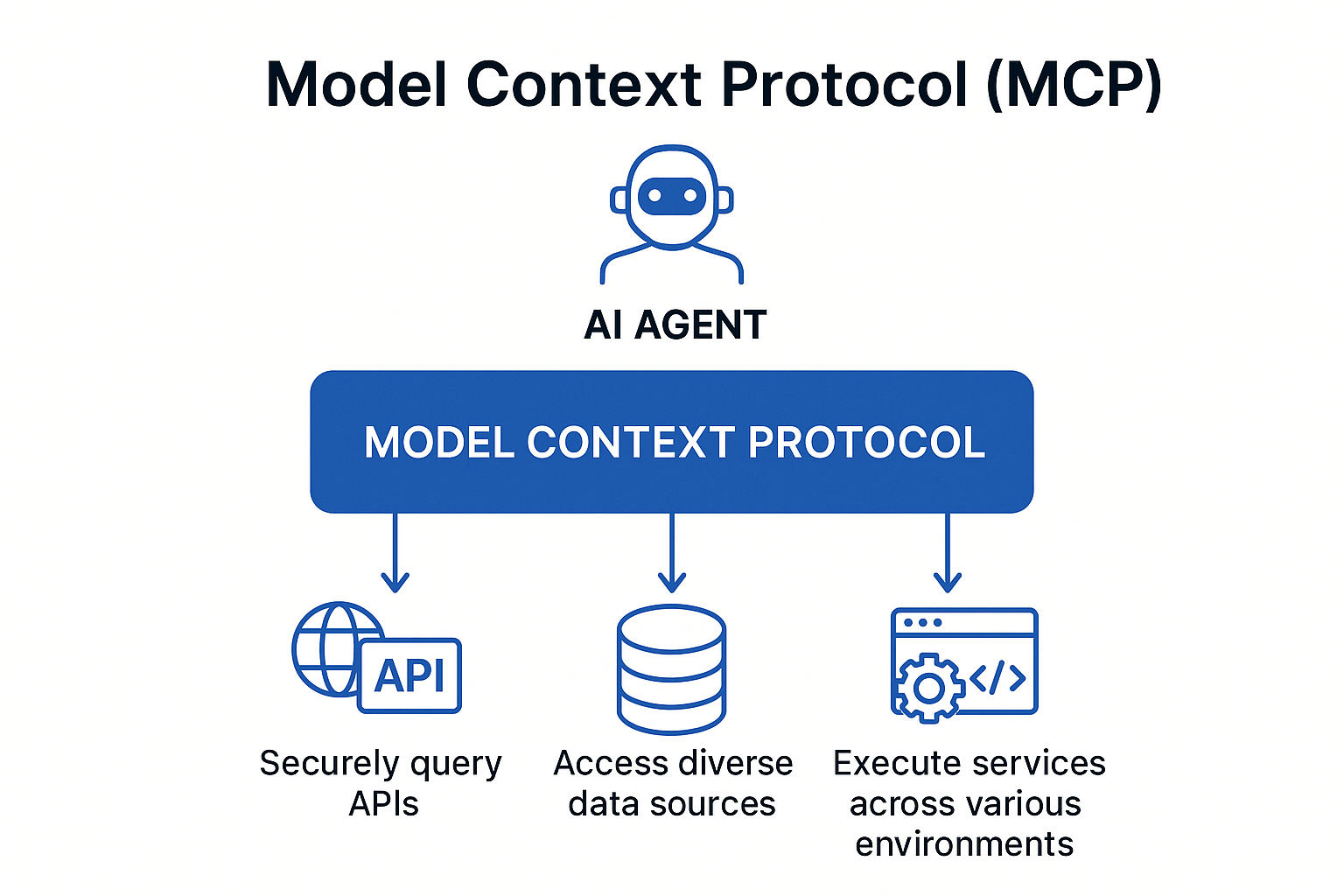

Docker has introduced the Docker MCP Catalog and Toolkit (currently in Beta). This is a comprehensive solution designed to streamline the developer experience for building and using MCP-compatible tools.

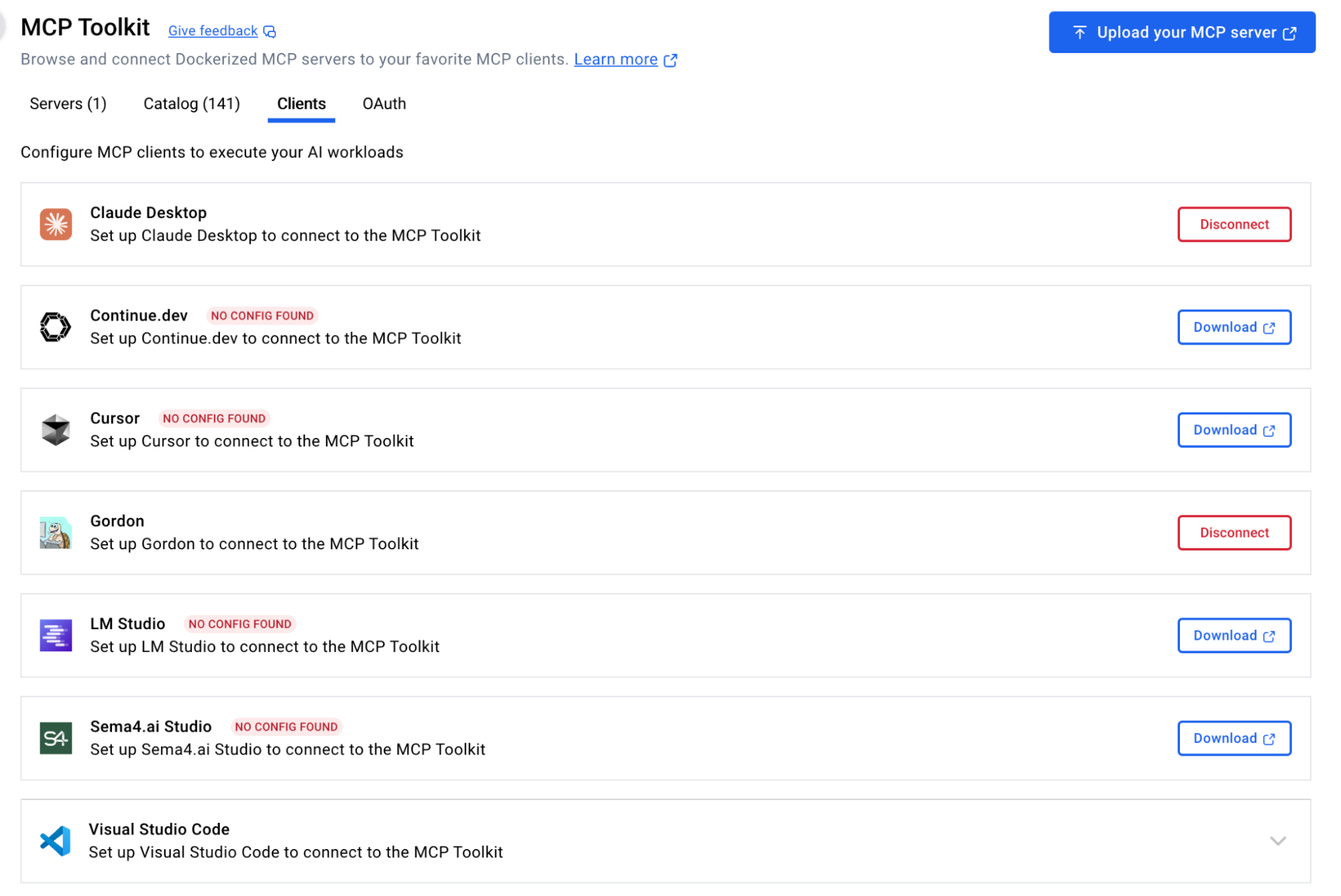

MCP Toolkit Interface in Docker Desktop

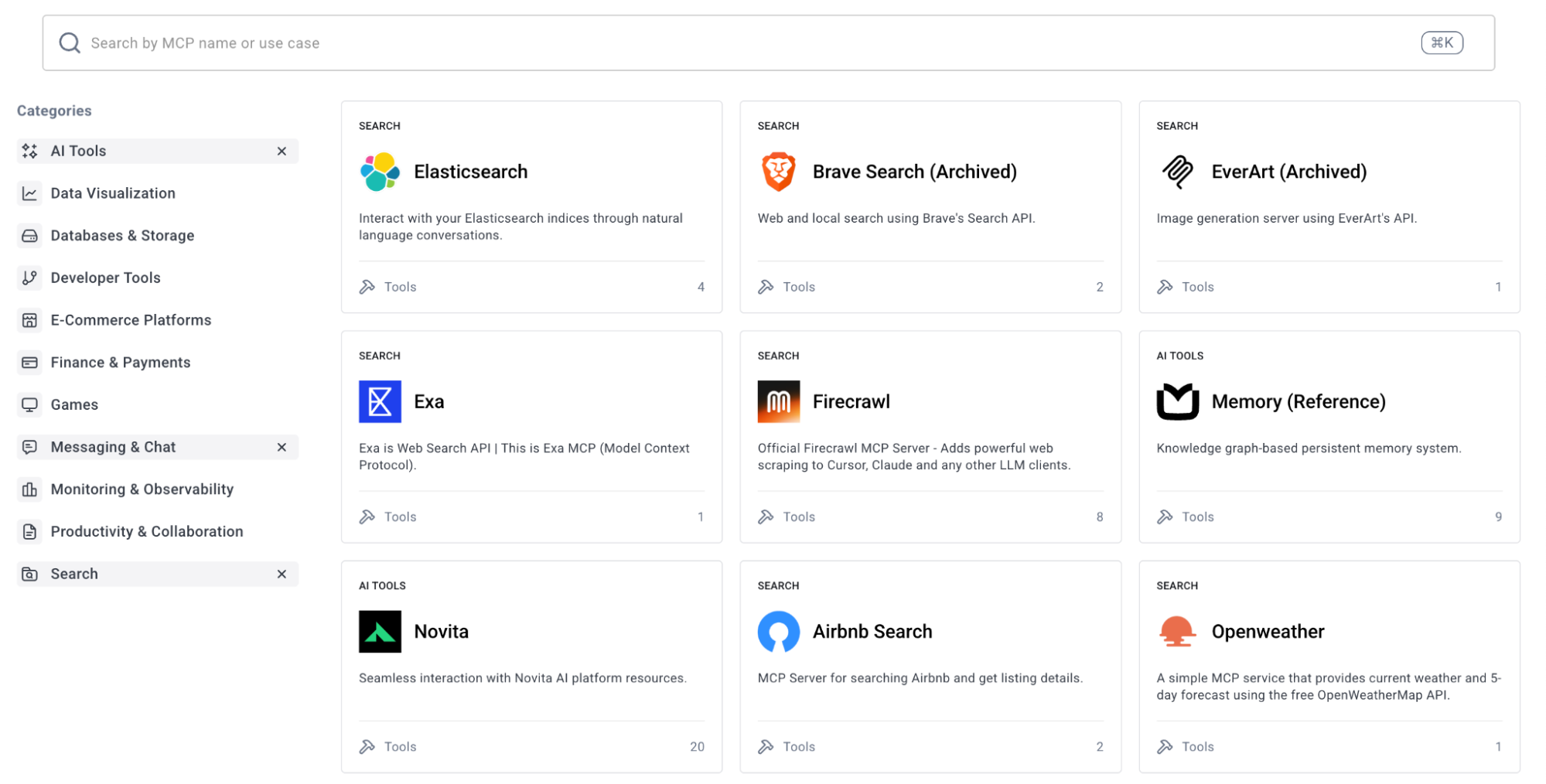

What is the Docker MCP Catalog?

The Docker MCP Catalog is a centralized, trusted registry offering a curated collection of MCP-compatible tools packaged as Docker images. Integrated with Docker Hub and available directly through Docker Desktop, it simplifies the discovery, sharing, and execution of more than 100 verified MCP servers from partners such as Stripe and Grafana. Because each tool runs in an isolated container, the catalog addresses common issues such as environment conflicts, inconsistent platform behavior, and complex setups, ensuring portability, security, and consistency across systems. Developers can pull and run these tools instantly with the Docker CLI or Docker Desktop, with built-in support for agent integration via the MCP Toolkit.

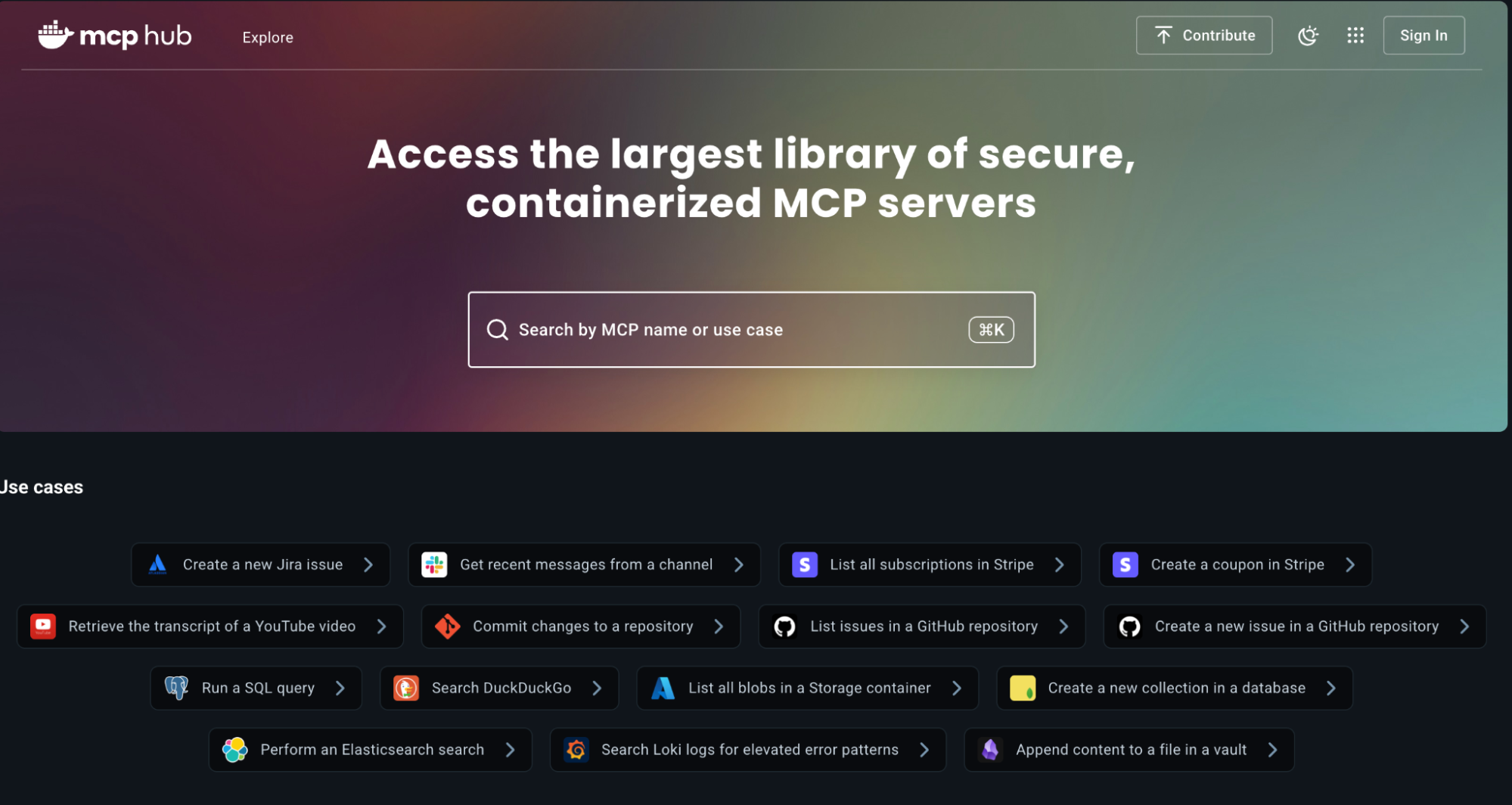

MCP Catalog on Docker Hub hosts the largest collection of containerized MCP servers

With Docker, you now have access to the largest library of secure, containerized MCP servers, all easily discoverable and runnable directly from Docker Desktop, Docker Hub, or the standalone MCP Catalog. Whether you want to create a Jira issue, fetch GitHub issues, run SQL queries, search logs in Loki, or pull transcripts from YouTube videos, there’s likely an MCP server for that.

MCP servers are available online, but they are scattered, and each one has its own installation process and manual steps to configure it with your client. This is where the MCP Catalog comes in. When browsing the Docker MCP Catalog, you’ll notice that MCP servers fall into two categories: Docker-built and community-built. This distinction helps developers understand the level of trust, verification, and security applied to each server.

Docker-Built Servers

These are MCP servers that Docker has packaged and verified through a secure build pipeline. You can think of them as certified and hardened; they come with verified metadata, supply chain transparency, and automated vulnerability scanning. These servers are ideal when security and provenance are critical, like in enterprise environments.

Community-Built Servers

These servers are built and maintained by individual developers or organizations. While Docker doesn’t oversee the build process, they still run inside isolated containers, offering users a safer experience compared to running raw scripts or binaries. They give developers a diverse set of tools to innovate and build, enabling rapid experimentation and expansion of the available tool catalog.

How to Find the Right AI Developer Tool with MCP Catalog


Enhanced Search and Browse by AI Use Case

The enhanced catalog now lets you browse by specific AI use cases, like Data Integration for LLMs, Development Tools for Agents, Communication Automation, AI Productivity Enhancers, or Analytics for AI Insights, and features powerful search filters based on capabilities, GitHub tags, and tool categories. You can launch these tools in seconds, securely running them in isolated containers to empower your AI agents.

The Docker MCP Catalog is built with AI developers in mind, making it easy to discover tools based on what you want your AI agent to do. Whether your goal is to automate workflows, connect to dev tools, retrieve data, or integrate AI into your app, the catalog organizes MCP servers by real-world use cases such as:

- AI Tools (e.g., summarization, chat, transcription for agentic workflows)

- Data Integration (e.g., Redis, MongoDB for feeding data to agents)

- Productivity & Developer Tools (e.g., Pulumi, Jira for agent-driven task management)

- Monitoring & Observability (e.g., Grafana for AI-powered system insights)

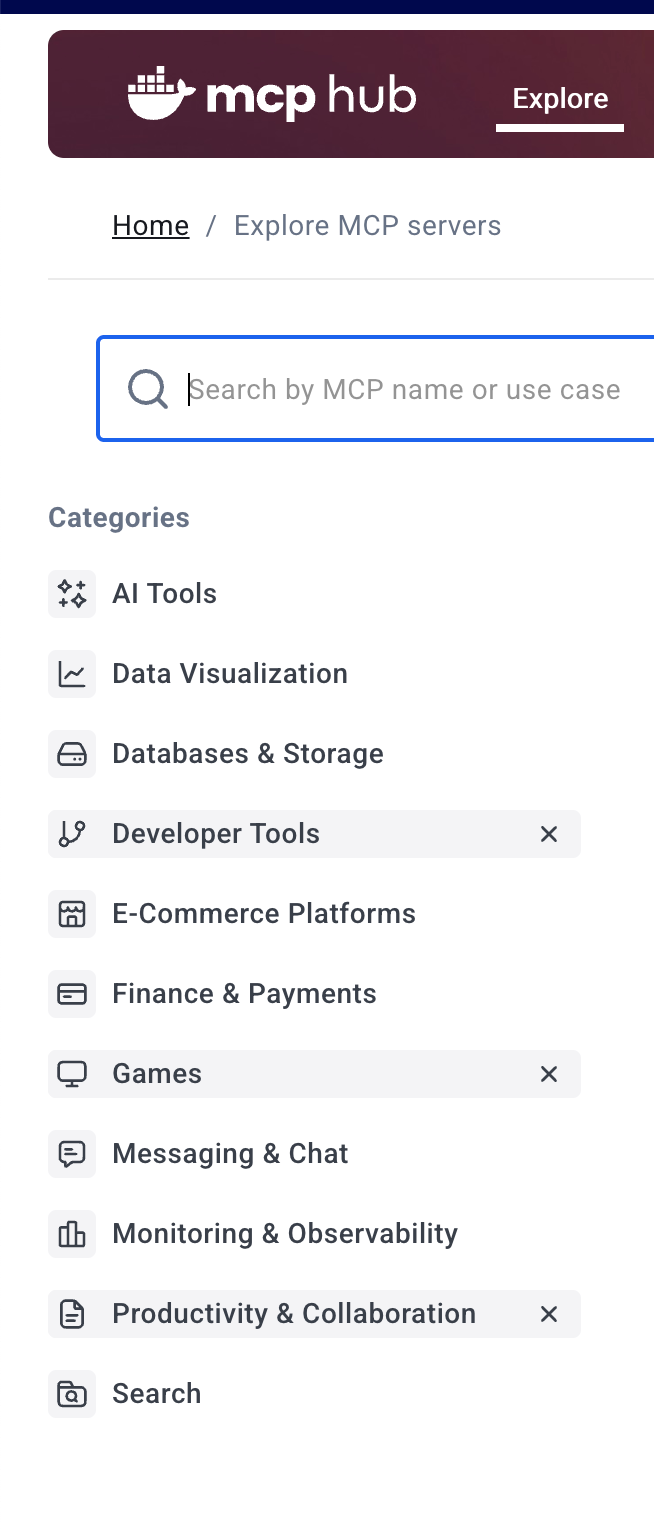

Browsing MCP Tools by AI Use Case

Search & Category Filters

The Catalog also includes powerful filtering capabilities to narrow down your choices:

- Filter by tool category, like “Data visualization” or “Developer tools”

- Search by keywords, GitHub tags, or specific capabilities

- View tools by their trust level (Docker-built vs. community-built)

These filters are particularly useful when you’re looking for a specific type of tool (like something for logs or tickets), but don’t want to scroll through a long list.

Browsing MCP Tools by AI Use Case (Expanded)
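Conceptually, these filters behave like a conjunction of conditions over each server’s metadata. The toy model below illustrates that idea only; the `CatalogEntry` fields and the entries themselves are hypothetical and are not the catalog’s real data format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CatalogEntry:
    # Hypothetical metadata fields, for illustration only.
    name: str
    category: str
    docker_built: bool
    tags: List[str] = field(default_factory=list)


def filter_catalog(entries, category: Optional[str] = None,
                   keyword: Optional[str] = None,
                   docker_built: Optional[bool] = None) -> List[CatalogEntry]:
    """Keep entries that match every filter that is set; None means 'any'."""
    matches = []
    for entry in entries:
        if category is not None and entry.category != category:
            continue
        if keyword is not None:
            # Case-insensitive substring match against the name and tags.
            searchable = [entry.name.lower()] + [tag.lower() for tag in entry.tags]
            if not any(keyword.lower() in text for text in searchable):
                continue
        if docker_built is not None and entry.docker_built != docker_built:
            continue
        matches.append(entry)
    return matches


catalog = [
    CatalogEntry("example-dashboards", "Monitoring & Observability", True, ["dashboards", "logs"]),
    CatalogEntry("example-kv-store", "Data Integration", True, ["database", "cache"]),
    CatalogEntry("community-log-search", "Monitoring & Observability", False, ["logs"]),
]
```

For example, `filter_catalog(catalog, keyword="logs", docker_built=True)` keeps only `example-dashboards`: both log tools match the keyword, but the trust-level filter drops the community-built one.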

One-Click Setup Within Docker Desktop

Once you’ve found a suitable MCP server, setting it up is incredibly simple. Docker Desktop’s MCP Toolkit allows you to:

- View details about each MCP server (what it does, how it connects)

- Add your credentials or tokens, if required (e.g., GitHub PAT)

- Click “Connect”, and Docker will pull, configure, and run the MCP server in an isolated container

No manual config files, no YAML, no shell commands, just a unified, GUI-based experience that works across macOS, Windows, and Linux. It’s the fastest and easiest way to test or integrate new tools with your AI agent workflows.
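Under the GUI, “pull, configure, and run” roughly corresponds to starting the server image as a throwaway container with stdin attached, because stdio-based MCP servers exchange protocol messages over stdin and stdout. The sketch below only assembles such a command line for illustration: `mcp/example-server` is a placeholder in the `mcp/` namespace, `EXAMPLE_TOKEN` is a hypothetical variable, and the Toolkit’s actual invocation may differ (for example, it can route servers through a gateway).

```python
import shlex
from typing import List


def mcp_container_argv(image: str, env_names: List[str]) -> List[str]:
    """Assemble a `docker run` command for a stdio-based MCP server container."""
    argv = [
        "docker", "run",
        "-i",    # keep stdin open: the client exchanges MCP messages over stdin/stdout
        "--rm",  # delete the container once the session ends
    ]
    for name in sorted(env_names):
        # "-e NAME" without a value makes docker copy NAME from the caller's
        # environment, so secrets such as tokens stay off the command line.
        argv += ["-e", name]
    argv.append(image)
    return argv


if __name__ == "__main__":
    print(shlex.join(mcp_container_argv("mcp/example-server", ["EXAMPLE_TOKEN"])))
```

Running it prints `docker run -i --rm -e EXAMPLE_TOKEN mcp/example-server`. The Toolkit saves you from writing and maintaining lines like this for every server and client.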

Example – Powering Your AI Agent with Redis and Grafana MCP Servers

Let’s imagine you’re building an AI agent in your IDE (like VS Code with Agent Mode enabled) that needs to monitor application performance in real time. Specifically, your agent needs to:

- Retrieve real-time telemetry data from a Redis database (e.g., user activity metrics, API call rates).

- Visualize performance trends from that data using Grafana dashboards, and potentially highlight anomalies.

Traditionally, on top of running Redis and Grafana, you would have to find, install, and configure a separate integration for each one, and then painstakingly work out how your agent can interact with their respective APIs, a process prone to errors and security gaps. This is where the Docker MCP Catalog dramatically simplifies the AI tooling pipeline.

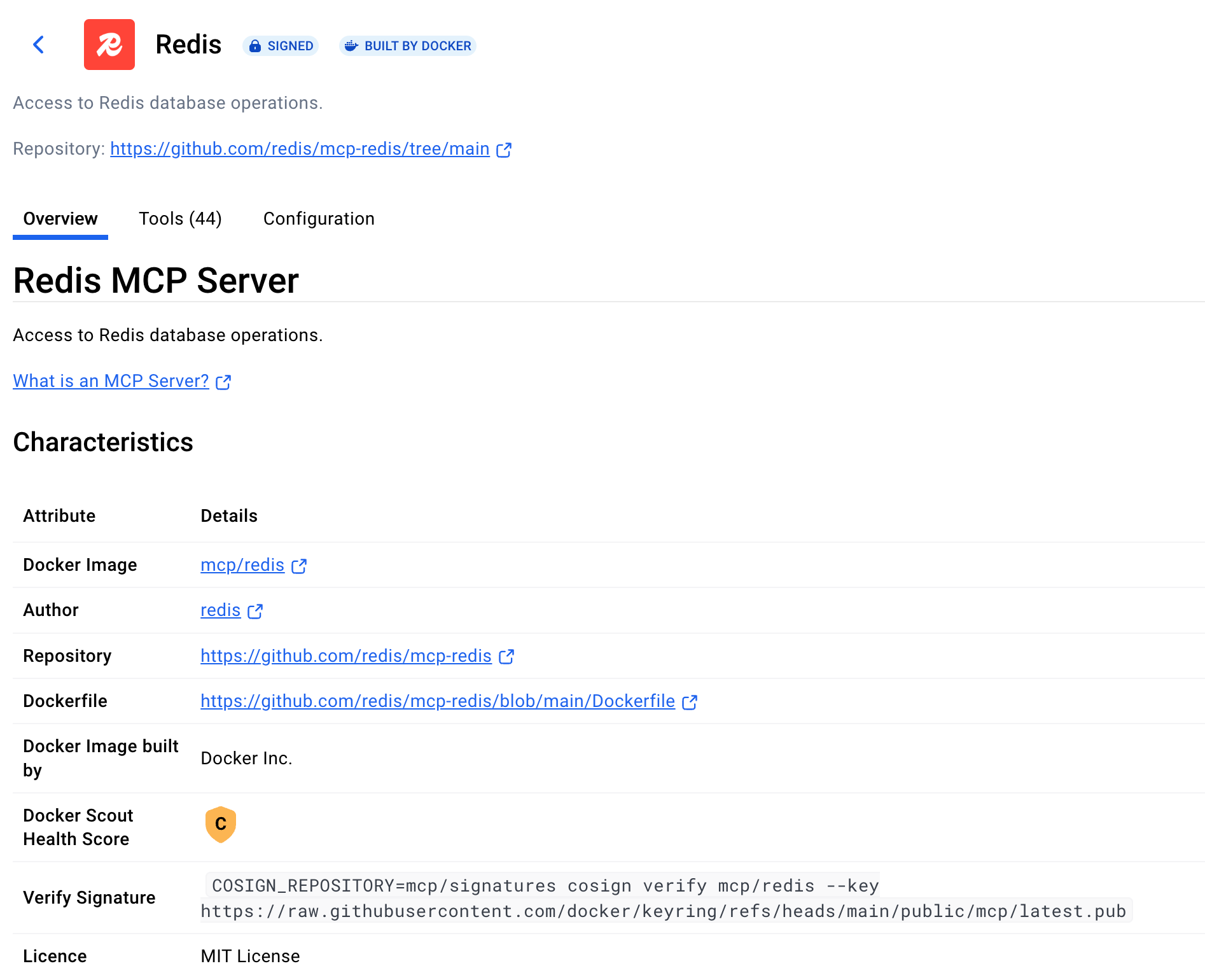

Step 1: Discover and Connect to Redis MCP Server for Agent Data Ingestion

Instead of manual setup, you’ll simply:

- Go to the Docker Desktop MCP Catalog: Search for “Redis.” You’ll find a Redis MCP Server listed, ready for integration with your agent.

Redis MCP Server

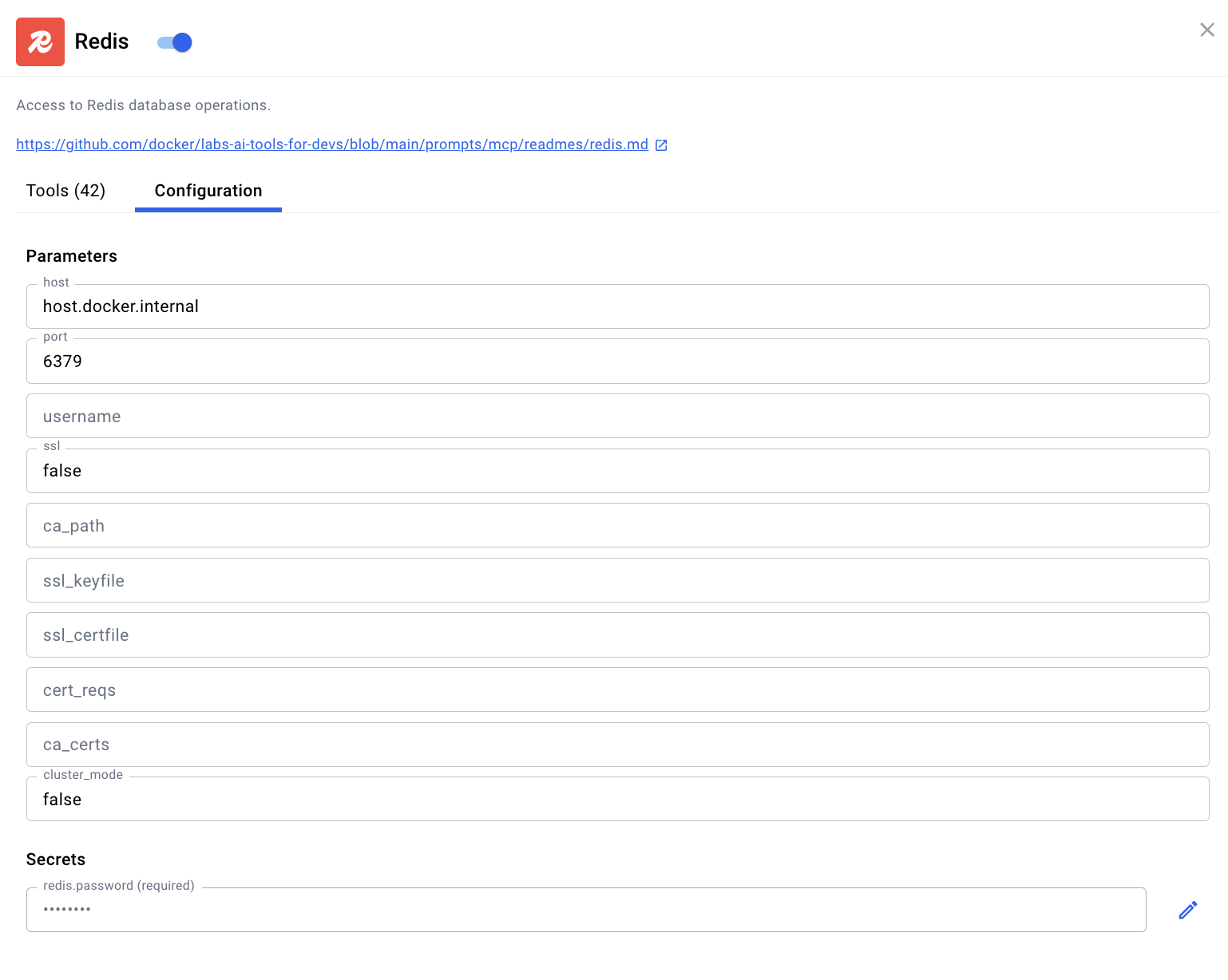

- Add MCP server: Docker Desktop handles pulling the Redis MCP server image, configuring it, and running it in an isolated container. You might need to provide basic connection details for your Redis instance, but it’s all done in a guided UI that keeps your agent’s credentials managed securely. When you select the MCP server, Docker Desktop also lists every tool it will expose to the MCP client.

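For this example, I’m running Redis locally as a Docker container and pointing the Redis MCP server’s configuration at it. Because the MCP server itself runs in a container, localhost inside it refers to that container rather than your machine; on Docker Desktop, the hostname host.docker.internal reaches ports published on the host.

Here is the Docker command to run Redis locally: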

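```shell
# Start Redis 7.2 in the background, published on localhost:6379.
# The password is set by --requirepass; the official redis image does not
# read a REDIS_PASSWORD variable, so no -e flag is needed for it.
docker run -d \
  --name my-redis \
  -p 6379:6379 \
  redis:7.2-alpine \
  redis-server --requirepass secret123
```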

Running Redis MCP Server Locally
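Before pointing the Redis MCP server at this instance, it can help to confirm from your machine that Redis is up and accepts the password. This sketch speaks Redis’s RESP wire protocol over a plain socket, so it needs nothing beyond the Python standard library; it assumes the container above is published on localhost:6379 with the password `secret123`. Running `docker exec my-redis redis-cli -a secret123 ping` is a quicker way to do the same check.

```python
import socket


def encode_command(*parts: str) -> bytes:
    """Encode a Redis command as a RESP array of bulk strings."""
    chunks = [f"*{len(parts)}\r\n".encode()]
    for part in parts:
        data = part.encode()
        chunks.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(chunks)


def auth_and_ping(host: str = "localhost", port: int = 6379,
                  password: str = "secret123", timeout: float = 3.0) -> bool:
    """Return True if Redis accepts the password and answers PING with PONG."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("AUTH", password) + encode_command("PING"))
        reply = b""
        # Expect two one-line replies: "+OK" for AUTH and "+PONG" for PING
        # (errors such as "-WRONGPASS ..." are also single lines).
        while reply.count(b"\r\n") < 2:
            chunk = sock.recv(1024)
            if not chunk:
                break
            reply += chunk
    return reply == b"+OK\r\n+PONG\r\n"
```

With the container running, `auth_and_ping()` should return `True`; a wrong password makes it return `False`, and a stopped container raises `ConnectionRefusedError`.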

Step 2: Discover Grafana MCP Server for Agent-Driven Visualization

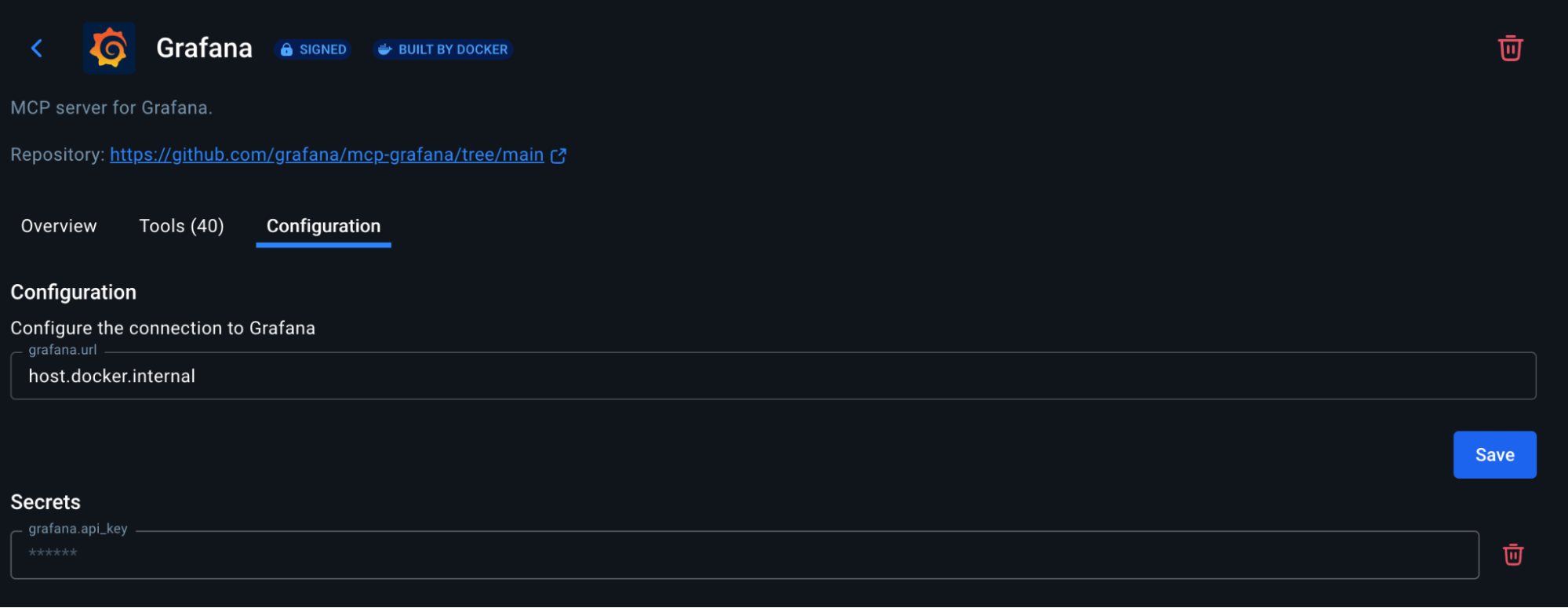

Next, for visualization and anomaly detection, I’m again running Grafana locally as a Docker container, then generating an API key from the Grafana dashboard (recent Grafana versions issue these as service account tokens).

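```shell
# Start Grafana OSS on localhost:3000.
# admin/admin is for local testing only; change it for anything shared.
docker run -d \
  --name grafana \
  -p 3000:3000 \
  -e "GF_SECURITY_ADMIN_USER=admin" \
  -e "GF_SECURITY_ADMIN_PASSWORD=admin" \
  grafana/grafana-oss
```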
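Once you have a token, you can check it before pasting it into the Grafana MCP server’s settings by calling Grafana’s HTTP API with it. The sketch assumes the container above at http://localhost:3000 and reads the token from a `GRAFANA_TOKEN` environment variable, a name chosen here for illustration. It queries `/api/search?type=dash-db`, Grafana’s dashboard search endpoint, which requires authentication.

```python
import json
import os
import urllib.request


def grafana_request(base_url: str, path: str, token: str) -> urllib.request.Request:
    """Build an authenticated GET request for Grafana's HTTP API."""
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )


def dashboard_titles(base_url: str = "http://localhost:3000", token: str = "") -> list:
    """List dashboard titles visible to the token; raises HTTPError (e.g. 401) if rejected."""
    token = token or os.environ["GRAFANA_TOKEN"]  # hypothetical variable name
    request = grafana_request(base_url, "/api/search?type=dash-db", token)
    with urllib.request.urlopen(request, timeout=5) as response:
        return [item["title"] for item in json.load(response)]
```

On a fresh instance, `dashboard_titles()` should return an empty list; a rejected token raises `urllib.error.HTTPError` with status 401.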

- Go back to the Docker Desktop MCP Catalog: Search for “Grafana.”

- Add MCP Server: Similar to Redis, Docker will spin up the Grafana MCP server. You’ll likely input your Grafana instance URL and API key directly into Docker Desktop’s secure interface.

Step 3: Connect via the MCP Toolkit to Empower Your AI Agent

With both the Redis and Grafana MCP servers running and exposed through the Docker MCP Toolkit, AI clients such as Claude Desktop or Gordon (Docker’s AI assistant) can now interact with them seamlessly. Your IDE’s agent, using its tool-calling capabilities, can:

- Query the Redis MCP Server to fetch specific user activity metrics or system health indicators.

- Pass that real-time data to the Grafana MCP Server to generate a custom dashboard URL, trigger a refresh of an existing dashboard, or even request specific graph data points, which the agent can then analyze or present to you.
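At the protocol level, that chain is two `tools/call` requests, with the client (driven by the model) feeding the first result into the second. In MCP, a tool result carries a `content` list of typed items such as text. The tool names `get` and `create_annotation`, their arguments, and the sample result are hypothetical; each server defines its own tools, which Docker Desktop lists when you select the server.

```python
import json


def tools_call(request_id: int, name: str, arguments: dict) -> dict:
    """Build an MCP tools/call request (a JSON-RPC 2.0 message)."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


def result_text(result: dict) -> str:
    """Concatenate the text items in a tools/call result's content list."""
    return "".join(item["text"] for item in result.get("content", [])
                   if item.get("type") == "text")


# Step 1: ask the Redis server for a key (tool name and arguments are hypothetical).
redis_call = tools_call(1, "get", {"key": "user:2001"})

# An illustrative result; a real server may format its text differently.
redis_result = {"content": [{"type": "text",
                             "text": '{"name":"Saloni Narang","location":"India"}'}]}
user = json.loads(result_text(redis_result))

# Step 2: feed that data into a Grafana tool (hypothetical name and arguments).
grafana_call = tools_call(2, "create_annotation", {"text": f"Looked up {user['name']}"})
```

In practice the model decides which tool to call next and with what arguments; the client only relays messages and enforces permissions.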

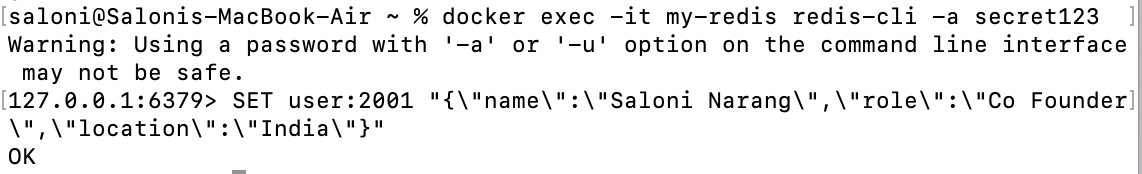

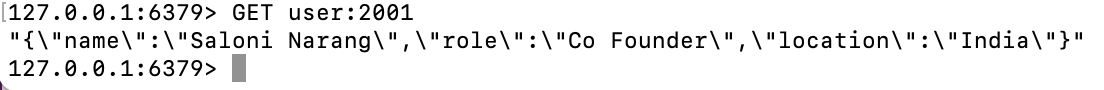

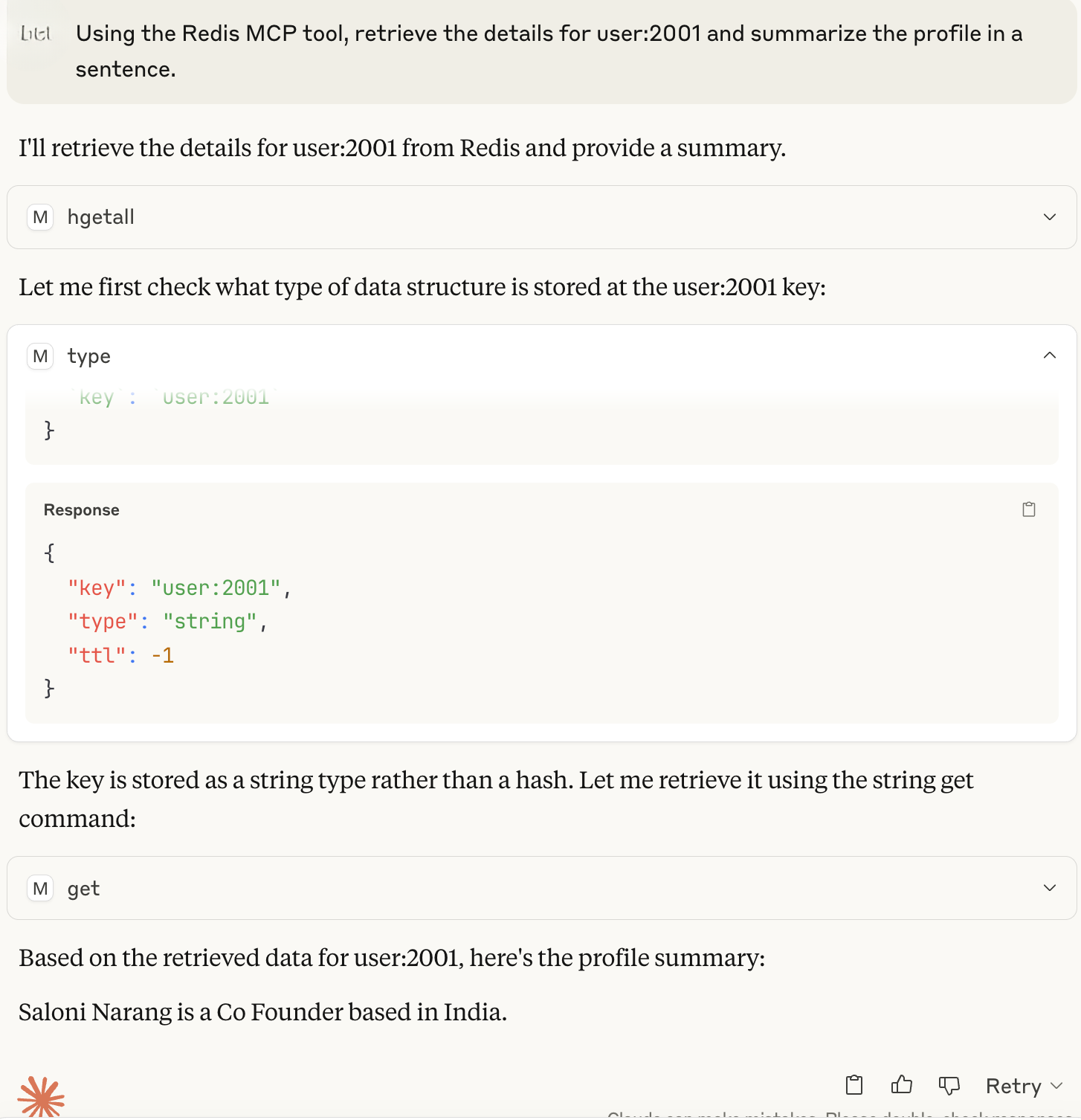

Before making a tool call, let’s add some data to our local Redis instance.

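```shell
# Open an interactive redis-cli session inside the container
docker exec -it my-redis redis-cli -a secret123
```

Then, at the redis-cli prompt, store a JSON user record:

```
SET user:2001 "{\"name\":\"Saloni Narang\",\"role\":\"Co Founder\",\"location\":\"India\"}"
```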
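Escaping JSON by hand inside redis-cli quoting is easy to get wrong. One alternative, sketched here, is to let Python serialize the value and run redis-cli non-interactively through `docker exec`, passing the arguments as a list so no shell quoting is involved. The container name and password match the commands above; `redis_set_argv` is a hypothetical helper.

```python
import json
from typing import List


def redis_set_argv(container: str, password: str, key: str, value: dict) -> List[str]:
    """Build a `docker exec ... redis-cli SET` command that stores `value` as JSON."""
    payload = json.dumps(value, separators=(",", ":"))  # compact JSON string
    # Passing a list to subprocess skips the shell, so the quotes inside
    # `payload` need no escaping. Note that -a exposes the password in the
    # process list, which is acceptable only for a local test setup.
    return ["docker", "exec", container, "redis-cli", "-a", password, "SET", key, payload]


argv = redis_set_argv("my-redis", "secret123", "user:2001",
                      {"name": "Saloni Narang", "role": "Co Founder", "location": "India"})
# To run it: subprocess.run(argv, check=True)
```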

The next step is connecting the client to the MCP servers. You can select a client from the provided list and connect it with one click; for this example, I’ll use Claude Desktop. Once the connection succeeds, Docker Desktop automatically configures the settings the client needs to discover and connect to the MCP servers. If any errors occur, they are written to a log file on the client side.

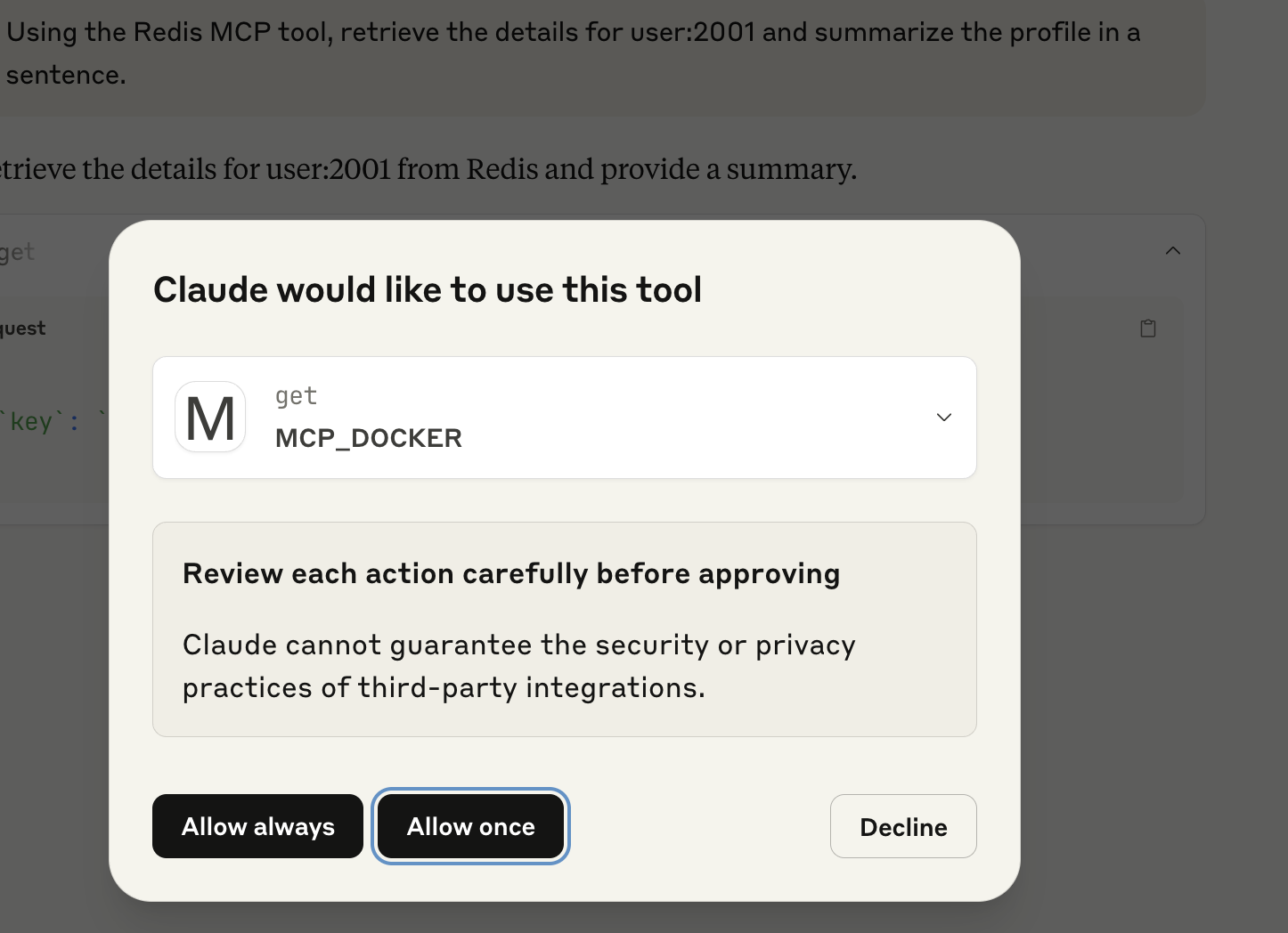
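For Claude Desktop, connecting boils down to an entry in the `mcpServers` object of `claude_desktop_config.json`. At the time of writing, Docker’s documentation shows that entry as a single `MCP_DOCKER` server that runs `docker mcp gateway run`, so every server you enable in the Toolkit is reached through one gateway. The sketch merges such an entry into an existing config without disturbing other servers; treat the entry’s exact contents as an assumption and compare them with what Docker Desktop writes on your machine. The `local-notes` server in the example is hypothetical.

```python
import copy
import json


def docker_gateway_entry() -> dict:
    # Entry as Docker's documentation shows it at the time of writing;
    # compare with what Docker Desktop writes on your machine.
    return {"command": "docker", "args": ["mcp", "gateway", "run"]}


def add_docker_gateway(config: dict, name: str = "MCP_DOCKER") -> dict:
    """Return a copy of a Claude Desktop config with the gateway entry added,
    leaving any other configured servers untouched."""
    merged = copy.deepcopy(config)
    merged.setdefault("mcpServers", {})[name] = docker_gateway_entry()
    return merged


if __name__ == "__main__":
    existing = {"mcpServers": {"local-notes": {"command": "notes-mcp"}}}  # hypothetical
    print(json.dumps(add_docker_gateway(existing), indent=2))
```

One gateway entry instead of one entry per server is what keeps the client’s configuration stable as you enable or disable servers in the Toolkit.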

Now let’s open Claude Desktop and run a query

Claude UI Permission Prompt

Claude Agent Using Redis and Grafana MCP Servers

This is how you can use the power of AI along with MCP servers via Docker Desktop.

How to Contribute to the Docker MCP Registry

The Docker MCP Registry is open for community contributions, allowing developers and teams to publish their own MCP servers to the official Docker MCP Catalog. Once listed, these servers become accessible through Docker Desktop’s MCP Toolkit, Docker Hub, and the web-based MCP Catalog, making them instantly available to millions of developers.

Here’s how the contribution process works:

Option A: Docker-Built Image

In this model, contributors provide the MCP server metadata, and Docker handles the entire image build and publishing process. Once the submission is approved, Docker builds the image using its secure pipeline, signs it, and publishes it to the mcp/ namespace on Docker Hub.

Option B: Self-Built Image

Contributors who prefer to manage their own container builds can submit a pre-built image for inclusion in the catalog. These images won’t receive Docker’s build-time security guarantees, but still benefit from Docker’s container isolation model.

Updating or Removing an MCP Entry

If a submitted MCP server needs to be updated or removed, contributors can open an issue in the MCP Registry GitHub repo with a brief explanation.

Submission Requirements

To ensure quality and security across the ecosystem, all submitted MCP servers must:

- Follow basic security best practices

- Be containerized and compatible with MCP standards

- Include a working Docker deployment

- Provide documentation and usage instructions

- Implement basic error handling and logging

Non-compliant or outdated entries may be flagged for revision or removal.
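Two MCP conventions are worth knowing for the error-handling and logging requirement: a stdio server must keep stdout for protocol messages, so logs belong on stderr, and a tool that fails should say so in its result with `isError: true` (letting the model see what went wrong) instead of crashing the server. The handler below is a hypothetical example following both conventions; it is not taken from the registry’s own checklist, and `USERS` stands in for a real data source.

```python
import json
import logging
import sys

# Log to stderr: on the stdio transport, stdout is reserved for MCP messages.
logging.basicConfig(stream=sys.stderr, level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(name)s: %(message)s")
log = logging.getLogger("example-mcp-server")

USERS = {"2001": {"name": "Saloni Narang", "location": "India"}}  # hypothetical data


def tool_result(text: str, is_error: bool = False) -> dict:
    """Shape a tools/call result: a content list plus the isError flag."""
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def handle_get_user(arguments: dict) -> dict:
    """Hypothetical tool handler that reports failures instead of raising."""
    try:
        user_id = arguments.get("user_id")
        if not user_id:
            log.warning("get_user called without user_id: %r", arguments)
            return tool_result("Missing required argument: user_id", is_error=True)
        user = USERS.get(str(user_id))
        if user is None:
            log.info("get_user: no record for %s", user_id)
            return tool_result(f"No user with id {user_id}", is_error=True)
        return tool_result(json.dumps(user))
    except Exception:  # last resort: log the traceback, keep the server alive
        log.exception("get_user failed unexpectedly")
        return tool_result("Internal error while fetching user", is_error=True)
```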

Contributing to the Docker MCP Catalog is a great way to make your tools discoverable and usable by AI agents across the ecosystem, whether for automating tasks, querying APIs, or powering real-time agentic workflows.

Want to contribute? Head over to github.com/docker/mcp-registry to get started.

Conclusion

Docker has always stood at the intersection of innovation and simplicity, from making containerization accessible to now enabling developers to build, share, and run AI developer tools effortlessly. With the rise of agentic AI, the Docker MCP Catalog and Toolkit bring much-needed structure, security, and ease-of-use to the world of AI integrations.

Whether you’re just exploring what MCP is or you’re deep into building AI agents that need to interact with external tools, Docker gives you the fastest on-ramp: no YAML wrangling, no token confusion, just click and go.

As we experiment with building our own MCP servers in the future, we’d love to hear from you:

- Which MCP server is your favorite?

- What use case are you solving with Docker + AI today?

You can quote this post with your use case and favorite MCP server, and tag Docker on LinkedIn or X.