If you use Docker for development, you’re already well on your way to creating cloud-native software. Containerization takes care of all the essential elements for running code like system dependencies, language requirements, and application configurations. But can it handle more intricate use cases like Kubernetes local development?

In more complex systems, you might need to connect your code with several auxiliary services (like databases, storage volumes, APIs, caching layers, and message brokers). In modern Kubernetes systems, you’ll also need to manage service meshes and cloud-native deployment patterns (like probes, configuration, and structural and behavioral patterns).

Kubernetes offers a uniform interface for orchestrating scalable, resilient, and services-based applications. However, its complexity can be overwhelming, especially for developers without extensive experience setting up local Kubernetes clusters. Gefyra helps developers work in local Kubernetes development environments and create secure, reliable, and scalable software more easily.

What is Gefyra?

Gefyra, named after the Greek word for “bridge,” is a comprehensive toolkit that facilitates Docker-based development with Kubernetes. If you plan to use Kubernetes as your production platform, it’s essential to work with the same environment during development. Doing so keeps development close to production (dev/prod parity) and minimizes problems when you move code from one to the other.

Gefyra is an open source project that provides docker run on steroids. You can use it to connect your local Docker with any Kubernetes cluster. This allows you to run a container locally that behaves as if it were running in the cluster. You can write code locally in your favorite code editor using the tools you love.

Gefyra also spares you the usual multi-step routine after every code change. Instead, Gefyra lets you connect your code to the cluster without making any changes to your Dockerfile. There’s no need to build a Docker image, push it to a registry, or redeploy your workload in the cluster.

This method is helpful for new code and when inspecting existing code with a debugger connected to a container. That makes Gefyra a productivity superstar for any Kubernetes-based development work.

How does Gefyra work?

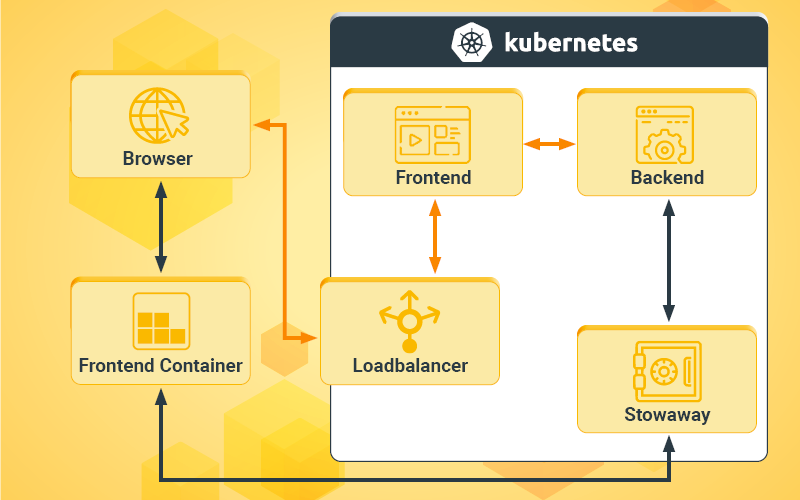

Gefyra installs several cluster-side components that allow it to control the local development machine and the development cluster.

These components include a tunnel between the local development machine and the Kubernetes cluster, a local DNS resolver that behaves like the cluster DNS, and sophisticated IP routing mechanisms. To build on these components, Gefyra uses popular open source technologies, such as Docker, WireGuard, CoreDNS, Nginx, and Rsync.

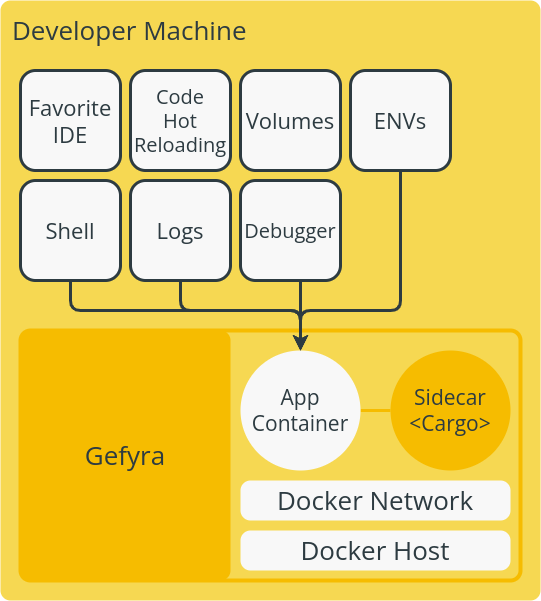

To set up local development, the developer runs the application in a container on their machine. The container should have a sidecar container named Cargo. The sidecar acts as a network gateway and forwards all requests to the cluster using a CoreDNS server (Figure 1).

Cargo encrypts all the passing traffic with WireGuard using ad hoc connection secrets. Developers can use their existing tooling, including their favorite code editor and debuggers, to develop their applications.

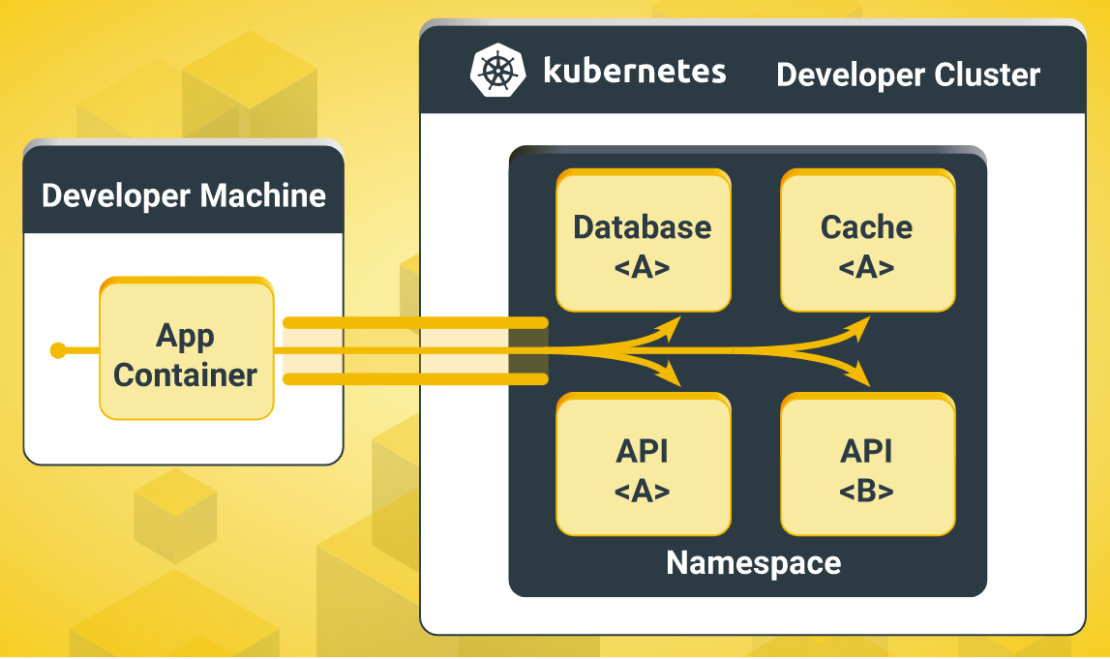

Gefyra manages two ends of a WireGuard connection and automatically establishes a VPN tunnel between the developer and the cluster. This creates a robust and fast connection without stressing the Kubernetes API server (Figure 2). The client side of Gefyra also manages a local Docker network with a VPN endpoint. This lets the container join the VPN that directs all traffic into the cluster.

Gefyra also allows bridging existing traffic from the cluster to the local container, enabling developers to test their code with real-world requests from the cluster and collaborate on changes in a team. The local container instance remains connected to auxiliary services and resources in the cluster while receiving requests from other Pods, Services, or the Ingress. This setup removes the need to create container images in a CI pipeline and update clusters for small changes.

Why run Gefyra as a Docker Extension?

The Python library containing Gefyra’s core functionality is available in its repository. The CLI that comes with the project has a long list of arguments that may be overwhelming for some users. To make Gefyra more accessible to developers, the Gefyra team developed the Docker Desktop extension.

The Gefyra extension lets developers work with a variety of Kubernetes clusters in Docker Desktop. These include the built-in cluster, local providers (like Minikube, K3d, Kind, or Getdeck Beiboot), and remote clusters. Let’s get started.

Installing the Gefyra Docker Desktop extension

Prerequisites: Docker Desktop 4.8 or later.

Step 1: Initial setup

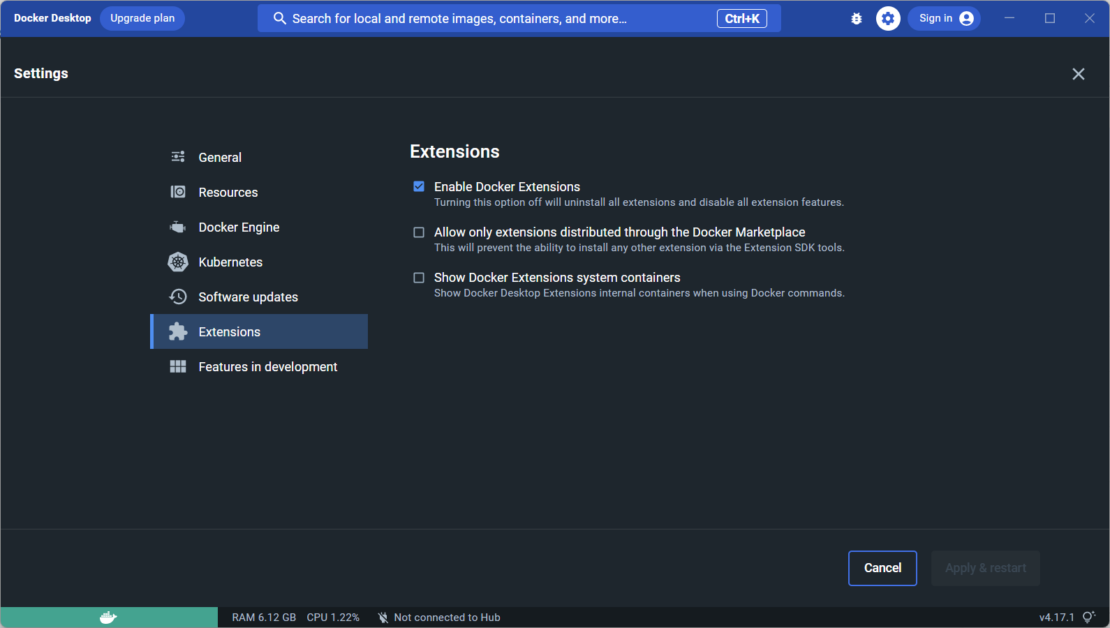

First, make sure you have enabled Docker Extensions in Docker Desktop (it should be enabled by default). In Settings | Extensions, select the Enable Docker Extensions checkbox (Figure 3).

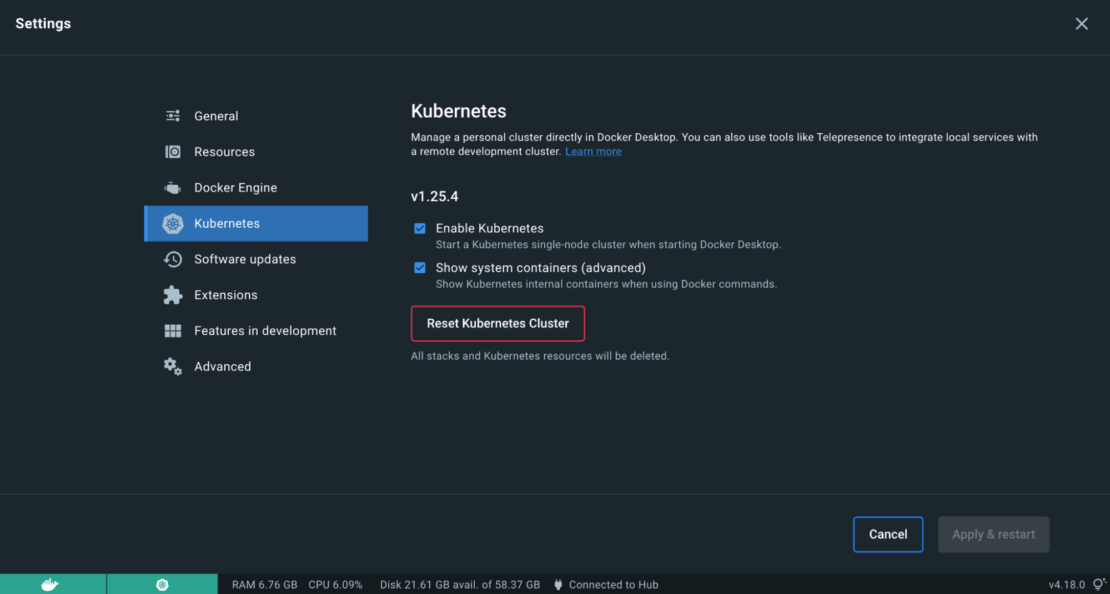

You’ll also need to enable Kubernetes under Settings (Figure 4).

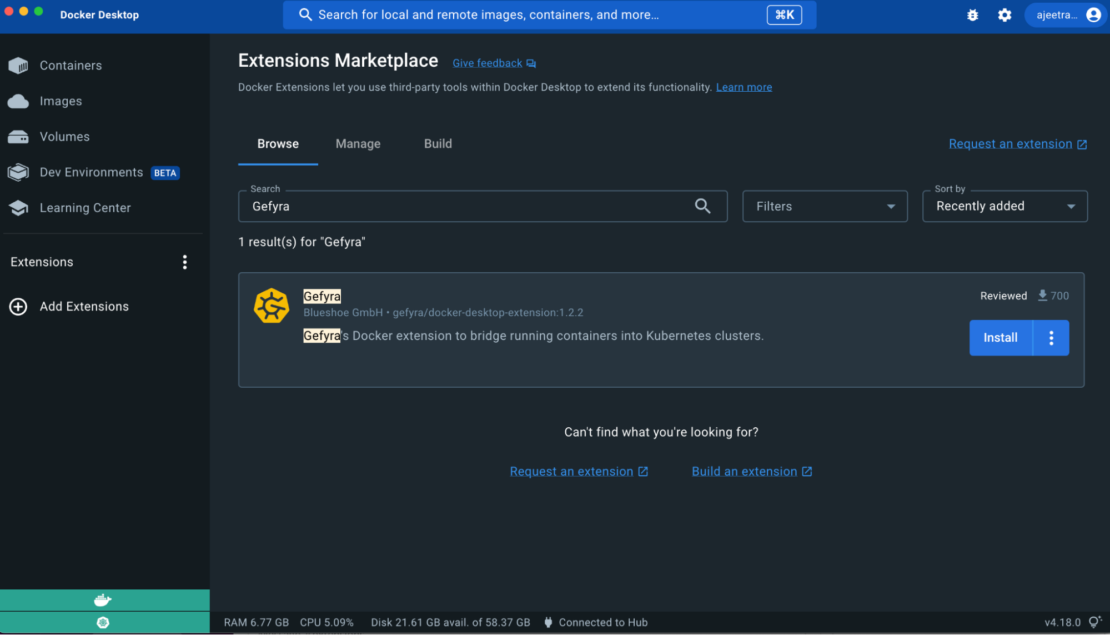

Gefyra is in the Docker Extensions Marketplace. Next, we’ll install Gefyra in Docker Desktop.

Step 2: Add the Gefyra extension

Open Docker Desktop and select Add Extensions to find the Gefyra extension in the Extensions Marketplace (Figure 5).

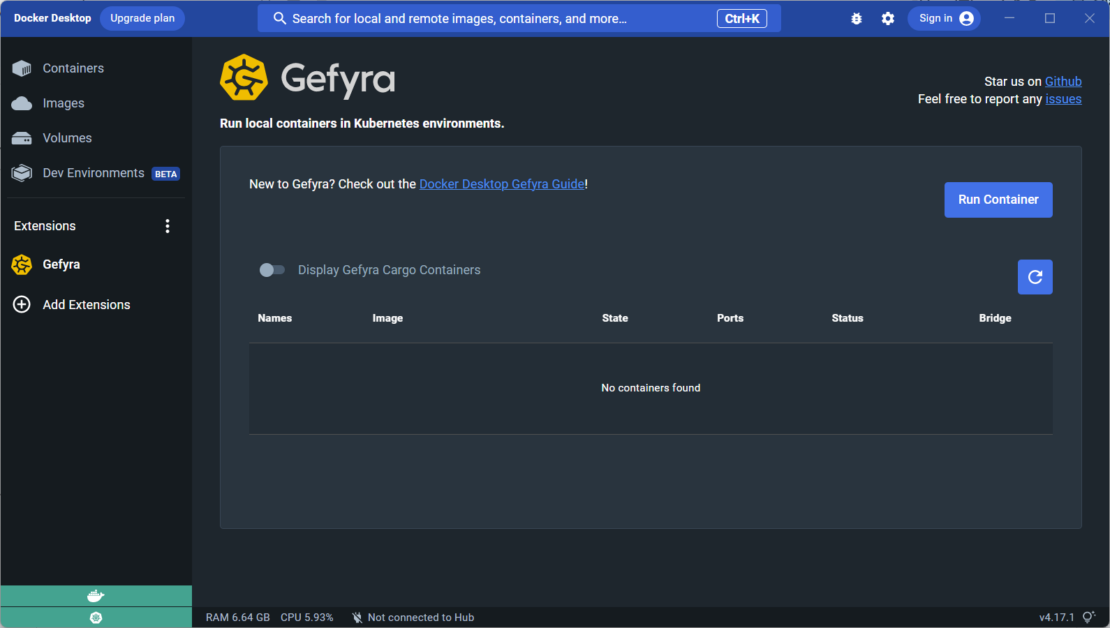

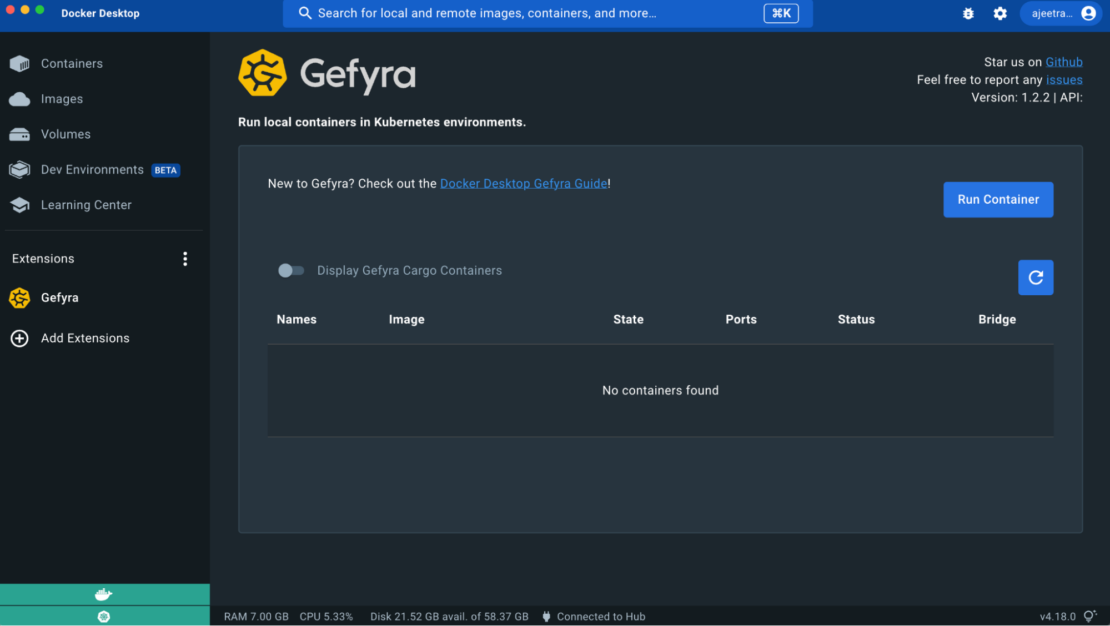

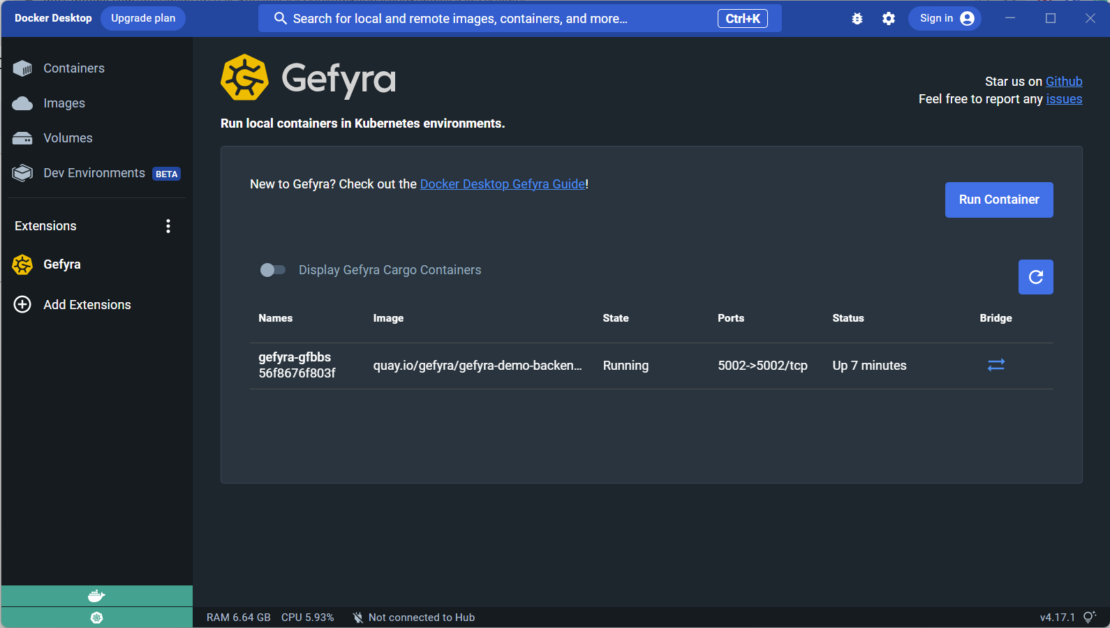

Once you install Gefyra, you can open the extension and find the Gefyra start screen. Here you’ll find a list of all the containers connected to a Kubernetes cluster. Of course, this section is empty on a fresh install.

To start a local container using Gefyra, click the Run Container button in the top-right corner (Figure 6).

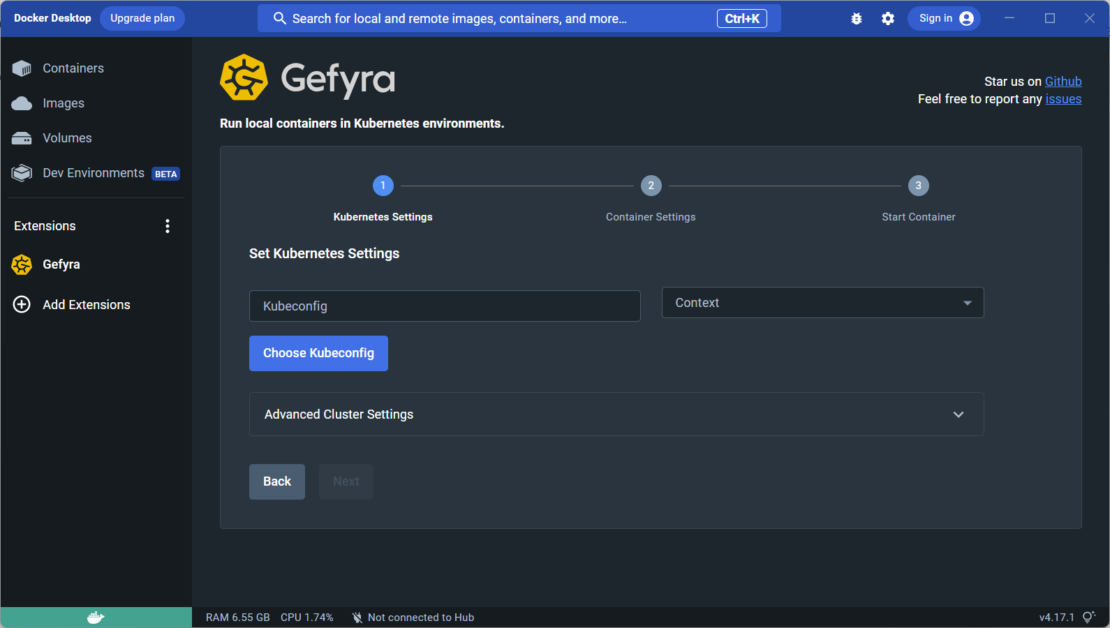

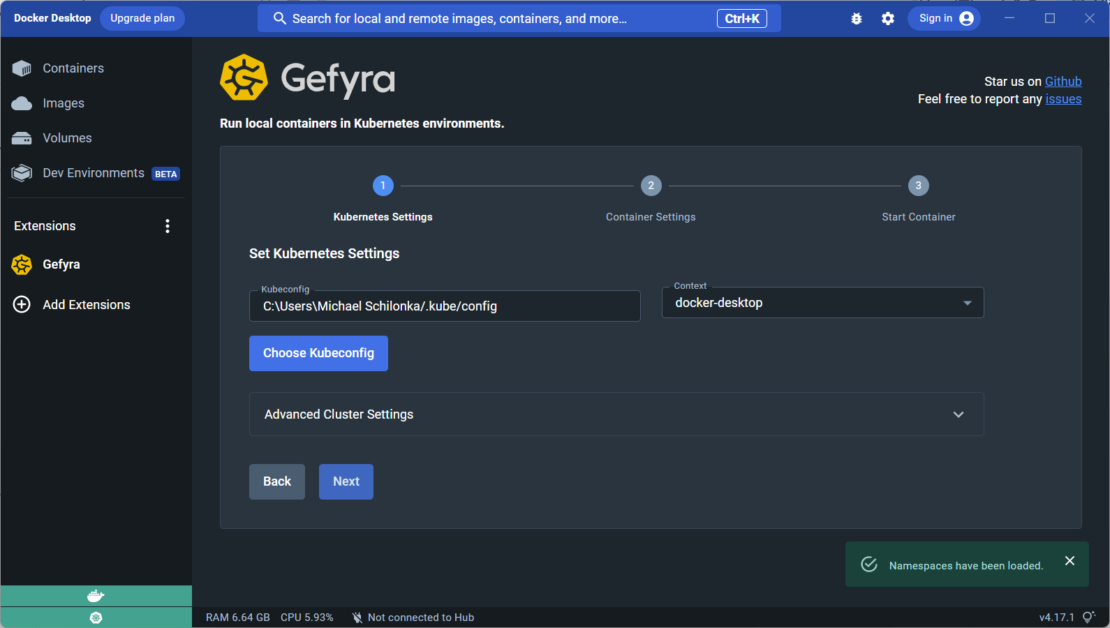

The next steps will vary based on whether you’re working with a local or remote Kubernetes cluster. If you’re using a local cluster, select the matching kubeconfig file and optionally set the context (Figure 7).

For remote clusters, you may need to manually specify additional parameters. We’ll give a detailed example for you to follow along with in the next section.

The Kubernetes demo workloads

The following example showcases how Gefyra uses the Kubernetes functionality in Docker Desktop to create a development environment for a simple application (Figure 8).

The application consists of two services, a backend and a frontend, both implemented as Python processes. The frontend service uses a color property obtained from the backend to generate an HTML document. The two services communicate over HTTP, and the backend address is passed to the frontend as an environment variable.

The Gefyra team has created a repository for the Kubernetes demo workloads, which you can find on GitHub.

If you prefer video tutorials, check out this video on YouTube.

Prerequisite

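Make sure the current Kubernetes context points to Docker Desktop. This lets you interact with Docker Desktop’s Kubernetes cluster and deploy applications to it using kubectl. Check the active context with:

```
kubectl config current-context
docker-desktop
```

If it prints something other than docker-desktop, switch with kubectl config use-context docker-desktop.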
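If you want to script this check, kubectl config view -o json prints the kubeconfig as JSON, including its top-level current-context field. The sketch below reads that output and compares it with the expected context. The helper names current_context and check_context are hypothetical; this is a convenience sketch, not part of Gefyra.

```python
import json
import subprocess

EXPECTED_CONTEXT = "docker-desktop"


def current_context(kubeconfig_json: str) -> str:
    """Return the current-context entry from `kubectl config view -o json` output."""
    config = json.loads(kubeconfig_json)
    return config.get("current-context", "")


def check_context(expected: str = EXPECTED_CONTEXT) -> bool:
    """Ask kubectl for the kubeconfig and verify that `expected` is active."""
    output = subprocess.run(
        ["kubectl", "config", "view", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return current_context(output) == expected
```

Calling check_context() before applying the demo workload catches the case where kubectl still points at another cluster.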

1. Clone the repository

First, clone the repository and change into the demo directory:

```
git clone https://github.com/gefyrahq/gefyra-demos
cd gefyra-demos/kcd-munich
```

2. Apply the workload

The manifest in manifests/demo.yaml sets up a simple two-tier app consisting of a backend service and a frontend service. The SVC_URL environment variable passed to the frontend container establishes communication between the two services.

It defines two pods and two services; each pair is named backend and frontend. The backend pod runs a container from the quay.io/gefyra/gefyra-demo-backend image on port 5002.

The frontend pod runs a container from the quay.io/gefyra/gefyra-demo-frontend image on port 5003. The frontend container has an environment variable named SVC_URL set to backend.default.svc.cluster.local:5002.

The backend service selects the backend pod via the app: backend label and exposes port 5002. The frontend service selects the frontend pod via the app: frontend label and exposes port 80 as a load balancer, routing traffic to port 5003 of the frontend container.
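Because the manifest itself isn’t reproduced here, the following Python sketch rebuilds the four objects from the description above as plain dictionaries and prints them as a JSON v1 List, a format kubectl apply -f - also accepts. It is a reconstruction, not the repository’s demo.yaml: container names, the ClusterIP type of the backend service, and the List wrapper are assumptions.

```python
import json
from typing import Dict, List, Optional


def pod(name: str, image: str, port: int,
        env: Optional[Dict[str, str]] = None) -> dict:
    """Build a single-container Pod labeled app=<name>."""
    container = {
        "name": name,  # assumption: container named after its pod
        "image": image,
        "ports": [{"containerPort": port}],
    }
    if env:
        container["env"] = [{"name": k, "value": v} for k, v in env.items()]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {"containers": [container]},
    }


def service(name: str, port: int, target_port: int,
            svc_type: str = "ClusterIP") -> dict:
    """Build a Service that selects pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": svc_type,
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def demo_manifest() -> dict:
    """Return the four demo objects wrapped in a v1 List."""
    items: List[dict] = [
        pod("backend", "quay.io/gefyra/gefyra-demo-backend", 5002),
        pod("frontend", "quay.io/gefyra/gefyra-demo-frontend", 5003,
            env={"SVC_URL": "backend.default.svc.cluster.local:5002"}),
        service("backend", 5002, 5002),
        service("frontend", 80, 5003, svc_type="LoadBalancer"),
    ]
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    # Usage: python demo_manifest.py | kubectl apply -f -
    print(json.dumps(demo_manifest(), indent=2))
```

These objects carry the same names as the ones in manifests/demo.yaml, so apply one or the other, not both.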

```
/gefyra-demos/kcd-munich> kubectl apply -f manifests/demo.yaml
pod/backend created
pod/frontend created
service/backend created
service/frontend created
```

Let’s watch the workload getting ready:

```
kubectl get pods
NAME       READY   STATUS    RESTARTS   AGE
backend    1/1     Running   0          2m6s
frontend   1/1     Running   0          2m6s
```

After the backend and frontend pods have initialized (check the READY column in the output), you can access the application at http://localhost in your web browser. This URL is served from Docker Desktop’s Kubernetes environment.
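If you’d rather not poll by hand, kubectl wait --for=condition=Ready pod --all --timeout=120s blocks until the pods report Ready. As an alternative illustration, the sketch below parses the READY column of kubectl get pods output like the one shown above. It assumes the default column layout and is only a minimal helper, not part of the demo.

```python
def all_pods_ready(get_pods_output: str) -> bool:
    """Return True if every pod's READY column shows all containers ready."""
    lines = [line for line in get_pods_output.strip().splitlines() if line.strip()]
    if len(lines) < 2:
        return False  # header only (or no output): nothing is ready yet
    ready_idx = lines[0].split().index("READY")
    for row in lines[1:]:
        ready, total = row.split()[ready_idx].split("/")
        if ready != total:
            return False
    return True


SAMPLE = """\
NAME       READY   STATUS    RESTARTS   AGE
backend    1/1     Running   0          2m6s
frontend   1/1     Running   0          2m6s
"""

print(all_pods_ready(SAMPLE))  # True
```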

Upon loading the page, you’ll see the application’s output in your browser. It may not be visually stunning, but it works.

Now, let’s explore how we can correct or adjust the color of the output generated by the frontend component.

3. Using Gefyra “Run Container” with the frontend process

In the first part of this section, you’ll learn how to run the frontend process on your local machine while it talks to a resource in the Kubernetes cluster: the backend API. In other setups, that resource could be anything from a database to a message broker or another service in the architecture.

Kick off a local container with Run Container from the Gefyra start screen (Figure 9).

Once you’ve entered the first step of this process, you’ll see that the kubeconfig and context are set automatically. That’s a lifesaver if you don’t know where to find the default kubeconfig on your host.

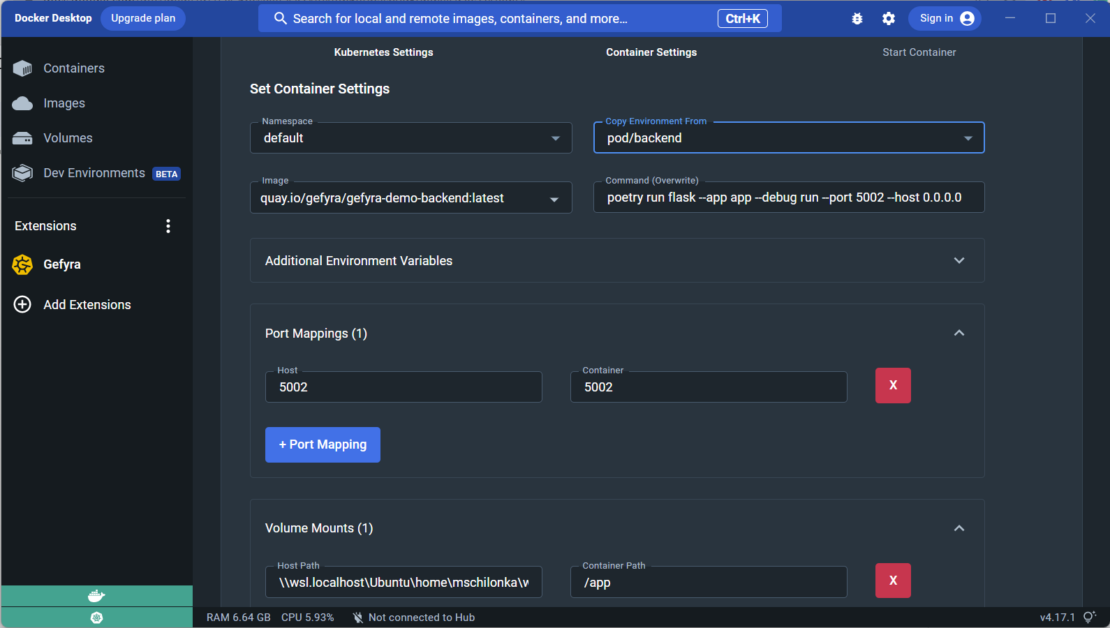

Just hit the Next button and proceed with the container settings (Figure 10).

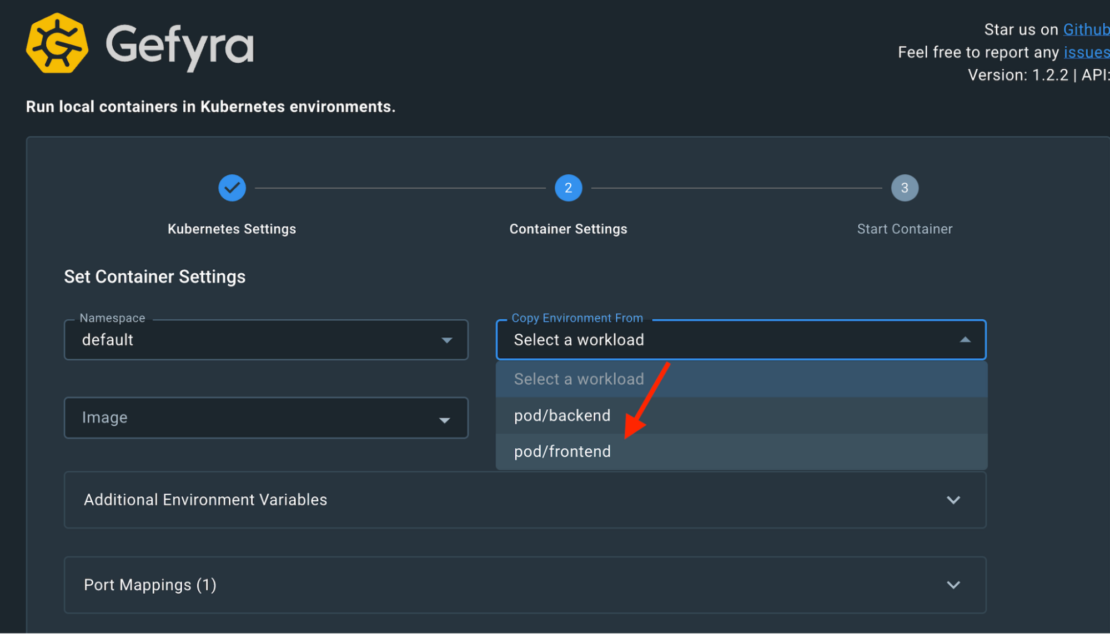

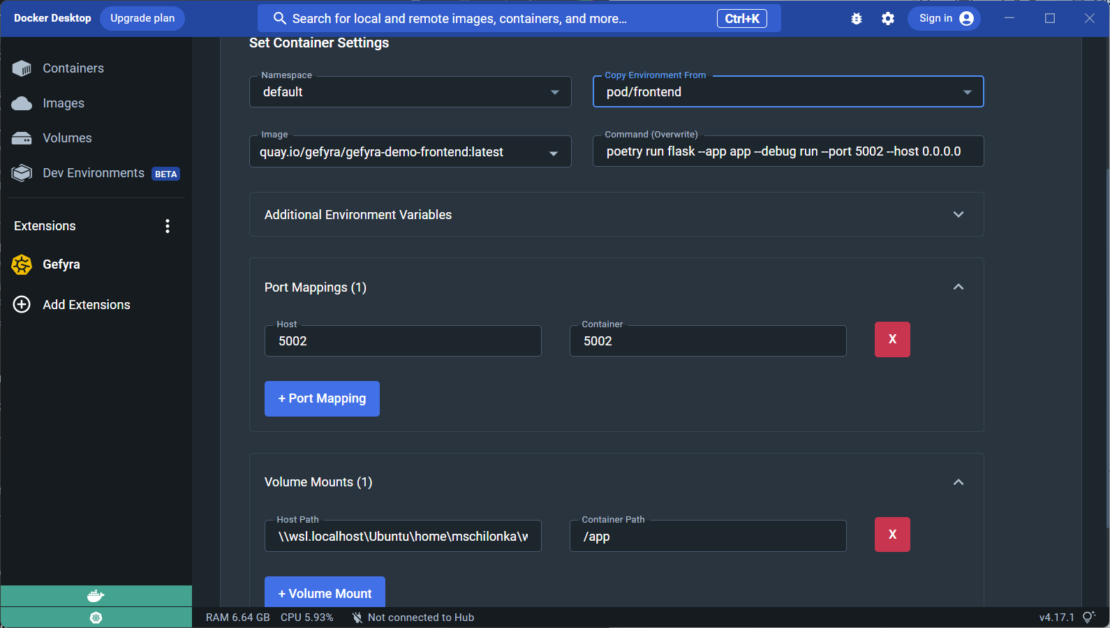

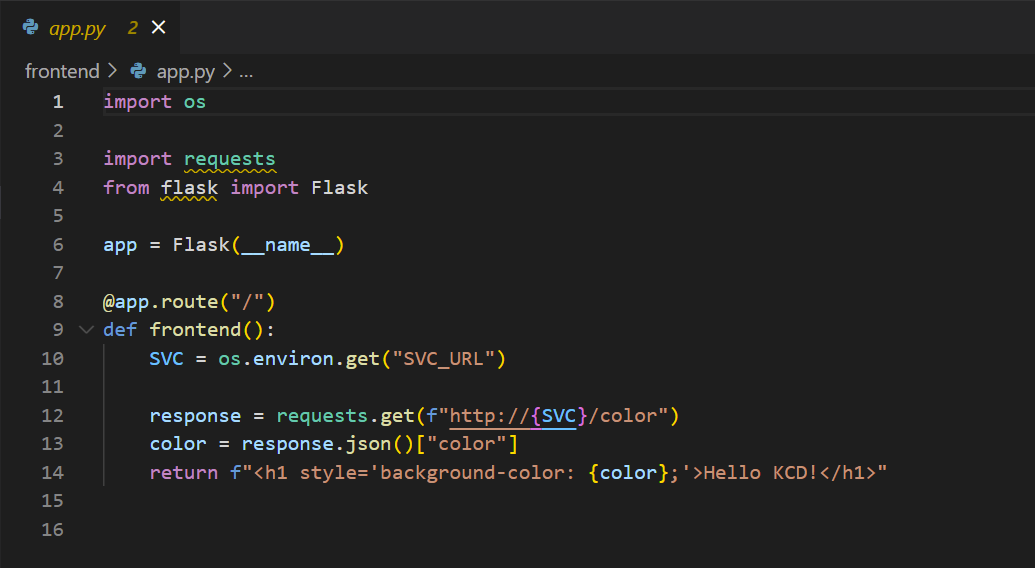

In the Container Settings step, you can configure the Kubernetes-related parameters for your local container. In this example, everything happens in the default Kubernetes namespace. Select it in the first drop-down input (Figure 11).

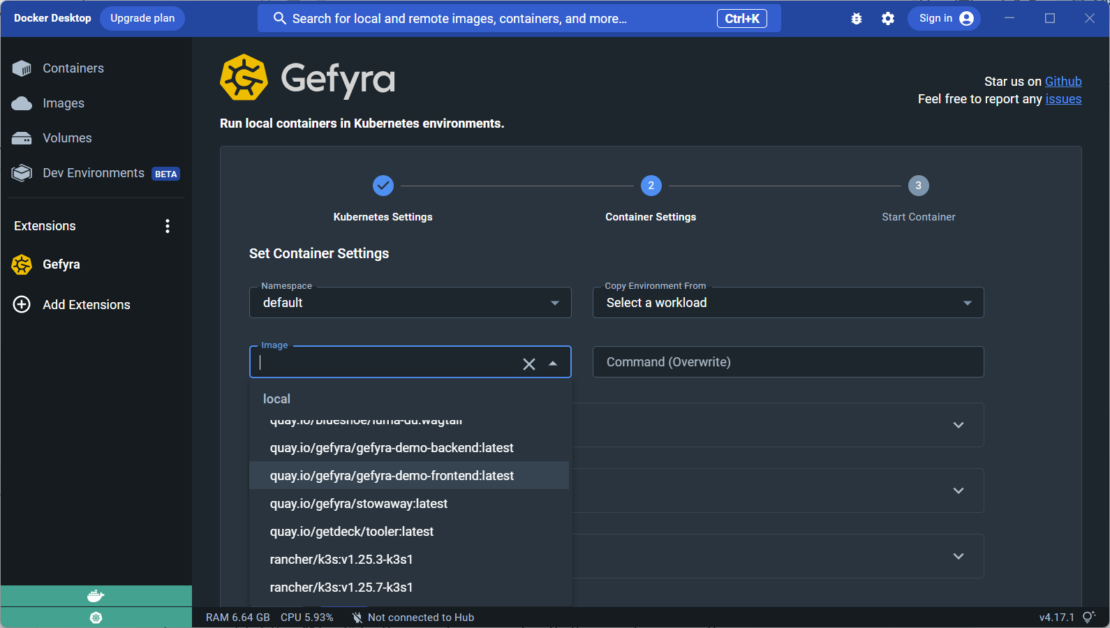

In the drop-down input below Image, you can specify the image to run locally. Note that it lists all images used in the selected namespace (from the Namespace selector). This way, you don’t need to worry about what images are in the cluster or find them yourself.

Instead, you get a suggestion to work with the image at hand, which is what we want to do in this example (Figure 12). You can still specify any image you want, even if it’s a new one you made on your machine.

Next, we’ll copy the environment of the frontend container running in the cluster. To do this, you’ll need to select pod/frontend from the Copy Environment From selector (Figure 13). This step is important because you need the backend service address, which is passed to the pod in the cluster using an environment variable.
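Gefyra performs this copy for you. As a rough illustration of what “copying the environment” involves, the sketch below pulls the literal env entries out of a pod object in the JSON form returned by kubectl get pod frontend -o json. The function name is hypothetical, and skipping valueFrom entries (Secret or ConfigMap references) is a simplification of this sketch, not a statement about how Gefyra behaves.

```python
import json
from typing import Dict, Optional


def env_from_pod(pod_json: str, container_name: Optional[str] = None) -> Dict[str, str]:
    """Collect literal env vars from a pod's container spec.

    Uses the first container unless `container_name` is given. Entries that
    use `valueFrom` (Secrets, ConfigMaps, field refs) are skipped here.
    """
    pod = json.loads(pod_json)
    containers = pod["spec"]["containers"]
    if container_name is not None:
        containers = [c for c in containers if c["name"] == container_name]
    if not containers:
        raise ValueError("container not found in pod spec")
    return {
        entry["name"]: entry["value"]
        for entry in containers[0].get("env", [])
        if "value" in entry
    }
```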

In the upper part of the container settings, override the container image’s run command with the following to enable code reloading:

```
poetry run flask --app app --debug run --port 5002 --host 0.0.0.0
```

Let’s start the container process on port 5002 and expose this port on the local machine. We’ll also mount the code directory (/gefyra-demos/kcd-munich/frontend) to make code changes immediately visible. Then, click on the Run button to start the process.

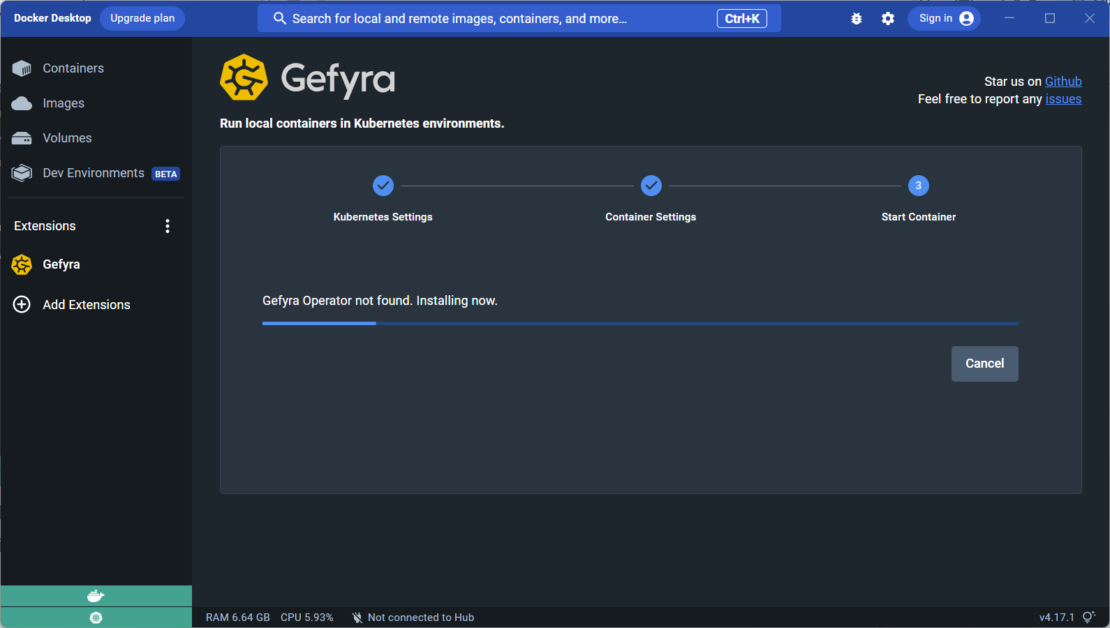

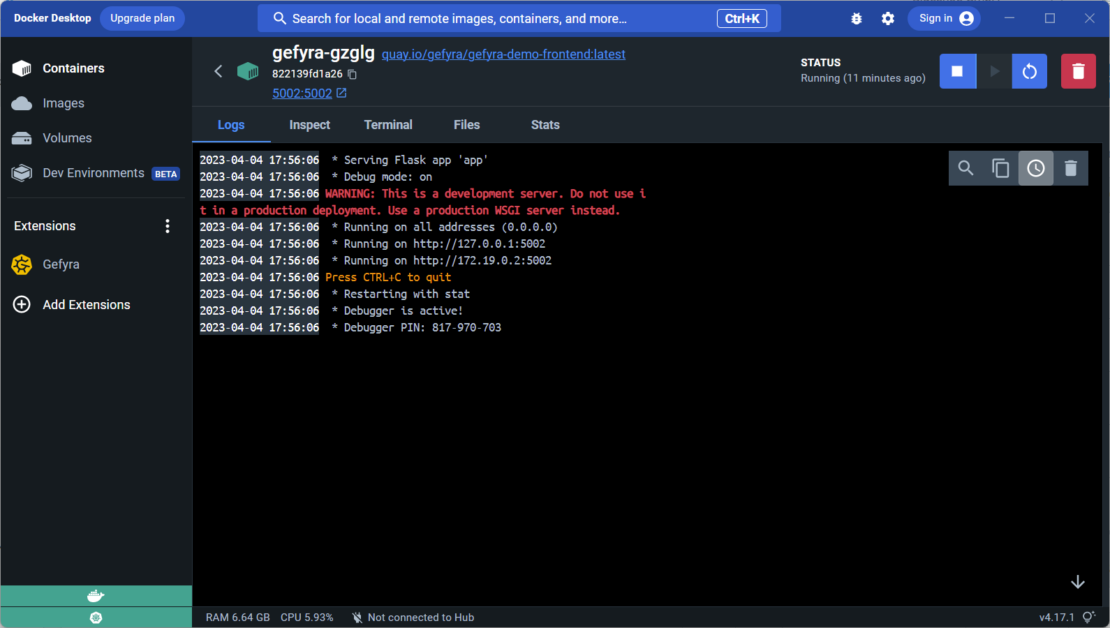

Next, Gefyra installs its cluster-side components, prepares the local networking, and pulls the container image to start locally (Figure 14). Once the container is ready, you’re redirected to Docker Desktop’s native container view for it (Figure 15).

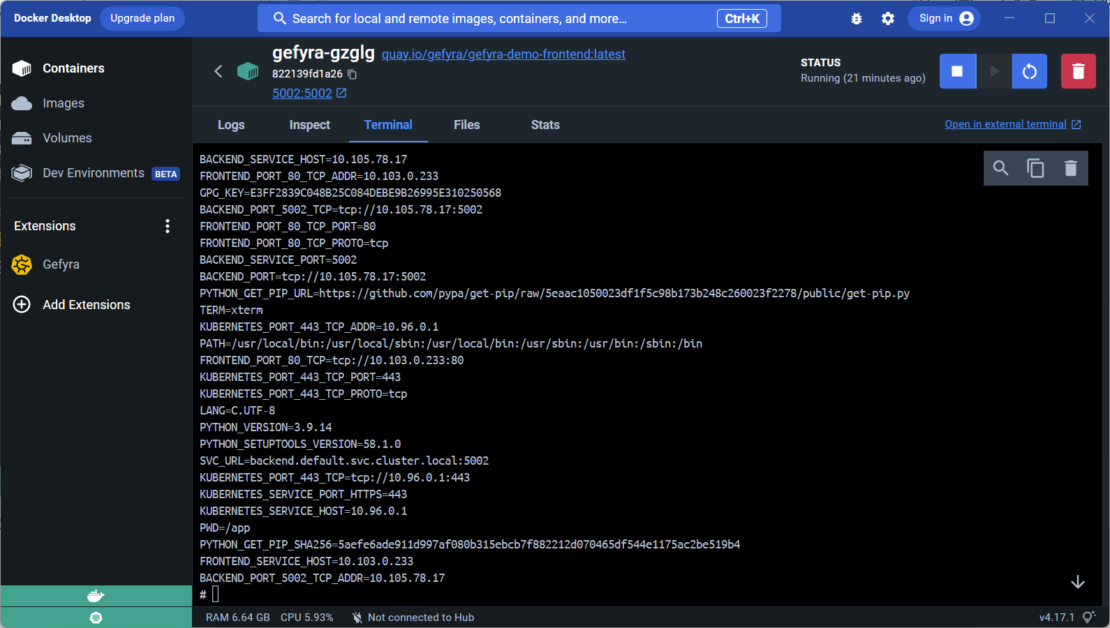

You can look around in the container using the Terminal tab (Figure 16). Type in the env command in the shell, and you’ll see all the environment variables coming with Kubernetes.

We’re particularly interested in the SVC_URL variable, which points the frontend to the backend process still running in the cluster. Now, when you browse to http://localhost:5002, you’ll get a slightly different output.

Why is that? To investigate, let’s look at the code that we already mounted into the local container, specifically the app.py that runs a Flask server (Figure 17).

The last line of the code in the Gefyra example displays the text Hello KCD!, and any changes made to this code are immediately updated in the local container. This feature is noteworthy because developers can freely modify the code and see the changes reflected in real time without having to rebuild or redeploy the container.

Line 12 of the code in the Gefyra example sends a request to a service URL, which is stored in the variable SVC. The value of SVC is read from an environment variable named SVC_URL, which is copied from the pod in the Kubernetes cluster. The URL, backend.default.svc.cluster.local:5002, is a fully qualified domain name (FQDN) that points to a Kubernetes service object and a port.
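Service addresses like this one follow the Kubernetes DNS naming scheme service.namespace.svc.cluster-domain, with the port appended in SVC_URL. A small parser makes the parts explicit. It assumes the host:port form used by SVC_URL; the ServiceAddress type and function name are illustrative only.

```python
from typing import NamedTuple


class ServiceAddress(NamedTuple):
    service: str
    namespace: str
    cluster_domain: str
    port: int


def parse_service_address(value: str) -> ServiceAddress:
    """Split '<service>.<namespace>.svc.<cluster-domain>:<port>' into its parts."""
    host, sep, port = value.rpartition(":")
    if not sep:
        raise ValueError(f"missing port in {value!r}")
    service, namespace, svc, domain = host.split(".", 3)
    if svc != "svc":
        raise ValueError(f"not a service FQDN: {host!r}")
    return ServiceAddress(service, namespace, domain, int(port))


print(parse_service_address("backend.default.svc.cluster.local:5002"))
# ServiceAddress(service='backend', namespace='default', cluster_domain='cluster.local', port=5002)
```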

These URLs are commonly used by applications in Kubernetes to communicate with each other. The local container process is capable of sending requests to services running in Kubernetes using the native connection parameters, without the need for developers to make any changes, which may seem like magic at times.
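The frontend’s source isn’t reproduced here, but the pattern described (read SVC_URL from the environment, call the backend, use the returned color) can be sketched with the standard library alone. The /color path and the JSON color field match the backend response described later in this walkthrough; the function names and the HTML markup are hypothetical, and the real app uses Flask.

```python
import json
import os
import urllib.request
from html import escape
from typing import Mapping


def backend_color_url(env: Mapping[str, str] = os.environ) -> str:
    """Build the backend URL from SVC_URL, e.g. backend.default.svc.cluster.local:5002."""
    return f"http://{env['SVC_URL']}/color"


def fetch_color(url: str, timeout: float = 2.0) -> str:
    """GET the backend endpoint and return its 'color' property."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)["color"]


def render_page(color: str) -> str:
    """Render a minimal HTML snippet using the backend's color (escaped)."""
    return f'<h1 style="color: {escape(color)}">Hello KCD!</h1>'
```

Because the only cluster-specific input is the SVC_URL value, the same code runs unchanged in the pod and in the local Gefyra container.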

In most development scenarios, the capabilities of Gefyra we just discussed are sufficient. In other words, you can use Gefyra to run a local container that can communicate with resources in the Kubernetes cluster, and you can access the app on a local port. However, what if you need to modify the backend while the frontend is still running in Kubernetes? This is where the “bridge” feature of Gefyra comes in, which we will explore next.

4. Gefyra “bridge” with the backend process

We could keep the frontend process running locally, connected to the backend in Kubernetes. But that isn’t always necessary or desirable, especially for backend developers who aren’t concerned with the frontend. In that case, it’s more convenient to leave the frontend running in the cluster and stop the local instance by selecting the stop button in Docker Desktop’s container view.

To do this, we have to run a local instance of the backend service. It’s the same as with the frontend, but this time with the backend container image (Figure 18).

Unlike the frontend example above, this time you run the backend container image (quay.io/gefyra/gefyra-demo-backend:latest), which the drop-down selector suggests. Copy the environment from the backend pod running in Kubernetes, and set the volume mount to the backend service’s code directory instead.

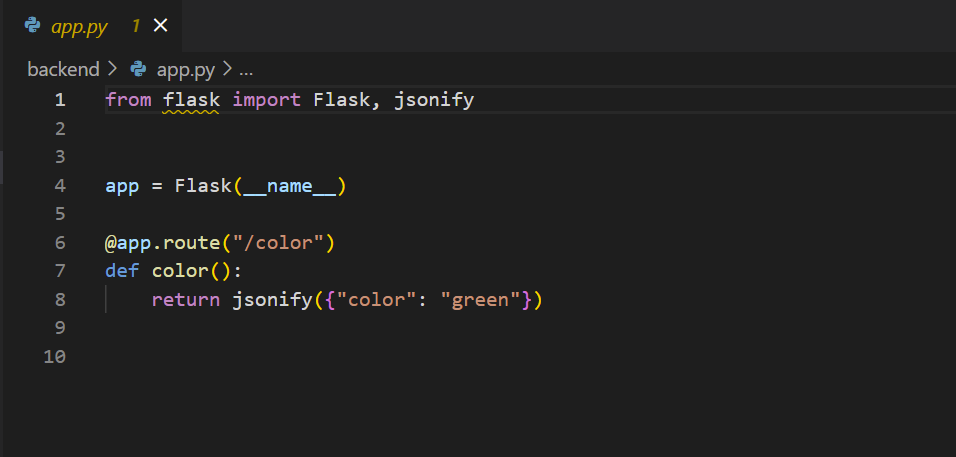

After starting the container, you can check http://localhost:5002/color, which serves the backend API response. Looking at the app.py of the backend service shows the source of this response. In line 8, this app returns a JSON response with the color property set to green (Figure 19).
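To experiment with this contract without the demo image, a /color endpoint returning JSON with a color property can be reproduced with Python’s standard library. This is a stand-in, not the demo’s Flask app; the class and helper names are hypothetical.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class ColorHandler(BaseHTTPRequestHandler):
    """Serve GET /color with a JSON body such as {"color": "green"}."""

    color = "green"  # edit this value to change the response

    def do_GET(self):
        if self.path != "/color":
            self.send_error(404)
            return
        body = json.dumps({"color": self.color}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def start_in_background(port: int = 5002) -> ThreadingHTTPServer:
    """Start the server on all interfaces in a daemon thread and return it."""
    server = ThreadingHTTPServer(("0.0.0.0", port), ColorHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_color(port: int = 5002) -> str:
    """Query /color the way a browser visiting localhost would (IPv4 loopback)."""
    with urllib.request.urlopen(f"http://127.0.0.1:{port}/color", timeout=2) as resp:
        return json.load(resp)["color"]
```

Binding to 0.0.0.0 mirrors the --host 0.0.0.0 flag used in the Flask command earlier, so the endpoint is reachable from outside the container.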

At this point, keep in mind that we’re only running a local instance of the backend service. This time, you won’t need a connection to a Kubernetes-based resource since this container runs without external dependencies.

The idea is to make the frontend process that serves from the Kubernetes cluster on http://localhost (still blue) pick up our backend information to render its output. That’s done using Gefyra’s bridge feature. In the next step, we’ll overlay the backend process running in the cluster with our local container instance so that the local code becomes effective in the cluster.

Back on the Gefyra start screen, the container list includes a Bridge column for each locally running container (Figure 20). Click the button in that column to bridge your local container into the cluster.

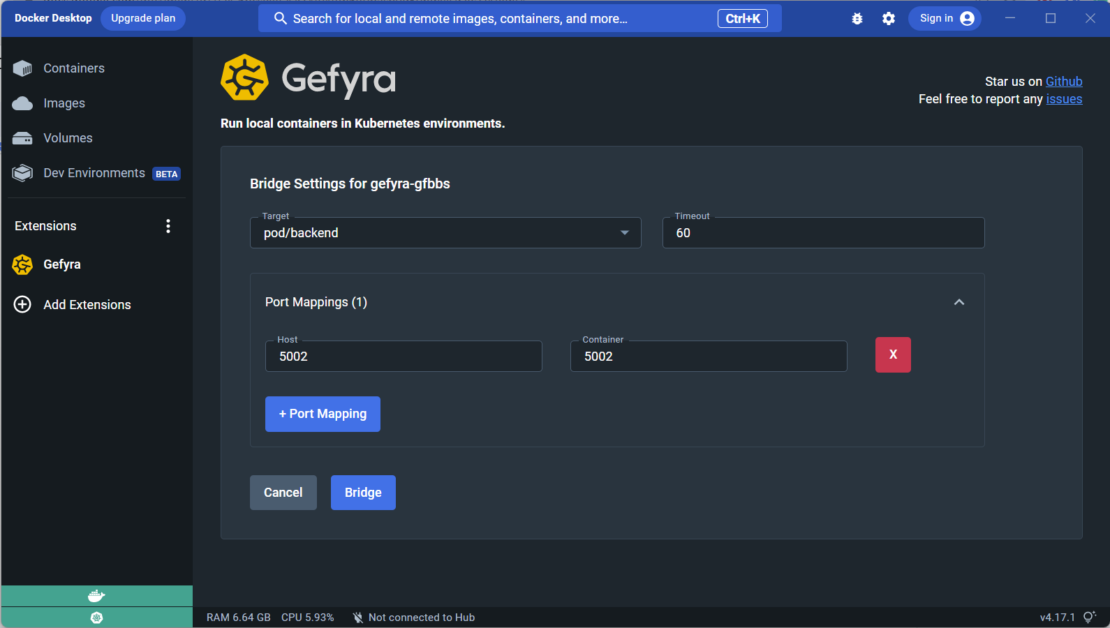

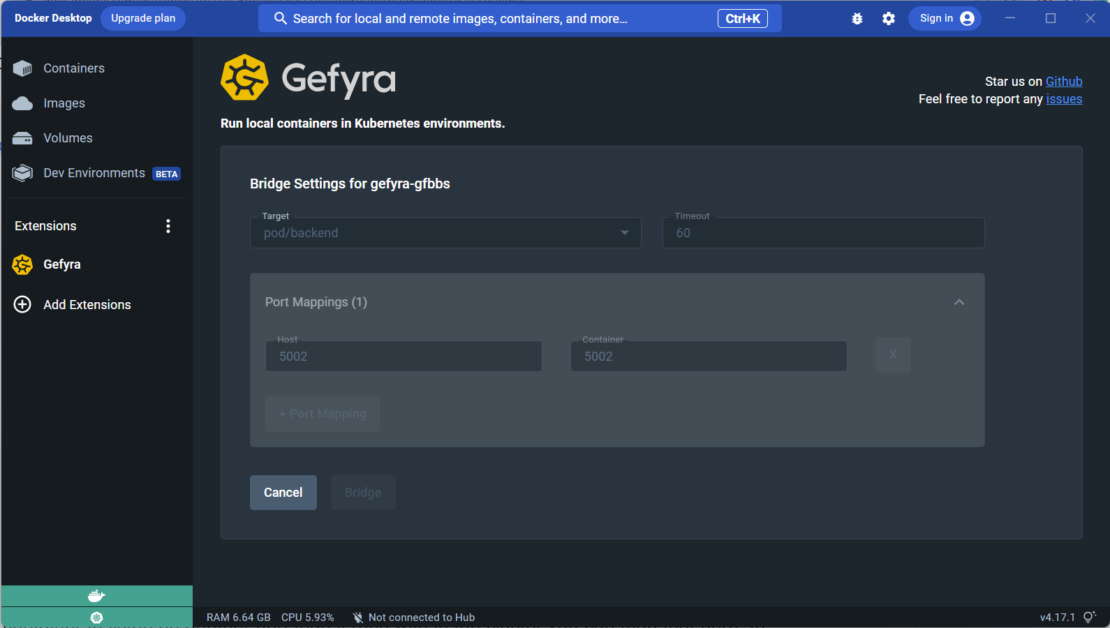

In the next dialog, we need to enter the bridge configuration (Figure 21).

Let’s set the “Target” for the bridge to the backend pod, which currently answers the frontend’s requests in the cluster, and set the bridge timeout to 60 seconds. We also need to map the port of the proxy running in the cluster to the local instance.

If your local container listens on a different port than the one in the cluster, you can specify the mapping here (Figure 22). In this example, the backend listens on port 5002 both in the cluster and on the local machine, so we map 5002 to 5002. After you click the Bridge button, it takes a few seconds before you’re back at the container list on Gefyra’s start view.
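To make the port mapping concrete, here is a hypothetical model of the settings entered in this dialog: a target, a timeout, and a mapping from the cluster-side port to the local container port. The class and field names are illustrative only and don’t correspond to Gefyra’s API; the example uses port 5002 because that is where the backend listens in the manifest.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class BridgeSettings:
    """Hypothetical bridge configuration (not Gefyra's API)."""

    target: str                   # e.g. the pod whose traffic is redirected
    timeout_seconds: int = 60
    port_map: Dict[int, int] = field(default_factory=dict)  # cluster port -> local port

    def local_port_for(self, cluster_port: int) -> int:
        """Return the local port that receives traffic sent to `cluster_port`."""
        try:
            return self.port_map[cluster_port]
        except KeyError:
            raise ValueError(f"port {cluster_port} is not bridged") from None


settings = BridgeSettings(target="pod/backend", port_map={5002: 5002})
print(settings.local_port_for(5002))  # 5002
```

If the local container listened on, say, 8080 instead, the mapping would become {5002: 8080}, while the frontend would keep using the unchanged SVC_URL.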

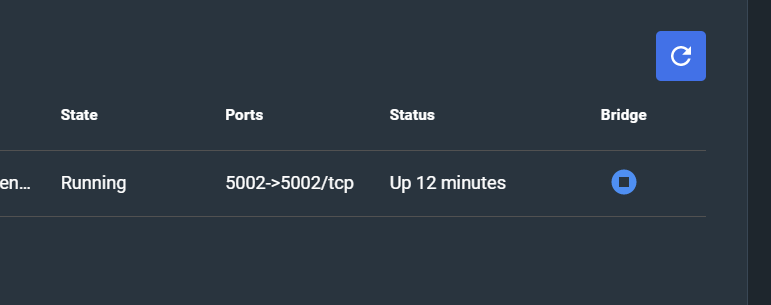

Observe the change in the icon of the Bridge button, which now depicts a stop symbol (Figure 23). This means the bridge function is operational and can be terminated by clicking this button again.

At this point, the local code is able to handle requests from the frontend process in the cluster using the URL stored in the SVC_URL variable. This can be done without making any changes to the frontend process itself.

To confirm this, you can open http://localhost in your browser (which is served from the Kubernetes of Docker Desktop) and check that the output is green. This is because the local code is returning the value green for the color property. You can change this value to any valid one in your IDE, and it will be immediately reflected in the cluster. This is the amazing power of this tool.

Remember to release the bridge of your container once you are finished making changes to your backend. This will reset the cluster to its original state, and the frontend will display the original “beautiful” blue H1 again.

This approach lets us intercept containers running in Kubernetes with our local code without changing the workloads deployed there. We temporarily intercepted a container running in Kubernetes with our local code and released that intercept afterward.

Conclusion

Gefyra is an easy-to-use Docker Desktop extension that connects with Kubernetes to improve development workflows and team collaboration. It lets you run containers as usual while being connected with Kubernetes, thereby saving time and ensuring high dev/prod parity.

The Blueshoe development team would appreciate a star on GitHub and welcomes you to join their Discord community for more information.

About the Author

Michael Schilonka is a strong believer that Kubernetes can be a software development platform, too. He is the co-founder and managing director of the Munich-based agency Blueshoe and the technical lead of Gefyra and Getdeck. He talks about Kubernetes in general and how they are using Kubernetes for development. Follow him on LinkedIn to stay connected.