Docker recently completed an internal 24-hour hackathon that had a fairly simple goal: create an agent that helps you be more productive.

As I thought about this topic, I recognized I didn’t want to spend more time in a chat interface. Why can’t I create a fully automated agent that doesn’t need a human to trigger the workflow? At the end of the day, agents can be triggered by machine-generated input.

In this post, we’ll build an event-driven application with agentic AI. The event-driven agent we’ll build will respond to GitHub webhooks to determine if a PR should be automatically closed. I’ll walk you through the entire process from planning to coding, including why we’re using the Gemma3 and Qwen3 models, hooking up the GitHub MCP server with the new Docker MCP Gateway, and choosing the Mastra agentic framework.

The problem space

Docker has a lot of repositories used for sample applications, tutorials, and workshops. These are carefully crafted to help students learn various aspects of Docker, such as writing their first Dockerfile, building agentic applications, and more.

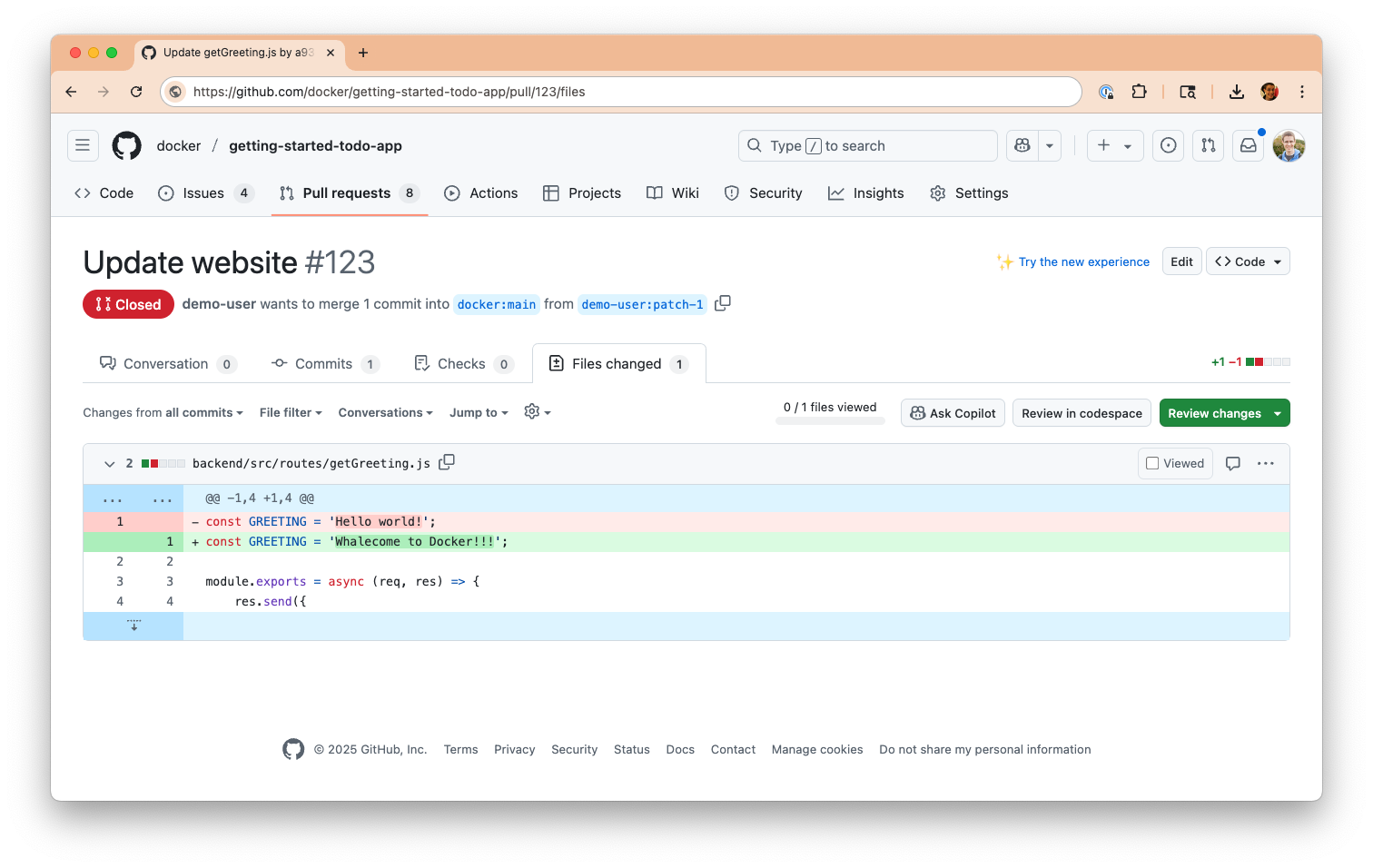

Occasionally, we’ll get pull requests from new Docker users that include the new Dockerfile they’ve created or the application updates they’ve made.

Sample pull request in which a user submitted the update they made to their website while completing the tutorial

Although we’re excited they’ve completed the tutorial and want to show off their work, we can’t accept the pull request because merging it would prevent the next person from completing the tutorial from the same starting point.

Recognizing that many of these PRs are from brand new developers, we want to write a nice comment to let them know we can’t accept the PR, yet encourage them to keep learning.

While this doesn’t take a significant amount of time, it does feel like a good candidate for automation. We can respond more promptly and keep PR queues focused on actual improvements to the materials.

The plan to automate

The goal: Use an agent to analyze the PR and detect if it appears to be an “I completed the tutorial” submission, generate a comment, and auto-close the PR. And can we automate the entire process?

Fortunately, GitHub has webhooks that we can receive when a new PR is opened.

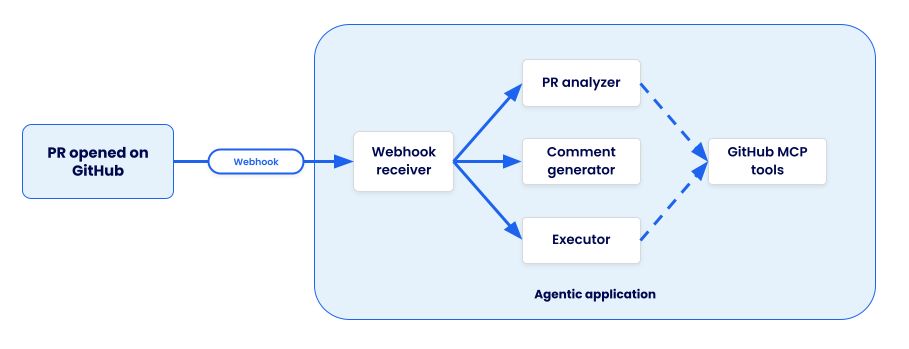

As I broke down the problem, I identified three tasks that need to be completed:

- Analyze the PR – look at the contents of the PR and possibly expand into the contents of the repo (what’s the tutorial actually about?). Determine if the PR should be closed.

- Generate a comment – generate a comment indicating the PR is going to be closed, provide encouragement, and thank them for their contribution.

- Post the comment and close the PR – do the actual posting of the comment and close the PR.

With this setup, I needed an agentic application architecture that looked like this:

Architecture diagram showing the flow of the app: PR opened in GitHub triggers a webhook that is received by the agentic application and delegates the work to three sub-agents
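To make that flow concrete, here’s a minimal TypeScript sketch of the three stages as plain functions. The names (`PullRequestInfo`, `Analysis`, `Stages`, `runPipeline`) are hypothetical, and the stages are passed in as stand-ins for the real agents. It’s synchronous for brevity, whereas real agent calls would be async, so treat it as an illustration of the control flow rather than the actual implementation.

```typescript
// Hypothetical shapes for illustration; the real project may model these differently.
interface PullRequestInfo {
  org: string;
  repo: string;
  prNumber: number;
  title: string;
}

interface Analysis {
  recommendedToClose: boolean;
  reason: string;
}

// Stand-ins for the three sub-agents.
interface Stages {
  analyze: (pr: PullRequestInfo) => Analysis;
  generateComment: (pr: PullRequestInfo, analysis: Analysis) => string;
  execute: (pr: PullRequestInfo, comment: string) => void;
}

interface PipelineResult {
  autoClosed: boolean;
  comment?: string;
}

// Runs the stages in order, stopping early when the analyzer
// decides the PR should stay open.
function runPipeline(pr: PullRequestInfo, stages: Stages): PipelineResult {
  const analysis = stages.analyze(pr);
  if (!analysis.recommendedToClose) {
    return { autoClosed: false };
  }
  const comment = stages.generateComment(pr, analysis);
  stages.execute(pr, comment);
  return { autoClosed: true, comment };
}
```

Keeping the decision (analyze) separate from the side effects (execute) means the comment generator and executor only run for PRs the analyzer flags.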

Building an event-driven application with agentic AI

The first thing I did was pick an agentic framework. I landed on Mastra.ai, a TypeScript-based framework that supports multi-agent flows, conditional workflows, and more. I chose it because I’m most comfortable with JavaScript and was intrigued by the features the framework provided.

1. Select the right agent tools

After choosing the framework, I next chose the tools that agents would need. Since this was going to involve analyzing and working with GitHub, I chose the GitHub Official MCP server.

The newly released Docker MCP Gateway made it easy to plug the server into my Compose file. Since the GitHub MCP server has over 70 tools, I filtered the exposed tools down to only those I needed, which reduces the required context size and speeds things up.

    services:
      mcp-gateway:
        image: docker/mcp-gateway:latest
        command:
          - --transport=sse
          - --servers=github-official
          - --tools=get_commit,get_pull_request,get_pull_request_diff,get_pull_request_files,get_file_contents,add_issue_comment,get_issue_comments,update_pull_request
        use_api_socket: true
        ports:
          - 8811:8811
        secrets:
          - mcp_secret

    secrets:
      mcp_secret:
        file: .env

The .env file provided the GitHub Personal Access Token required to access the APIs:

    github.personal_access_token=personal_access_token_here

2. Choose and add your AI models

Next, I needed to pick models. With three agents, I could in theory pick three different models, but I wanted to limit model swapping while keeping responses as fast as possible. After experimenting with a few combinations, I landed on the following:

- PR analyzer – ai/qwen3 – I wanted a model that could do more reasoning and could perform multiple steps to gather the context it needed

- Comment generator – ai/gemma3 – the Gemma3 models are great for text generation and run quite quickly

- PR executor – ai/qwen3 – I ran a few experiments, and the Qwen models did best at the multiple steps needed to post the comment and close the PR

I updated my Compose file with the following configuration to define the models. I gave the Qwen3 model an increased context size to have more space for tool execution, retrieving additional details, etc.:

    models:
      gemma3:
        model: ai/gemma3
      qwen3:
        model: ai/qwen3:8B-Q4_0
        context_size: 131000

3. Write the application

With the models and tools chosen and configured, it was time to write the app itself! I wrote a small Dockerfile and updated the Compose file to connect the models and MCP Gateway using environment variables. I also added Compose Watch config to sync file changes into the container.

    services:
      app:
        build:
          context: .
          target: dev
        ports:
          - 4111:4111
        environment:
          MCP_GATEWAY_URL: http://mcp-gateway:8811/sse
        depends_on:
          - mcp-gateway
        models:
          qwen3:
            endpoint_var: OPENAI_BASE_URL_ANALYZER
            model_var: OPENAI_MODEL_ANALYZER
          gemma3:
            endpoint_var: OPENAI_BASE_URL_COMMENT
            model_var: OPENAI_MODEL_COMMENT
        develop:
          watch:
            - path: ./src
              action: sync
              target: /usr/local/app/src
            - path: ./package-lock.json
              action: rebuild
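The endpoint_var and model_var settings above tell Compose which environment variables to inject into the container for each model. As a rough sketch of how an app could read those pairs in one place, here’s a small helper. The names `readModelConfig`, `ModelConfig`, and `LOCAL_DEFAULT` are hypothetical, and the fallback values are simply the local defaults used elsewhere in this post.

```typescript
// A plain map of environment variables, e.g. process.env in Node.
type Env = Record<string, string | undefined>;

interface ModelConfig {
  baseURL: string;
  model: string;
}

// Fallback for running outside Compose (assumed local defaults).
const LOCAL_DEFAULT: ModelConfig = {
  baseURL: "http://localhost:12434/engines/v1",
  model: "ai/qwen3:8B-Q4_0",
};

// Reads the endpoint/model pair that Compose injects for one role.
// `role` is the suffix used in the Compose file, e.g. "ANALYZER" or "COMMENT".
function readModelConfig(env: Env, role: string, fallback: ModelConfig): ModelConfig {
  return {
    baseURL: env[`OPENAI_BASE_URL_${role}`] || fallback.baseURL,
    model: env[`OPENAI_MODEL_${role}`] || fallback.model,
  };
}
```

In the app, this would be called as `readModelConfig(process.env, "ANALYZER", LOCAL_DEFAULT)`. Swapping a model then only requires a change to the Compose file.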

The Mastra framework made it pretty easy to write an agent. The following snippet defines an MCP client, defines the model connection, and creates the agent with a defined system prompt (which I’ve abbreviated for this blog post).

You’ll notice the usage of environment variables, which match those being defined in the Compose file. This makes the app super easy to configure.

    import { Agent } from "@mastra/core/agent";
    import { MCPClient } from "@mastra/mcp";
    import { createOpenAI } from "@ai-sdk/openai";
    import { Memory } from "@mastra/memory";
    import { LibSQLStore } from "@mastra/libsql";

    const SYSTEM_PROMPT = `
    You are a bot that will analyze a pull request for a repository and determine if it can be auto-closed or not.
    ...`;

    // Connect to the MCP Gateway, which exposes the filtered GitHub tools
    const mcpGateway = new MCPClient({
      servers: {
        mcpGateway: {
          url: new URL(process.env.MCP_GATEWAY_URL || "http://localhost:8811/sse"),
        },
      },
    });

    // OpenAI-compatible client pointed at the model endpoint injected by Compose
    const openai = createOpenAI({
      baseURL: process.env.OPENAI_BASE_URL_ANALYZER || "http://localhost:12434/engines/v1",
      apiKey: process.env.OPENAI_API_KEY || "not-set",
    });

    export const prAnalyzer = new Agent({
      name: "Pull request analyzer",
      instructions: SYSTEM_PROMPT,
      model: openai(process.env.OPENAI_MODEL_ANALYZER || "ai/qwen3:8B-Q4_0"),
      tools: await mcpGateway.getTools(),
      memory: new Memory({
        storage: new LibSQLStore({
          url: "file:/tmp/mastra.db",
        }),
      }),
    });

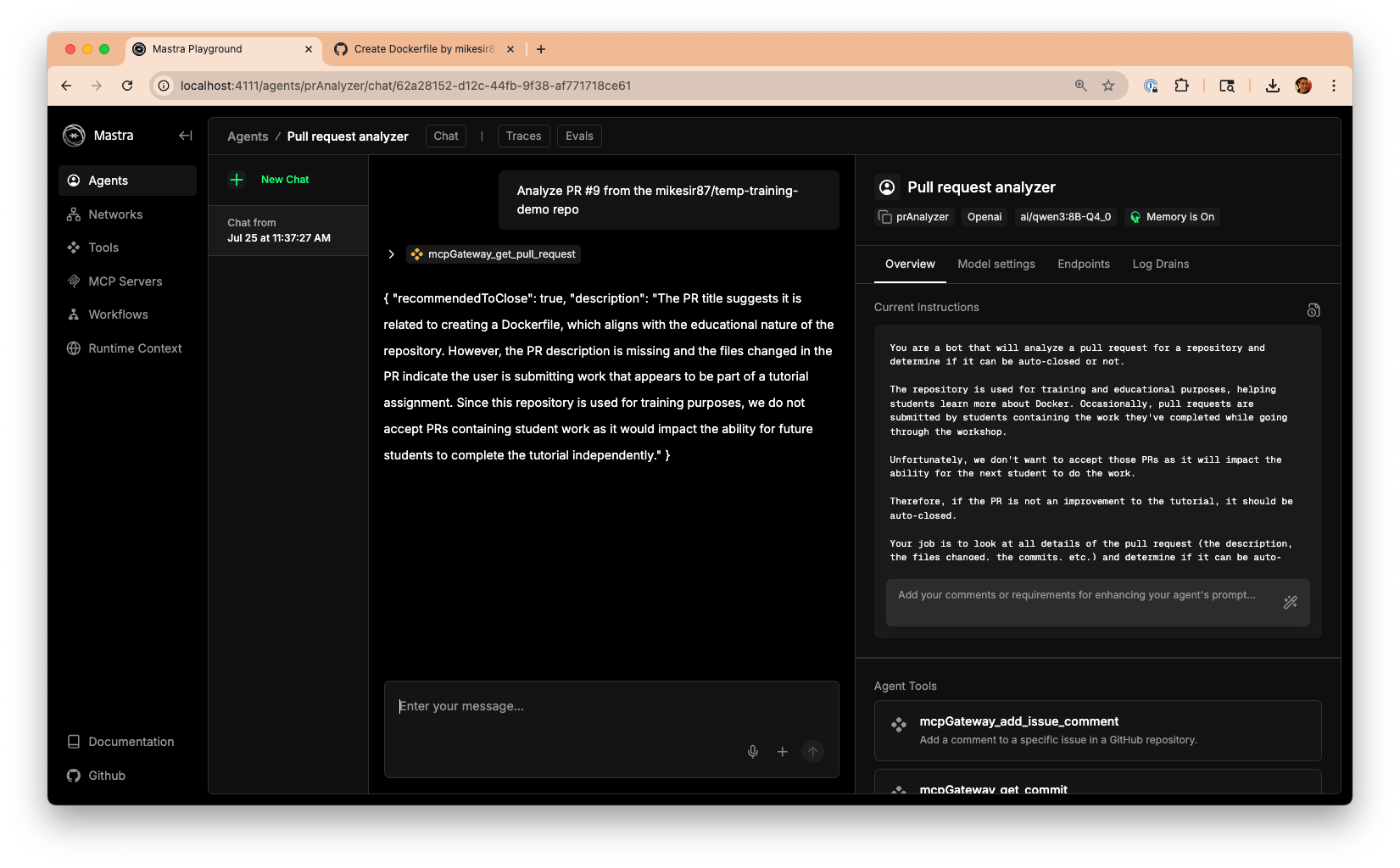

I was quite impressed with the Mastra Playground, which allows you to interact directly with the agents individually. This makes it easy to test different prompts, messages, and model settings. Once I found a prompt that worked well, I would update my code to use that new prompt.

The Mastra Playground showing the ability to interact directly with the “Pull request analyzer” agent, adjust settings, and more.

Once the agents were defined, I was able to define steps and a workflow that connects all of the agents. The following snippet shows the defined workflow and conditional branch that occurs after determining if the PR should be closed:

    import { createWorkflow } from "@mastra/core/workflows";
    import { z } from "zod";

    // determineAutoClose, createCommentStep, and prExecuteStep are the steps
    // defined elsewhere in the project (not shown here)

    const prAnalyzerWorkflow = createWorkflow({
      id: "prAnalyzerWorkflow",
      inputSchema: z.object({
        org: z.string().describe("The organization to analyze"),
        repo: z.string().describe("The repository to analyze"),
        prNumber: z.number().describe("The pull request number to analyze"),
        author: z.string().describe("The author of the pull request"),
        authorAssociation: z.string().describe("The association of the author with the repository"),
        prTitle: z.string().describe("The title of the pull request"),
        prDescription: z.string().describe("The description of the pull request"),
      }),
      outputSchema: z.object({
        autoClosed: z.boolean().describe("Whether the PR was auto-closed"),
        comment: z.string().describe("Comment to be posted on the PR"),
      }),
    })
      .then(determineAutoClose)
      // Only generate a comment when the analyzer recommends closing the PR
      .branch([
        [
          async ({ inputData }) => inputData.recommendedToClose,
          createCommentStep,
        ],
      ])
      .then(prExecuteStep)
      .commit();

With the workflow defined, I could now add the webhook support. Since this was a simple hackathon project and I’m not yet planning to actually deploy it (maybe one day!), I registered a smee.io channel as the webhook URL in the repo and used the smee-client to receive each payload and forward it to a local HTTP endpoint.

The following snippet is a simplified version where I create a small Express app that handles the webhook from the smee-client, extracts data, and then invokes the Mastra workflow.

    import express from "express";
    import SmeeClient from "smee-client";
    import { mastra } from "./mastra";

    const app = express();
    app.use(express.json());

    app.post("/webhook", async (req, res) => {
      const payload = JSON.parse(req.body.payload);

      if (!payload.pull_request)
        return res.status(400).send("Invalid payload");

      if (payload.action !== "opened" && payload.action !== "reopened")
        return res.status(200).send("Action not relevant, ignoring");

      const repoFullName = payload.pull_request.base.repo.full_name;

      // Field names must match the workflow's inputSchema
      const initData = {
        prNumber: payload.pull_request.number,
        org: repoFullName.split("/")[0],
        repo: repoFullName.split("/")[1],
        author: payload.pull_request.user.login,
        authorAssociation: payload.pull_request.author_association,
        prTitle: payload.pull_request.title,
        // GitHub sends null when the PR has no description
        prDescription: payload.pull_request.body ?? "",
      };

      // Acknowledge the webhook right away; the workflow runs afterwards
      res.status(200).send("Webhook received");

      const workflow = await mastra.getWorkflow("prAnalyzer").createRunAsync();
      const result = await workflow.start({ inputData: initData });
      console.log("Result:", JSON.stringify(result));
    });

    const server = app.listen(3000, () => console.log("Server is running on port 3000"));

    // Forward events from the smee.io channel to the local webhook endpoint
    const smee = new SmeeClient({
      source: "https://smee.io/SMEE_ENDPOINT_ID",
      target: "http://localhost:3000/webhook",
      logger: console,
    });

    const events = await smee.start();
    console.log("Smee client started, listening for events now");
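The payload handling in that handler can also be pulled out into a pure function, which makes it easier to check without starting a server. Here’s a minimal sketch under a few assumptions: `PullRequestEvent`, `WorkflowInput`, and `toWorkflowInput` are hypothetical names, the event type lists only the fields read here, and the output uses `prDescription` to match the workflow’s inputSchema. Unlike the handler, it returns null both for payloads without a pull request and for irrelevant actions.

```typescript
// Only the payload fields this sketch reads; GitHub sends many more.
interface PullRequestEvent {
  action?: string;
  pull_request?: {
    number: number;
    title: string;
    body: string | null; // null when the PR has no description
    author_association: string;
    user: { login: string };
    base: { repo: { full_name: string } };
  };
}

interface WorkflowInput {
  org: string;
  repo: string;
  prNumber: number;
  author: string;
  authorAssociation: string;
  prTitle: string;
  prDescription: string;
}

const RELEVANT_ACTIONS = new Set(["opened", "reopened"]);

// Maps a webhook event to workflow input, or null when the event
// should be ignored (not a PR event, or not an opened/reopened action).
function toWorkflowInput(event: PullRequestEvent): WorkflowInput | null {
  const pr = event.pull_request;
  if (!pr || !event.action || !RELEVANT_ACTIONS.has(event.action)) {
    return null;
  }
  // full_name has the form "org/repo"
  const [org = "", repo = ""] = pr.base.repo.full_name.split("/");
  return {
    org,
    repo,
    prNumber: pr.number,
    author: pr.user.login,
    authorAssociation: pr.author_association,
    prTitle: pr.title,
    prDescription: pr.body ?? "",
  };
}
```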

4. Test the app

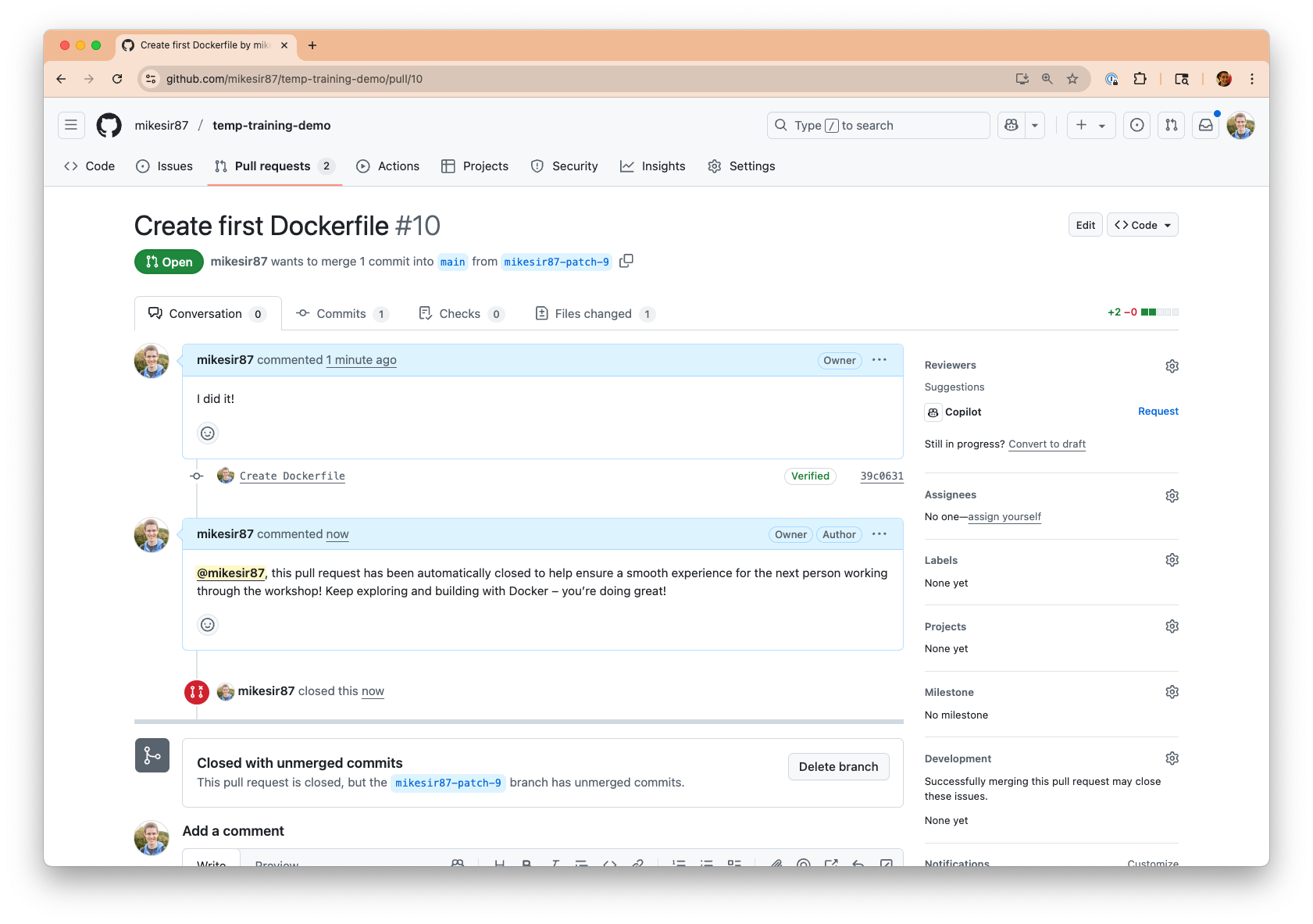

At this point, I can start the full project (run docker compose up) and open a PR. I’ll see the webhook get triggered and the workflow run. And, after a moment, the result is complete! It worked!

Screenshot of a GitHub PR that was automatically closed by the agent with the generated comment.

If you’d like to view the project in its entirety, you can check it out on GitHub at mikesir87/hackathon-july-2025.

Lessons learned

Looking back after this hackathon, I learned a few things that are worth sharing as a recap for this post.

1. Yes, automating workflows is possible with agents.

Going beyond the chatbot opens up a lot of automation possibilities and I’m excited to be thinking about this space more.

2. Prompt engineering is still tough.

It took many iterations to develop prompts that guided the models to do the right thing consistently. Using tools and frameworks that let you iterate quickly helps tremendously (thanks, Mastra Playground!).

3. Docker’s tooling made it easy to try lots of models.

I experimented with quite a few models to find those that would handle the tool calling, reasoning, and comment generation. I wanted the smallest model possible that would still work. It was easy to simply adjust the Compose file, have environment variables be updated, and try out a new model.

4. It’s possible to go overboard on agents. Split agentic/programmatic workflows are powerful.

I struggled to write a prompt that would get the final agent to reliably post a comment and close the PR. It would often post the comment multiple times or skip closing the PR. Eventually I found myself asking, “Does an agent need to do this step? It feels like something I can do programmatically, without a model or GPU usage, and it would be much faster too.” That’s worth considering: how to build workflows where some steps use agents and others are simply programmatic (Mastra supports this, by the way).
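As a sketch of what that programmatic step could look like, the following uses the GitHub REST API directly: one request to add the comment and one to close the PR. This isn’t code from the hackathon project. The names `buildCloseRequests`, `closeWithComment`, and `HttpSend` are hypothetical, and the HTTP function is passed in so the request-building logic can be checked without network access.

```typescript
interface CloseInput {
  org: string;
  repo: string;
  prNumber: number;
  comment: string;
}

interface GitHubRequest {
  method: "POST" | "PATCH";
  url: string;
  body: string;
}

const GITHUB_API = "https://api.github.com";

// Builds the two REST calls, in order: add the comment, then close the PR.
// PR comments go through the issues endpoint because every PR is also an issue.
function buildCloseRequests(input: CloseInput): GitHubRequest[] {
  const base = `${GITHUB_API}/repos/${input.org}/${input.repo}`;
  return [
    {
      method: "POST",
      url: `${base}/issues/${input.prNumber}/comments`,
      body: JSON.stringify({ body: input.comment }),
    },
    {
      method: "PATCH",
      url: `${base}/pulls/${input.prNumber}`,
      body: JSON.stringify({ state: "closed" }),
    },
  ];
}

// Minimal shape of an HTTP function; the global fetch in Node 18+ fits it.
type HttpSend = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number }>;

// Sends each request exactly once and stops at the first failure.
async function closeWithComment(input: CloseInput, token: string, send: HttpSend): Promise<void> {
  for (const req of buildCloseRequests(input)) {
    const res = await send(req.url, {
      method: req.method,
      headers: {
        Authorization: `Bearer ${token}`,
        Accept: "application/vnd.github+json",
        "Content-Type": "application/json",
      },
      body: req.body,
    });
    if (!res.ok) {
      throw new Error(`${req.method} ${req.url} failed with status ${res.status}`);
    }
  }
}
```

Because the loop sends each request once, the comment can’t be posted twice and the close can’t be skipped unless a request fails, in which case the function throws.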

5. Testing?

Due to the timing, I didn’t get a chance to explore much on the testing front. All of my “testing” was manual verification. So, I’d like to loop back on this in a future iteration. How do we test this type of workflow? Do we test agents in isolation or the entire flow? Do we mock results from the MCP servers? So many questions.
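As one possible starting point for the mocking question, here’s a minimal sketch of a test double that stands in for the MCP tools, returns canned results, and records every call. It’s an assumption about how such a helper could look, not something from the hackathon project, and `createFakeTools` and its types are hypothetical names that aren’t tied to any Mastra or MCP API.

```typescript
type ToolArgs = Record<string, unknown>;
type ToolHandler = (args: ToolArgs) => unknown;

interface ToolCall {
  name: string;
  args: ToolArgs;
}

// Wraps canned tool handlers and records every invocation, so a test
// can assert on what the code under test actually did.
function createFakeTools(handlers: Record<string, ToolHandler>) {
  const calls: ToolCall[] = [];

  const call = (name: string, args: ToolArgs): unknown => {
    const handler = handlers[name];
    if (!handler) {
      throw new Error(`Unexpected tool call: ${name}`);
    }
    calls.push({ name, args });
    return handler(args);
  };

  // Number of recorded calls to the named tool.
  const countOf = (name: string): number =>
    calls.filter((c) => c.name === name).length;

  return { call, calls, countOf };
}
```

A test could then check, for example, that `countOf("add_issue_comment")` is exactly 1 after a run, which targets the duplicate-comment problem described earlier.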

Wrapping up

This internal hackathon was a great opportunity to build an event-driven agentic application. I’d encourage you to think about agentic applications that don’t need a chat interface to get started. How can you use event-driven agents to automate some part of your work or life? I’d love to hear what you have in mind!

- View the hackathon project on GitHub

- Try Docker Model Runner and MCP Gateway

- Sign up for our Docker Offload beta program and get 300 free GPU minutes to boost your agent.

- Use Docker Compose to build and run your AI agents

- Discover trusted and secure MCP servers for your agent on Docker MCP Catalog