Agentic applications – what actually are they, and how do we make them easier to build, test, and deploy? At WeAreDevelopers, we defined agentic apps as those that use LLMs to define execution workflows based on desired goals, with access to your tools, data, and systems.

While this application stack introduces new elements, many aspects feel familiar. In fact, many of the problems teams faced with microservices are resurfacing as agentic applications evolve.

Therefore, we feel strongly that teams should be able to use the same processes and tools they already know, just with the new agentic stack. Over the past few months, we’ve been evolving Docker’s tooling to make this a reality, and we were excited to share it with the world at WeAreDevelopers.

Let’s unpack those announcements, as well as dive into a few other things we’ve been working on!

Docker Captain Alan Torrance from JPMC with Docker COO Mark Cavage and WeAreDevelopers organizers

WeAreDevelopers keynote announcements

Mark Cavage, Docker’s President and COO, and Tushar Jain, Docker’s EVP of Product and Engineering, took the stage for a keynote at WeAreDevelopers and shared several exciting new announcements – Compose for agentic applications, native Google Cloud support for Compose, and Docker Offload. Watch the keynote in its entirety here.

Docker EVP, Product & Engineering Tushar Jain delivering the keynote at WeAreDevelopers

Compose has evolved to support agentic applications

Agentic applications need three things – models, tools, and your custom code that glues it all together.

The Docker Model Runner provides the ability to download and run models.

The newly open-sourced MCP Gateway provides the ability to run containerized MCP servers, giving your application access to the tools it needs in a safe and secure manner.

With Compose, you can now define and connect all three in a single compose.yaml file!

Here’s an example of a Compose file bringing it all together:

```yaml
# Define the models
models:
  gemma3:
    model: ai/gemma3

services:
  # Define a MCP Gateway that will provide the tools needed for the app
  mcp-gateway:
    image: docker/mcp-gateway
    command: --transport=sse --servers=duckduckgo
    use_api_socket: true

  # Connect the models and tools with the app
  app:
    build: .
    models:
      gemma3:
        endpoint_var: OPENAI_BASE_URL
        model_var: OPENAI_MODEL
    environment:
      MCP_GATEWAY_URL: http://mcp-gateway:8811/sse
```

The application can leverage a variety of agentic frameworks – ADK, Agno, CrewAI, Vercel AI SDK, Spring AI, and more.
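Whatever framework you pick, the app itself only needs the connection details Compose hands it through the environment variables named in the Compose file (OPENAI_BASE_URL and OPENAI_MODEL via endpoint_var and model_var, plus MCP_GATEWAY_URL). The following is a minimal, framework-free sketch in Python using only the standard library. It assumes the URL injected through OPENAI_BASE_URL points at an OpenAI-compatible API root, so that /chat/completions can be appended to it. The helper names (AgentConfig, load_config, build_chat_request, ask) are hypothetical and not part of any Docker API.

```python
import json
import os
import urllib.request
from dataclasses import dataclass
from typing import Mapping

# Variable names declared in compose.yaml (endpoint_var, model_var, environment).
REQUIRED_VARS = ("OPENAI_BASE_URL", "OPENAI_MODEL", "MCP_GATEWAY_URL")


@dataclass(frozen=True)
class AgentConfig:
    """Hypothetical container for the connection details Compose injects."""
    base_url: str         # from OPENAI_BASE_URL (endpoint_var)
    model: str            # from OPENAI_MODEL (model_var)
    mcp_gateway_url: str  # from MCP_GATEWAY_URL (environment)


def load_config(env: Mapping[str, str] = os.environ) -> AgentConfig:
    """Read the injected variables, failing fast if any is missing or empty."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return AgentConfig(
        # Strip a trailing slash so path joining below is predictable.
        base_url=env["OPENAI_BASE_URL"].rstrip("/"),
        model=env["OPENAI_MODEL"],
        mcp_gateway_url=env["MCP_GATEWAY_URL"],
    )


def build_chat_request(cfg: AgentConfig, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    Assumption: the base URL is an OpenAI-compatible API root.
    """
    body = {
        "model": cfg.model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{cfg.base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(cfg: AgentConfig, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_chat_request(cfg, prompt), timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Inside the app container you would call ask(load_config(), "your prompt"). The sketch reads mcp_gateway_url but does not use it, because connecting to the gateway’s SSE endpoint is the job of whichever MCP client your agentic framework provides. In a real app, that framework (or an OpenAI-compatible client library) would normally make the HTTP call; the standard library is used here only to keep the sketch self-contained.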

Check out the newly released compose-for-agents sample repo for examples using a variety of frameworks and ideas.

Taking Compose to production with native cloud-provider support

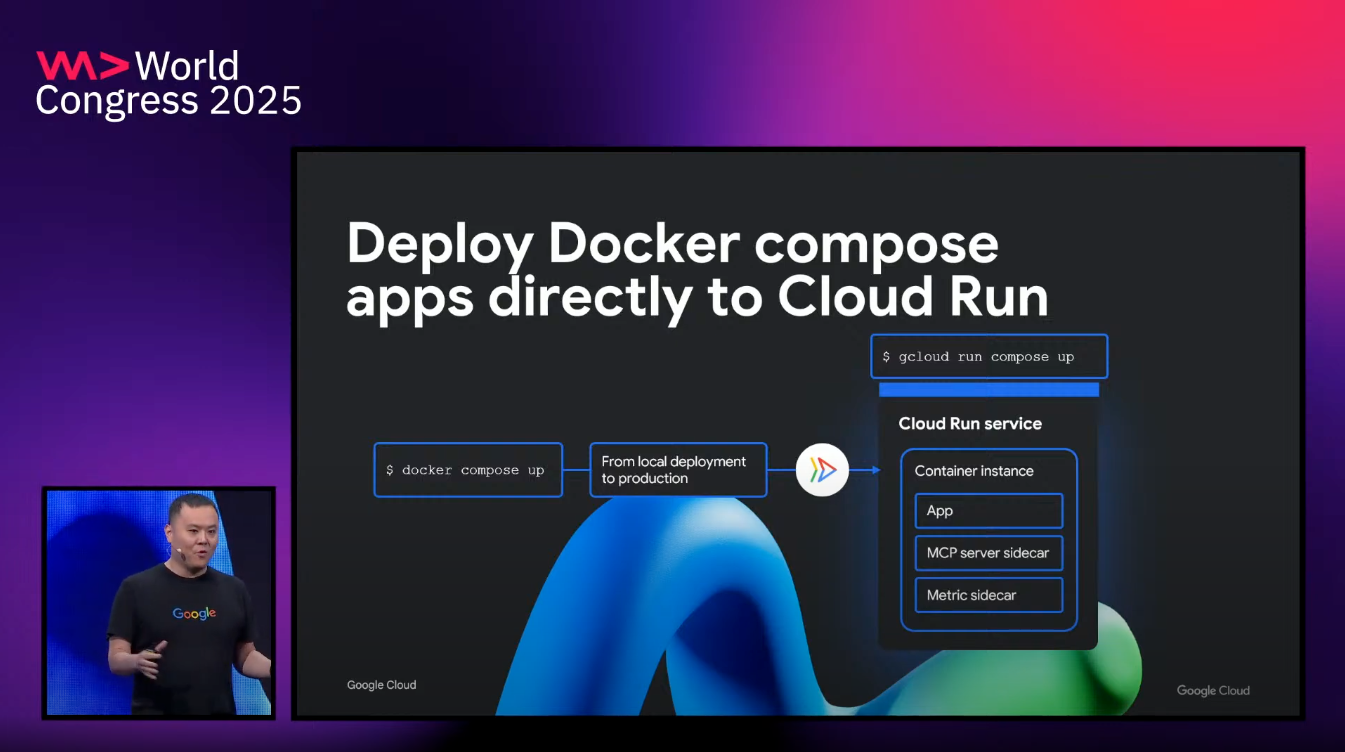

During the keynote, we shared the stage with Google Cloud’s Engineering Director Yunong Xiao to demo how you can now easily deploy your Compose-based applications with Google Cloud Run. With this capability, the same Compose specification works from dev to prod – no rewrites and no reconfig.

Google Cloud’s Engineering Director Yunong Xiao announcing the native Compose support in Cloud Run

With Google Cloud Run (via gcloud run compose up) and, soon, Microsoft Azure Container Apps, you can deploy apps to serverless platforms with ease. Cloud Run even supports the newly released models feature in Compose already!

Compose makes the entire journey from dev to production consistent, portable, and effortless – just the way applications should be.

Learn more about the Google Cloud Run support with their announcement post here.

Announcing Docker Offload – access to cloud-based compute resources and GPUs during development and testing

Running LLMs requires significant compute resources and large GPUs, and not every developer has access to those on their local machine. Docker Offload lets you run your containers and models on cloud resources while everything still feels local. Port publishing and bind mounts? It all just works.

No complex setup, no GPU shortages, no configuration headaches. It’s a simple toggle switch in Docker Desktop. Sign up for our beta program and get 300 free GPU minutes!

Getting hands-on with our new agentic Compose workshop

Selfie with attendees at the end of the workshop

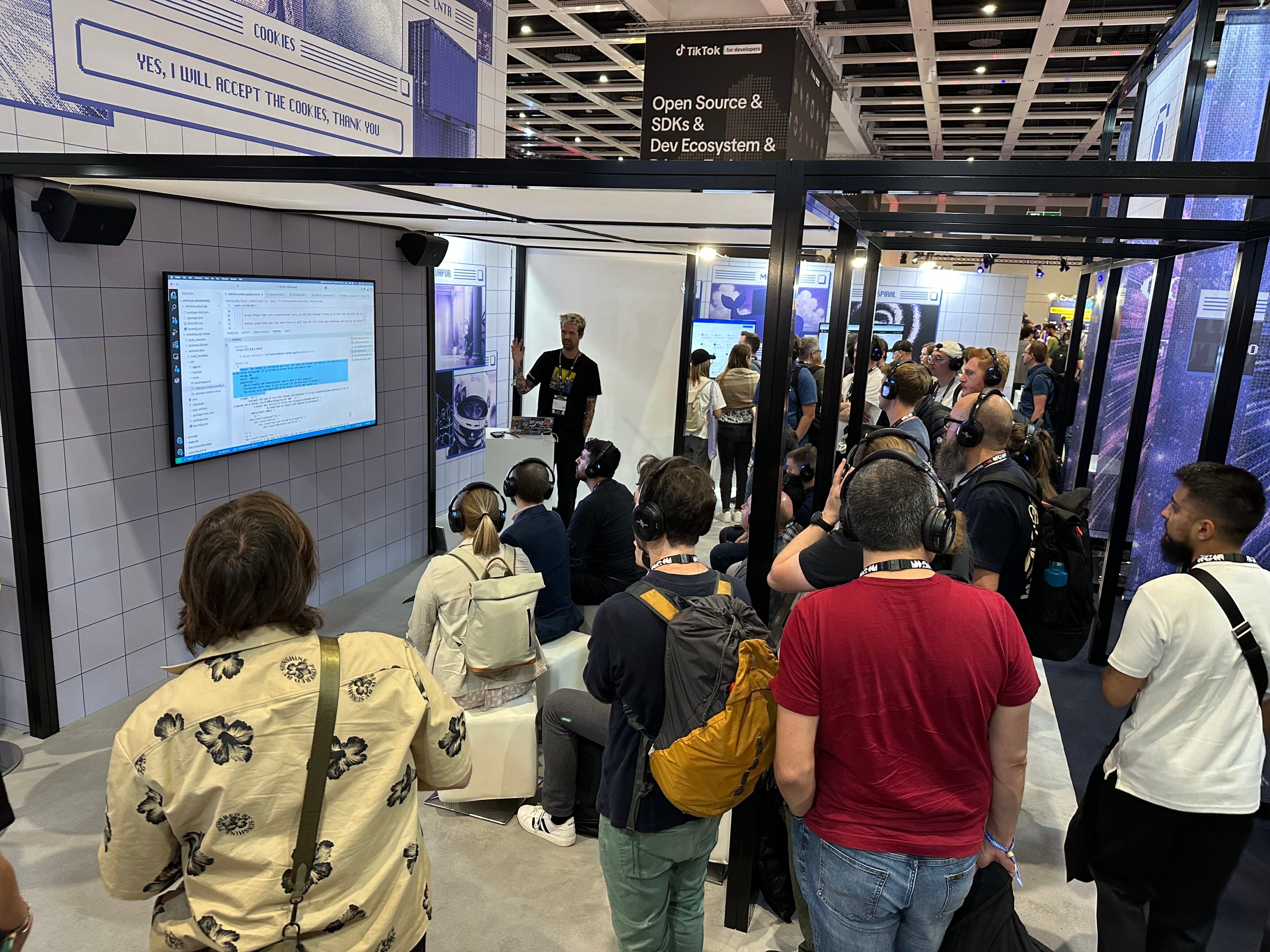

At WeAreDevelopers, we released and ran a workshop to enable everyone to get hands-on with the new Compose capabilities, and the response blew us away!

Every seat in the room was filled, and a line remained outside well into the workshop, with people hoping someone would leave early and free up a spot. But not a single person left early! It was thrilling to see attendees stay fully engaged for the entire workshop.

During the workshop, participants were able to learn about the agentic application stack, digging deep into models, tools, and agentic frameworks. They used Docker Model Runner, the Docker MCP Gateway, and the Compose integrations to package it all together.

Want to try the workshop yourself? Check it out on GitHub at dockersamples/workshop-agentic-compose.

Lightning talks that sparked ideas

Lightning talk on testing with LLMs in the Docker booth

In addition to the workshop, we hosted a rapid-fire series of lightning talks in our booth on a range of topics. These talks were intended to inspire additional use cases and ideas for agentic applications:

- Digging deep into the fundamentals of GenAI applications

- Going beyond the chatbot with event-driven agentic applications

- Using LLMs to perform semantic testing of applications and websites

- Using Gordon to build safer and more secure images with Docker Hardened Images

These talks made it clear: agentic apps aren’t just theory. They’re here, and Docker is at the center of how they get built.

Stay tuned for future blog posts that dig deeper into each of these topics.

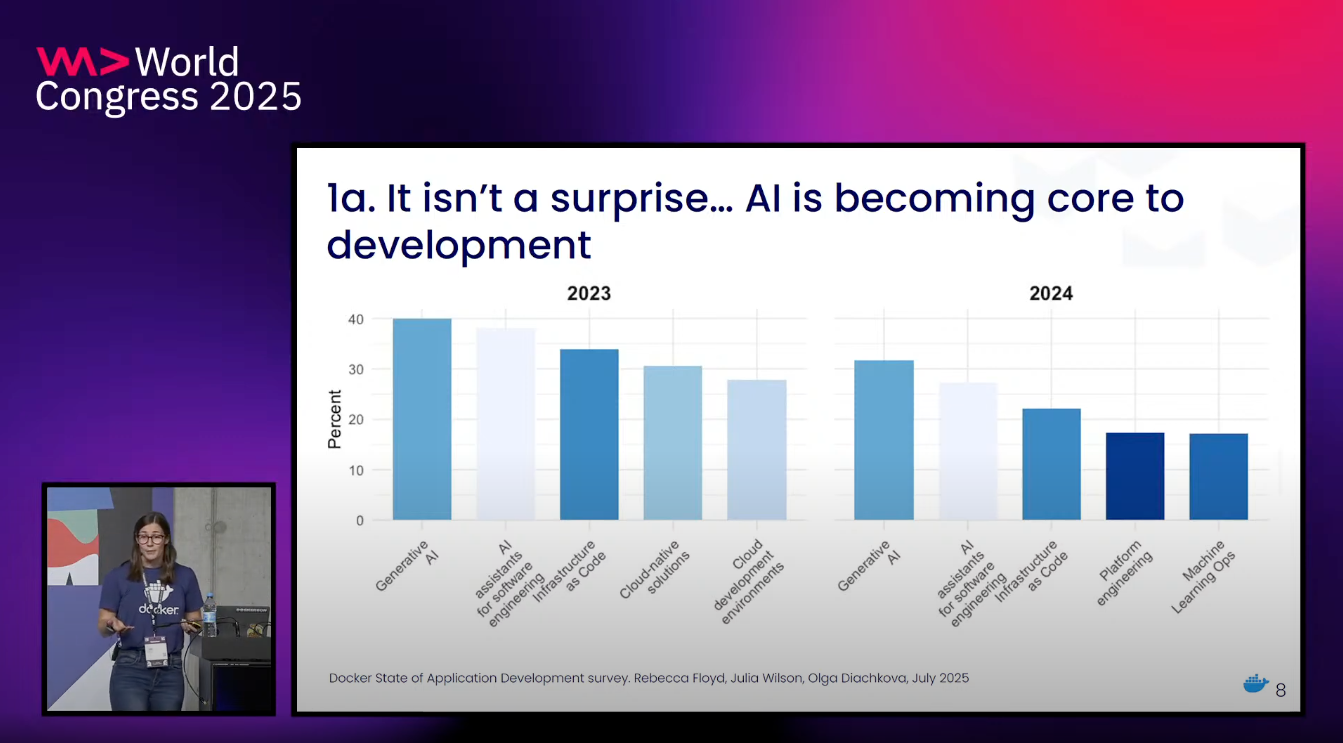

Sharing our industry insights and learnings

At WeAreDevelopers, our UX Research team presented findings and insights from analyzing the past three years of Docker-sponsored industry research. Interestingly, AI is already starting to influence language selection, attitudes toward trends like shift-left security, and more!

Julia Wilson on stage sharing insights from the Docker UX research team

To learn more about the insights, view the talk here.

Bringing a European powerhouse to North America

In addition to the product announcements, we announced a major co‑host partnership between Docker and WeAreDevelopers, launching WeAreDevelopers World Congress North America, set for September 2026. Personally, I’m super excited about this because WeAreDevelopers is a genuinely developer-first conference – it covers topics at all levels, has an incredibly fun and exciting atmosphere, hosts live coding hackathons, and helps developers find jobs and further their careers!

The 2026 WeAreDevelopers World Congress North America will mark the event’s first major expansion outside Europe. This creates a new, developer-first alternative to traditional enterprise-style conferences, with high-energy talks, live coding, and practical takeaways tailored to real builders.

Docker Captains Mohammad-Ali A’râbi (left) and Francesco Ciulla (right) in attendance with Docker Principal Product Manager Francesco Corti (center)

Try it, build it, contribute

We’re excited to support this next wave of AI-native applications. If you’re building with agentic AI, try out these tools in your workflow today. Agentic apps are complex, but with Docker, they don’t have to be hard. Let’s build cool stuff together.

- Sign up for our beta program and get 300 free GPU minutes!

- Use Docker Compose to build and run your AI agents

- Watch the keynote and a panel on securing the agentic workflow, and dive into insights from our annual developer survey here.

- Try Docker Model Runner and MCP Gateway