If you’re looking to bring a stack of old family photos back to life, check out Ubuntu’s demo on how to use OpenVINO on Ubuntu containers to colorize monochrome pictures. This magical use of containers, neural networks, and Kubernetes is packed with helpful resources and a fun way to dive into deep learning!

A version of Part 1 and Part 2 of this article was first published on Ubuntu’s blog.

Table of contents:

- OpenVINO on Ubuntu containers: making developers’ lives easier

- OpenVINO and Ubuntu container images

- Neural networks to colorize a black & white image

- Demo architecture

- Deployment with Kubernetes

OpenVINO on Ubuntu containers: making developers’ lives easier

If you’re curious about AI/ML and what you can do with OpenVINO on Ubuntu containers, this blog is an excellent read for you too.

Docker image security isn’t only about provenance and supply chains; it’s also about the user experience. More specifically, the developer experience.

Removing toil and friction from your app development, containerization, and deployment processes keeps developers from turning to untrusted sources or bad practices in the name of getting things done. As AI/ML development often requires complex dependencies, it’s the perfect proof point for secure and stable container images.

Why Ubuntu Docker images?

As the most popular container image in its category, the Ubuntu base image provides a seamless, easy-to-set-up experience. From public cloud hosts to IoT devices, the Ubuntu experience is consistent and loved by developers.

One of the main reasons for adopting Ubuntu-based container images is the software ecosystem. More than 30,000 packages are available in one “install” command, with the option to subscribe to enterprise support from Canonical. It just makes things easier.

In this blog, you’ll see that using Ubuntu Docker images greatly simplifies component containerization. We even used a prebuilt & preconfigured container image for the NGINX web server from the LTS images portfolio maintained by Canonical for up to 10 years.

Beyond providing a secure, stable, and consistent experience across container images, Ubuntu is a safe choice from bare metal servers to containers. Additionally, it comes with hardware optimization on clouds and on-premises, including Intel hardware.

Why OpenVINO?

When you’re ready to deploy deep learning inference in production, binary size and memory footprint are key considerations – especially when deploying at the edge. OpenVINO provides a lightweight Inference Engine with a binary size of just over 40MB for CPU-based inference. It also provides a Model Server for serving models at scale and managing deployments.

OpenVINO includes open-source developer tools to improve model inference performance. The first step is to convert a deep learning model (trained with TensorFlow, PyTorch, …) to an Intermediate Representation (IR) using the Model Optimizer. Converting the model from FP32 to FP16 precision at this stage cuts its memory usage roughly in half. You can unlock additional performance by using low-precision tools from OpenVINO. The Post-training Optimization Tool (POT) and Neural Network Compression Framework (NNCF) provide quantization, binarization, filter pruning, and sparsity algorithms. As a result, throughput increases on Intel CPUs, integrated GPUs, VPUs, and other accelerators.
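To make the FP32 to FP16 saving concrete, here is a minimal sketch using only Python’s standard library. It is not how the Model Optimizer works internally; it only shows that the same values take half the bytes in half precision, at the cost of some rounding. The weight values are made up for illustration.

```python
import struct

def packed_size(values, fmt):
    """Return the number of bytes needed to store `values` with a struct format code."""
    return len(struct.pack(f"<{len(values)}{fmt}", *values))

# Hypothetical weights, for illustration only.
weights = [0.0, 0.5, -1.25, 3.0, 0.1]

fp32_bytes = packed_size(weights, "f")  # "f" = 32-bit float, 4 bytes per value
fp16_bytes = packed_size(weights, "e")  # "e" = 16-bit float, 2 bytes per value

# Round-trip through FP16 to see the precision that is traded away.
fp16_packed = struct.pack(f"<{len(weights)}e", *weights)
fp16_roundtrip = struct.unpack(f"<{len(weights)}e", fp16_packed)

print(fp32_bytes, fp16_bytes)
print(fp16_roundtrip)
```

Values such as 0.5 survive the round trip exactly, while 0.1 comes back slightly off, which is the kind of small error a trained network usually tolerates.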

Open Model Zoo provides pre-trained models that work for real-world use cases to get you started quickly. Additionally, Python and C++ sample codes demonstrate how to interact with the model. More than 280 pre-trained models are available to download, from speech recognition to natural language processing and computer vision.

For this blog series, we will use the pre-trained colorization models from Open Model Zoo and serve them with Model Server.

OpenVINO and Ubuntu container images

The Model Server – by default – ships with the latest Ubuntu LTS, providing a consistent development environment and an easy-to-layer base image. The OpenVINO tools are also available as prebuilt development and runtime container images.

To learn more about Canonical LTS Docker Images and OpenVINO™, read:

- Intel and Canonical to secure containers software supply chain – Ubuntu blog

- OpenVINO Documentation – OpenVINO™

- Webinar: Secure AI deployments at the edge – Canonical and Intel

Neural networks to colorize a black & white image

Now, back to the matter at hand: how will we colorize grandma and grandpa’s old pictures? Thanks to Open Model Zoo, we won’t have to train a neural network ourselves and will only focus on the deployment. (You can still read about it.)

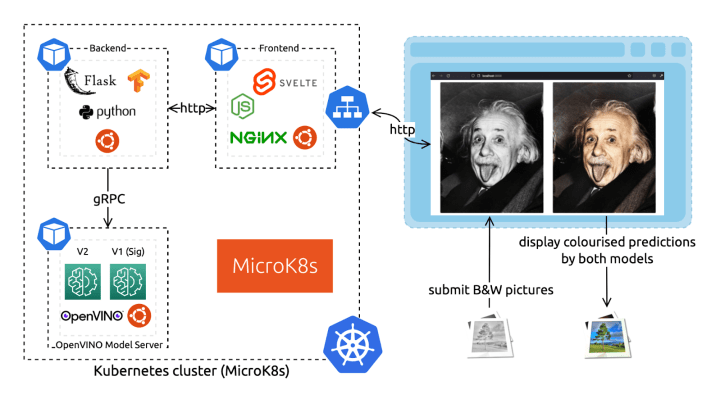

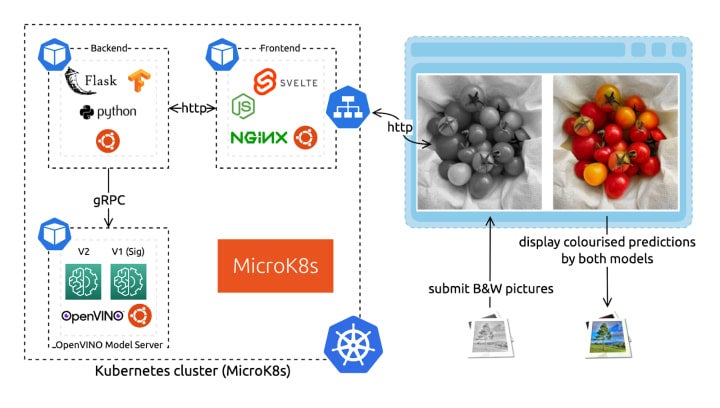

Our architecture consists of three microservices: a backend, a frontend, and the OpenVINO Model Server (OVMS) to serve the neural network predictions. The Model Server component hosts two different demonstration neural networks to compare their results (V1 and V2). These components all use the Ubuntu base image for a consistent software ecosystem and containerized environment.

A few reads if you’re not familiar with this type of microservices architecture:

gRPC vs REST APIs

The OpenVINO Model Server provides inference as a service via HTTP/REST and gRPC endpoints for serving models in OpenVINO IR or ONNX format. It also offers centralized model management to serve multiple different models or different versions of the same model and model pipelines.

The server offers two sets of APIs to interface with it: REST and gRPC. Both APIs are compatible with TensorFlow Serving and expose endpoints for prediction, checking model metadata, and monitoring model status. For use cases where low latency and high throughput are needed, you’ll probably want to interact with the model server via the gRPC API. Indeed, it introduces a significantly smaller overhead than REST. (Read more about gRPC.)
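As a rough illustration of the REST side, the sketch below builds the TensorFlow Serving style URLs and a prediction payload without sending anything. The host, port, and model name are assumptions made for the example, not values taken from the demo.

```python
import json

def rest_url(host, port, model, action="predict", version=None):
    """Build a TensorFlow Serving style REST URL for a served model."""
    base = f"http://{host}:{port}/v1/models/{model}"
    if version is not None:
        base += f"/versions/{version}"
    if action == "predict":
        return base + ":predict"
    if action == "metadata":
        return base + "/metadata"
    if action == "status":
        return base
    raise ValueError(f"unknown action: {action}")

def predict_body(instances):
    """Serialize inputs in the row-oriented ("instances") request format."""
    return json.dumps({"instances": instances})

# Hypothetical endpoint and model name, for illustration only.
print(rest_url("localhost", 8000, "colorization"))
print(rest_url("localhost", 8000, "colorization", action="metadata"))
print(predict_body([[0.0, 1.0]]))
```

With gRPC, the same prediction travels as a binary protobuf message instead of JSON text, which is where most of the overhead difference comes from.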

OpenVINO Model Server is distributed as a Docker image with minimal dependencies. For this demo, we will use the Model Server container image deployed to a MicroK8s cluster. This combination of lightweight technologies is suitable for small deployments. It suits edge computing devices, performing inferences where the data is being produced – for increased privacy, low latency, and low network usage.

Ubuntu minimal container images

Since 2019, the Ubuntu base images have been minimal, with no “slim” flavors. While there’s room for improvement (stay tuned), the Ubuntu Docker image is a less than 30MB download, making it one of the tiniest Linux distributions available on containers.

In terms of Docker image security, size is one thing, and reducing the attack surface is a fair investment. However, as is often the case, size isn’t everything. In fact, maintenance is the most critical aspect. The Ubuntu base image, with its rich and active software ecosystem community, is usually a safer bet than smaller distributions.

A common trap is to start smaller and install loads of dependencies from many different sources. The end result will have poor performance, use non-optimized dependencies, and not be secure. You probably don’t want to end up effectively maintaining your own Linux distribution … So, let us do it for you.

Demo architecture

“As a user, I can drag and drop black and white pictures to the browser so that it displays their ready-to-download colorized version.” – said the PM (me).

For that – replied the one-time software engineer (still me) – we only need:

- A fancy yet lightweight frontend component.

- OpenVINO™ Model Server to serve the neural network colorization predictions.

- A very light backend component.

Whilst we could target the Model Server directly with the frontend (it exposes a REST API), we need to apply transformations to the submitted image. The colorization models, in fact, each expect a specific input.

Finally, we’ll deploy these three services with Kubernetes because … well … because it’s groovy. And if you think otherwise (everyone is allowed to have a flaw or two), you’ll find a fully functional docker-compose.yaml in the source code repository.

In the upcoming sections, we will first look at each component and then show how to deploy them with Kubernetes using MicroK8s. Don’t worry; the full source code is freely available, and I’ll link you to the relevant parts.

Neural network – OpenVINO Model Server

The colorization neural network is published under the BSD 2-clause License, accessible from the Open Model Zoo. It’s pre-trained, so we don’t need to understand it in order to use it. However, let’s look closer to understand what input it expects. I also strongly encourage you to read the original work from Richard Zhang, Phillip Isola, and Alexei A. Efros. They made the approach super accessible and understandable on this website and in the original paper.

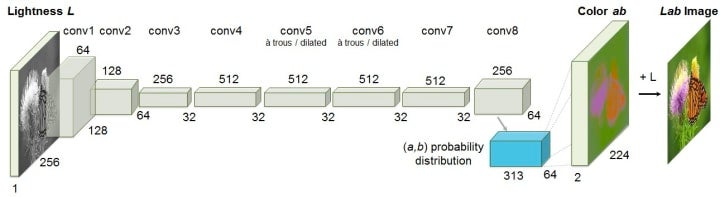

As you can see on the network architecture diagram, the neural network uses an unusual color space: LAB. There are many 3-dimensional spaces to code colors: RGB, HSL, HSV, etc. The LAB format is relevant here as it fully isolates the color information from the lightness information. Therefore, a grayscale image can be coded with only the L (for Lightness) axis. We will send only the L axis to the neural network’s input. It will generate predictions for the colors coded on the two remaining axes: A and B.
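For the curious, here is a small pure-Python sketch of how the L value of a single pixel can be derived from its RGB values, using the standard sRGB and CIE LAB formulas. The demo itself most likely relies on an image library for this step; the function names below are made up for the example.

```python
def srgb_to_linear(c):
    """Undo the sRGB gamma curve for one channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def lightness(r, g, b):
    """Return the CIE L* value (0 to 100) of an 8-bit sRGB pixel."""
    rl, gl, bl = (srgb_to_linear(v / 255.0) for v in (r, g, b))
    # Relative luminance (the Y of XYZ), with Y = 1 for the white point.
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    delta = 6 / 29
    f = y ** (1 / 3) if y > delta ** 3 else y / (3 * delta ** 2) + 4 / 29
    return 116 * f - 16

print(lightness(0, 0, 0))        # black
print(lightness(119, 119, 119))  # mid gray
print(lightness(255, 255, 255))  # white
```

For a gray pixel, the A and B axes are zero, so this single number carries everything the picture contains.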

From the architecture diagram, we can also see that the model expects a 256×256-pixel input. For these reasons, we cannot just send our RGB-coded grayscale picture in its original size to the network. We first need to transform it.
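The resizing step can be sketched in a few lines of plain Python. This version uses nearest-neighbor sampling on a nested list to stay dependency-free; a real backend would more likely use an image library with better interpolation. The batch and channel wrapping at the end is an assumption about the tensor layout the model expects.

```python
def resize_nearest(channel, out_h, out_w):
    """Resize a 2D list of values with nearest-neighbor sampling."""
    in_h, in_w = len(channel), len(channel[0])
    return [
        [channel[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]

def to_model_input(l_channel, size=256):
    """Resize the L channel and wrap it as (batch, channel, height, width)."""
    resized = resize_nearest(l_channel, size, size)
    return [[resized]]  # assumed layout: 1 x 1 x size x size

# Tiny hypothetical 2x2 "image" of L values.
small = [[10.0, 20.0], [30.0, 40.0]]
print(resize_nearest(small, 4, 4))
tensor = to_model_input(small)
print(len(tensor), len(tensor[0]), len(tensor[0][0]), len(tensor[0][0][0]))
```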

We compare the results of two different model versions for the demo. Let them be called ‘V1’ (Siggraph) and ‘V2’. The models are served with the same instance of the OpenVINO™ Model Server as two different models. (We could also have done it with two different versions of the same model – read more in the documentation.)
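Serving two models from one Model Server instance is typically done with a JSON configuration file that lists each model. The sketch below generates such a file from Python. The model names and paths are hypothetical and may differ from the ones used in the demo repository.

```python
import json

def model_server_config(models):
    """Build a Model Server configuration from a {name: base_path} mapping."""
    return {
        "model_config_list": [
            {"config": {"name": name, "base_path": path}}
            for name, path in models.items()
        ]
    }

# Hypothetical names and paths, for illustration only.
config = model_server_config({
    "colorization-v1": "/models/colorization-v1",
    "colorization-v2": "/models/colorization-v2",
})

print(json.dumps(config, indent=2))
```

Each base path is expected to contain numbered version subdirectories holding the model files, which is also what makes serving several versions of one model possible.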

Finally, to build the Docker image, we use a first stage based on the Ubuntu-based development kit to download and convert the model. We then rebase on the more lightweight Model Server image.

    # Dockerfile
    FROM openvino/ubuntu20_dev:latest AS omz
    # download and convert the model
    …
    FROM openvino/model_server:latest
    # copy the model files and configure the Model Server
    …

Backend – Ubuntu-based Flask app (Python)

For the backend microservice that interfaces between the user-facing frontend and the Model Server hosting the neural network, we chose to use Python. There are many valuable libraries to manipulate data, including images, specifically for machine learning applications. To provide web serving capabilities, Flask is an easy choice.

The backend takes an HTTP POST request with the to-be-colorized picture. It synchronously returns the colorized result using the neural network predictions. In between – as we’ve just seen – it needs to convert the input to match the model architecture and to prepare the output to show a displayable result.
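On the output side, the predicted A and B values have to be recombined with the original L value and converted back to RGB before the browser can display anything. Here is a per-pixel sketch of that conversion using the standard CIE LAB and sRGB formulas (D65 white point). It is written in plain Python for readability and is not the demo’s actual code.

```python
def lab_to_srgb(l, a, b):
    """Convert one CIE LAB pixel (D65 white point) to an 8-bit sRGB tuple."""
    delta = 6 / 29

    def f_inv(t):
        return t ** 3 if t > delta else 3 * delta ** 2 * (t - 4 / 29)

    # LAB -> XYZ
    fy = (l + 16) / 116
    x = 0.95047 * f_inv(fy + a / 500)
    y = 1.00000 * f_inv(fy)
    z = 1.08883 * f_inv(fy - b / 200)

    # XYZ -> linear sRGB
    linear = (
        3.2406 * x - 1.5372 * y - 0.4986 * z,
        -0.9689 * x + 1.8758 * y + 0.0415 * z,
        0.0557 * x - 0.2040 * y + 1.0570 * z,
    )

    def encode(c):
        c = min(max(c, 0.0), 1.0)  # clamp out-of-gamut values
        c = 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055
        return round(c * 255)

    return tuple(encode(c) for c in linear)

print(lab_to_srgb(100, 0, 0))  # white: full lightness, no color
print(lab_to_srgb(0, 0, 0))    # black
print(lab_to_srgb(50, 40, 30)) # a hypothetical predicted color
```

Because L comes from the original picture, it can be kept at full resolution, and only the predicted A and B planes need to be scaled back up from the model’s output size.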

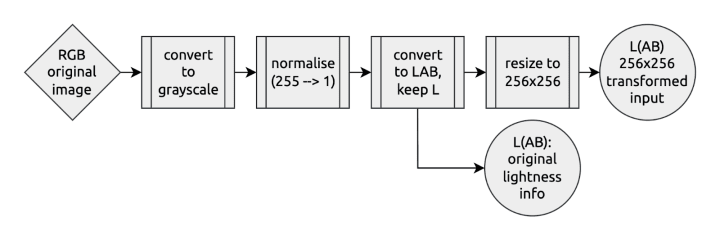

Here’s what the transformation pipeline looks like on the input:

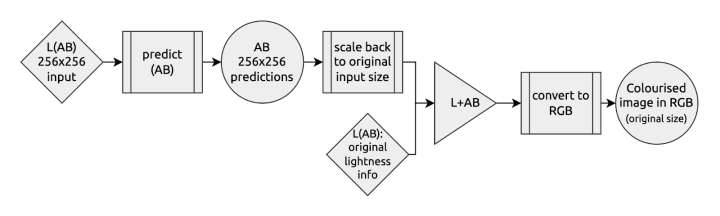

And the output looks something like this:

To containerize our Python Flask application, we use a first stage with all the development dependencies to prepare our execution environment. We copy it onto a fresh Ubuntu base image to run it, configuring the model server’s gRPC connection.

Frontend – Ubuntu-based NGINX container and Svelte app

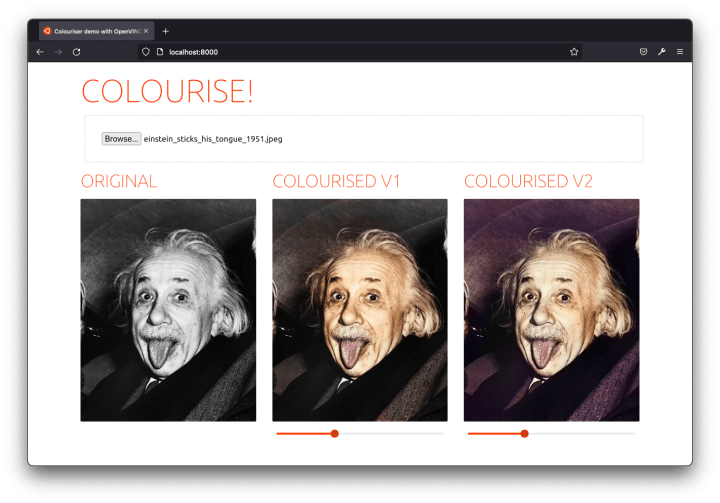

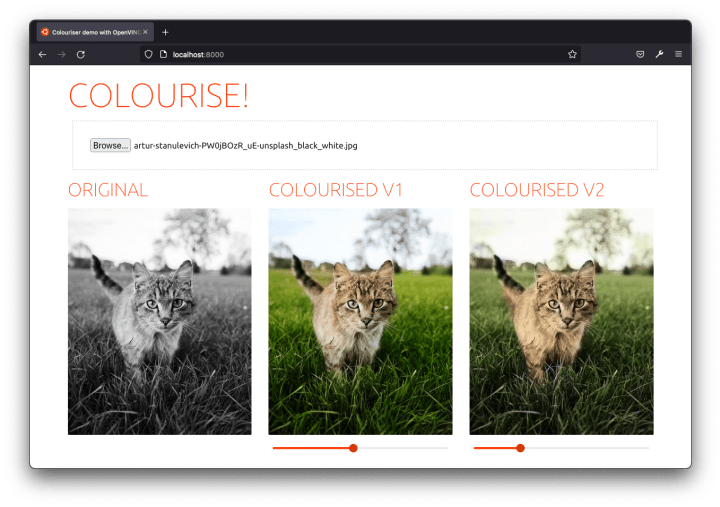

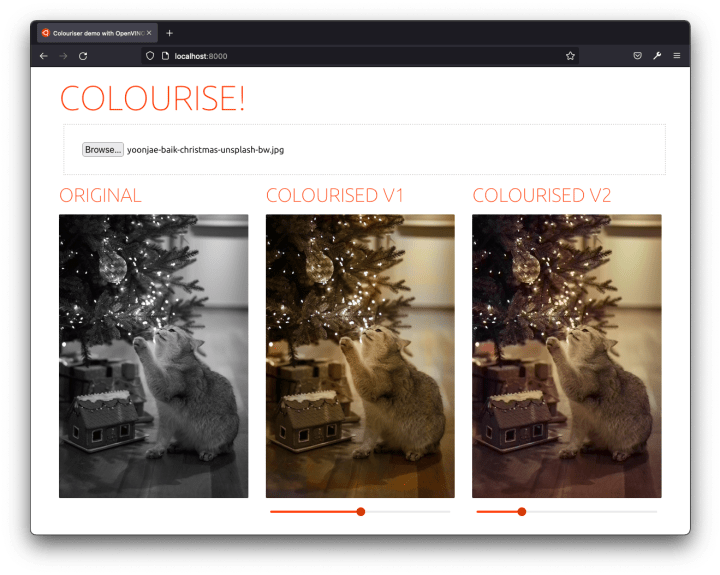

Finally, I put together a fancy UI for you to try the solution out. It’s an effortless single-page application with a file input field. It can display side-by-side the results from the two different colorization models.

I used Svelte to build the demo as a dynamic frontend. Below each colorization result, there’s even a saturation slider (using a CSS transformation) so that you can emphasize the predicted colors and better compare the before and after.

To ship this frontend application, we again use a Docker image. We first build the application using the Node base image. We then rebase it on top of the preconfigured NGINX LTS image maintained by Canonical. A reverse proxy on the frontend side serves as a passthrough to the backend on the /api endpoint to simplify the deployment configuration. We do that directly in an nginx.conf configuration file copied to the NGINX templates directory. The container image is preconfigured to use these template files with environment variables.

Deployment with Kubernetes

I hope you had the time to scan some black and white pictures because things are about to get serious(ly colorized).

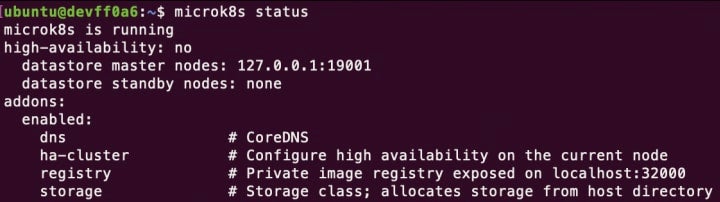

From the next section onward, we’ll assume you already have a running Kubernetes installation. If not, I encourage you to run the following steps or go through this MicroK8s tutorial.

    # https://microk8s.io/docs
    sudo snap install microk8s --classic
    # Add current user ($USER) to the microk8s group
    sudo usermod -a -G microk8s $USER && sudo chown -f -R $USER ~/.kube
    newgrp microk8s
    # Enable the DNS, Storage, and Registry addons required later
    microk8s enable dns storage registry
    # Wait for the cluster to be in a Ready state
    microk8s status --wait-ready
    # Create an alias to enable the `kubectl` command
    sudo snap alias microk8s.kubectl kubectl

Yes, you deployed a Kubernetes cluster in about two command lines.

Build the components’ Docker images

Every component comes with a Dockerfile to build itself in a standard environment and ship its deployment dependencies (read What are containers for more information). They all create an Ubuntu-based Docker image for a consistent developer experience.

Before deploying our colorizer app with Kubernetes, we need to build and push the components’ images. They need to be hosted in a registry accessible from our Kubernetes cluster. We will use the built-in local registry with MicroK8s. Depending on your network bandwidth, building and pushing the images will take a few minutes or more.

    sudo snap install docker
    cd ~ && git clone https://github.com/valentincanonical/colouriser-demo.git
    # Move into the cloned repository so the build contexts below resolve
    cd colouriser-demo
    # Backend
    docker build backend -t localhost:32000/backend:latest
    docker push localhost:32000/backend:latest
    # Model Server
    docker build modelserver -t localhost:32000/modelserver:latest
    docker push localhost:32000/modelserver:latest
    # Frontend
    docker build frontend -t localhost:32000/frontend:latest
    docker push localhost:32000/frontend:latest

Apply the Kubernetes configuration files

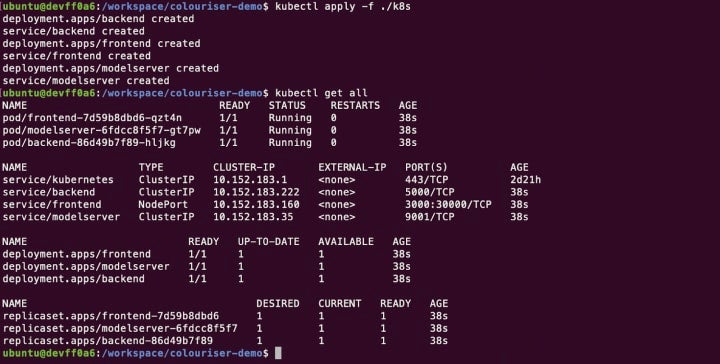

All the components are now ready for deployment. The Kubernetes configuration files are available as deployments and services YAML descriptors in the ./k8s folder of the demo repository. We can apply them all at once, in one command:

kubectl apply -f ./k8s

Give it a few minutes. You can watch the app being deployed with watch kubectl get pods. Of all the services, only the frontend has a specific NodePort configuration, which makes it publicly accessible through the Node IP address.

Once ready, you can access the demo app at http://localhost:30000/ (or replace localhost with a cluster node IP address if you’re using a remote cluster). Pick an image from your computer, and get it colorized!
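To illustrate what a NodePort configuration contains, here is a sketch that builds an equivalent Service manifest as a Python dictionary and prints it as JSON, a format kubectl also accepts. Only the 30000 node port comes from this article. The service name, selector label, and container port are assumptions and may not match the descriptors in the repository.

```python
import json

def nodeport_service(name, node_port, port=80, selector=None):
    """Build a Kubernetes Service manifest of type NodePort."""
    if not 30000 <= node_port <= 32767:
        raise ValueError("nodePort must be within the default 30000-32767 range")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": selector or {"app": name},
            "ports": [{"port": port, "targetPort": port, "nodePort": node_port}],
        },
    }

# Hypothetical service name and port 80; nodePort 30000 matches the URL above.
manifest = nodeport_service("frontend", 30000)
print(json.dumps(manifest, indent=2))
```

The node port is what you type in the browser, while the port and target port describe how traffic reaches the container inside the cluster.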

All in all, the project was pretty easy considering the task we accomplished. Thanks to Ubuntu containers, building each component’s image with multi-stage builds was a consistent and straightforward experience. And thanks to OpenVINO™ and the Open Model Zoo, serving a pre-trained model with excellent inference performance was a simple task accessible to all developers.

That’s a wrap!

You didn’t even have to share your pics over the Internet to get it done. Thanks for reading this article; I hope you enjoyed it. Feel free to reach out on socials. I’ll leave you with the last colorization example.

—

To learn more about Ubuntu, the magic of Docker images, or even how to make your own Dockerfiles, see below for related resources:

- Find more helpful Docker images on Docker Hub.

- Check out Ubuntu’s Docker Hub profile.

- Learn how to create your own Dockerfiles for Docker Desktop.

- Read about secure AI deployments at the edge.

- Learn more about Canonical’s maintained Ubuntu-based OCI images.

- Read Ubuntu’s take on running EKS locally.

- Get started and download Docker Desktop for Windows, Mac, or Linux.