When thinking about starting a Generative AI (GenAI) project, you might assume that Python is required to get started in this new space. However, if you’re already a Java developer, there’s no need to learn a new language. The Java ecosystem offers robust tools and libraries that make building GenAI applications both accessible and productive.

In this blog post, you’ll learn how to build a GenAI app using Java. We’ll walk through a step-by-step demo, built with Spring AI and Docker tools, that shows how retrieval-augmented generation (RAG) improves the model’s responses. Spring AI integrates with many model providers (for both chat and embeddings), vector databases, and more. In our example, we’ll use the OpenAI and Qdrant modules provided by the Spring AI project to take advantage of built-in support for these integrations. Additionally, instead of a cloud-hosted OpenAI model, we’ll use Docker Model Runner, which offers an OpenAI-compatible API and makes it easy to run AI models locally. We’ll automate the testing process using Testcontainers and Spring AI’s tools to ensure the LLM’s answers are contextually grounded in the documents we’ve provided. Finally, we’ll show you how to use Grafana for observability to check that our app behaves as designed.

Getting started

Let’s start building a sample application by going to Spring Initializr and choosing the following dependencies: Web, OpenAI, Qdrant Vector Database, and Testcontainers.

It’ll have two endpoints: a “/chat” endpoint that interacts directly with the model and a “/rag” endpoint that provides the model with additional context from documents stored in the vector database.

Configuring Docker Model Runner

Enable Docker Model Runner in your Docker Desktop or Docker Engine as described in the official documentation.

Then pull the following two models:

docker model pull ai/llama3.1

docker model pull ai/mxbai-embed-large

- ai/llama3.1 – chat model

- ai/mxbai-embed-large – embedding model

Both models are hosted on Docker Hub under the ai namespace. You can also pick a specific tag for a model; tags usually correspond to different quantizations of the model. If you don’t know which tag to pick, the default one is a good starting point.

Building the GenAI app

Let’s create a ChatController under /src/main/java/com/example, which will be our entry point to interact with the chat model:

    @RestController
    public class ChatController {

        private final ChatClient chatClient;

        public ChatController(ChatModel chatModel) {
            this.chatClient = ChatClient.builder(chatModel).build();
        }

        @GetMapping("/chat")
        public String generate(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
            return this.chatClient.prompt().user(message).call().content();
        }

    }

- ChatClient is the interface that provides the available operations to interact with the model. We’ll be injecting the actual model value (which model to use) via configuration properties.

- If no message query param is provided, then we’ll ask the model to tell a joke (as seen in the defaultValue).

Let’s configure our application to point to Docker Model Runner and use the “ai/llama3.1” model by adding the following properties to /src/test/resources/application.properties:

spring.ai.openai.base-url=http://localhost:12434/engines

spring.ai.openai.api-key=test

spring.ai.openai.chat.options.model=ai/llama3.1

spring.ai.openai.api-key is required by the framework, but we can use any value here since it is not needed for Docker Model Runner.
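To make these properties more concrete, here is a minimal sketch of the kind of HTTP request an OpenAI-compatible client sends. It assumes the chat completions path /v1/chat/completions is appended to the configured base URL, which is how OpenAI-style clients usually resolve the endpoint. ChatRequestSketch and its methods are hypothetical names for illustration, and the JSON body is reduced to the model and a single user message. The sketch only builds the request; it does not send it.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Illustrative only: builds an OpenAI-style chat completion request. */
final class ChatRequestSketch {

    // Assumption: the completions path is appended to the configured base URL.
    static final String CHAT_PATH = "/v1/chat/completions";

    /** Escapes the characters that must not appear raw inside a JSON string. */
    static String jsonEscape(String text) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Minimal request body: the model name and a single user message. */
    static String body(String model, String userMessage) {
        return "{\"model\":\"" + jsonEscape(model) + "\",\"messages\":[{\"role\":\"user\",\"content\":\""
                + jsonEscape(userMessage) + "\"}]}";
    }

    /** The API key is sent as a bearer token, even if the server ignores it. */
    static HttpRequest build(String baseUrl, String apiKey, String model, String userMessage) {
        return HttpRequest.newBuilder(URI.create(baseUrl + CHAT_PATH))
                .header("Content-Type", "application/json")
                .header("Authorization", "Bearer " + apiKey)
                .POST(HttpRequest.BodyPublishers.ofString(body(model, userMessage)))
                .build();
    }
}
```

With the values from our properties file, ChatRequestSketch.build("http://localhost:12434/engines", "test", "ai/llama3.1", "Tell me a joke") targets http://localhost:12434/engines/v1/chat/completions. Assuming Docker Model Runner is reachable at that address, the request could be sent with java.net.http.HttpClient.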

Let’s start our application by running ./mvnw spring-boot:test-run or ./gradlew bootTestRun and ask it about Testcontainers:

http :8080/chat message=="What’s testcontainers?"

Below is the answer provided by the LLM (ai/llama3.1):

Testcontainers is a fantastic and increasingly popular library for **local testing with containers**. Let's break down what it is, why it's useful, and how it works:

**What is Testcontainers?**

Testcontainers provides a way to run real, fully functional containerized services (like databases, message queues, web servers, etc.) directly within your tests. Instead of relying on mocked or stubbed versions of these services, you're using the *actual* services, which leads to much more realistic and reliable test results.

**Why Use Testcontainers?**

* **Realistic Testing:** This is the biggest benefit. Mocking databases or message queues can be brittle and difficult to maintain. Testcontainers provides a service that behaves exactly like the real thing, leading to tests that more accurately reflect how your application will perform in production.

* **Simplified Test Setup:** Forget about manually setting up and configuring databases or other services on your test machine. Testcontainers automatically handles the container creation, configuration, and cleanup for you.

* **Faster Tests:** Because the services are running locally, there’s no network latency involved, resulting in significantly faster test execution times.

* **Consistent Environments:** You eliminate the "it works on my machine" problem. Everyone running the tests will be using the same, pre-configured environment.

* **Supports Many Services:** Testcontainers supports a huge range of services, including:

* **Databases:** PostgreSQL, MySQL, MongoDB, Redis, Cassandra, MariaDB

* **Message Queues:** RabbitMQ, Kafka, ActiveMQ

* **Web Servers:** Tomcat, Jetty, H2 (for in-memory databases)

* **And many more!** The list is constantly growing.

**How Does It Work?**

1. **Client Library:** Testcontainers provides client libraries for various programming languages (Java, Python, JavaScript, Ruby, Go, .NET, and more).

2. **Container Run:** When you use the Testcontainers client library in your test, it automatically starts the specified container (e.g., a PostgreSQL database) in the background.

3. **Connection:** Your test code then connects to the running container using standard protocols (e.g., JDBC for PostgreSQL, HTTP for a web server).

4. **Test Execution:** You execute your tests as usual.

5. **Cleanup:** When the tests are finished, Testcontainers automatically shuts down the container, ensuring a clean state for the next test run.

**Example (Conceptual - Python):**

```python
from testcontainers.postgresql import PostgreSQLEnvironment

# Create a PostgreSQL environment
env = PostgreSQLEnvironment()

# Start the container
env.start()

# Connect to the database
db = env.db() # This creates a connection object to the running PostgreSQL container

# Perform database operations in your test
# ...

# Stop the container (cleanup)
env.shutdown()
```

**Key Concepts:**

* **Environment:** A Testcontainers environment is a configuration that defines which containers to run and how they should be configured.

* **Container:** A running containerized service (e.g., a database instance).

* **Connection:** An object that represents a connection to a specific container.

**Resources to Learn More:**

* **Official Website:** [https://testcontainers.io/](https://testcontainers.io/) - This is the best place to start.

* **GitHub Repository:** [https://github.com/testcontainers/testcontainers](https://github.com/testcontainers/testcontainers) - See the source code and contribute.

* **Documentation:** [https://testcontainers.io/docs/](https://testcontainers.io/docs/) - Comprehensive documentation with examples for various languages.

**In short, Testcontainers is a powerful tool that dramatically improves the quality and reliability of your local tests by allowing you to test against real, running containerized services.**

Do you want me to delve deeper into a specific aspect of Testcontainers, such as:

* A specific language implementation (e.g., Python)?

* A particular service it supports (e.g., PostgreSQL)?

* How to integrate it with a specific testing framework (e.g., JUnit, pytest)?

The answer contains some mistakes. For example, PostgreSQLEnvironment doesn’t exist in testcontainers-python, and the documentation links point to testcontainers.io, which doesn’t exist. In other words, the answer contains hallucinations.

Of course, LLM responses are non-deterministic, and since each model is trained until a certain cutoff date, the information may be outdated, and the answers might not be accurate.

To improve this situation, let’s provide the model with some curated context about Testcontainers!

We’ll create another controller, RagController, which will retrieve documents from a vector search database.

    @RestController
    public class RagController {

        private final ChatClient chatClient;

        private final VectorStore vectorStore;

        public RagController(ChatModel chatModel, VectorStore vectorStore) {
            this.chatClient = ChatClient.builder(chatModel).build();
            this.vectorStore = vectorStore;
        }

        @GetMapping("/rag")
        public String generate(@RequestParam(value = "message", defaultValue = "What's Testcontainers?") String message) {
            return callResponseSpec(this.chatClient, this.vectorStore, message).content();
        }

        static ChatClient.CallResponseSpec callResponseSpec(ChatClient chatClient, VectorStore vectorStore,
                String question) {
            QuestionAnswerAdvisor questionAnswerAdvisor = QuestionAnswerAdvisor.builder(vectorStore)
                .searchRequest(SearchRequest.builder().topK(1).build())
                .build();
            return chatClient.prompt().advisors(questionAnswerAdvisor).user(question).call();
        }

    }

Spring AI provides many advisors. In this example, we are going to use the QuestionAnswerAdvisor to perform the query against the vector search database. It takes care of all the individual integrations with the vector database.
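Conceptually, the advisor retrieves the most relevant documents and adds them to the prompt before the model is called. The following is a minimal sketch of that augmentation step in plain Java. The class name PromptAugmentationSketch and the template wording are assumptions made for illustration; Spring AI ships its own prompt template, which may differ.

```java
import java.util.List;

/** Illustrative only: shows the idea of adding retrieved context to a user question. */
final class PromptAugmentationSketch {

    /**
     * Appends the retrieved documents to the question.
     * The wording of this template is hypothetical, not Spring AI's.
     */
    static String augment(String question, List<String> retrievedDocs) {
        String context = String.join("\n", retrievedDocs);
        return question + "\n\n"
                + "Use only the context below to answer. If the context is not sufficient, say so.\n"
                + "---------------------\n"
                + context + "\n"
                + "---------------------";
    }
}
```

In our controller, topK(1) means a single document chunk would end up in the context section.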

Ingesting documents into the vector database

First, we need to load the relevant documents into the vector database. Under src/test/java/com/example, let’s create an IngestionConfiguration class:

    @TestConfiguration(proxyBeanMethods = false)
    public class IngestionConfiguration {

        @Value("classpath:/docs/testcontainers.txt")
        private Resource testcontainersDoc;

        @Bean
        ApplicationRunner init(VectorStore vectorStore) {
            return args -> {
                var javaTextReader = new TextReader(this.testcontainersDoc);
                javaTextReader.getCustomMetadata().put("language", "java");

                var tokenTextSplitter = new TokenTextSplitter();
                var testcontainersDocuments = tokenTextSplitter.apply(javaTextReader.get());

                vectorStore.add(testcontainersDocuments);
            };
        }

    }

The testcontainers.txt file under the /src/test/resources/docs directory contains the following Testcontainers-specific information. For a real-world use case, you would probably have a more extensive collection of documents.

Testcontainers is a library that provides easy and lightweight APIs for bootstrapping local development and test dependencies with real services wrapped in Docker containers. Using Testcontainers, you can write tests that depend on the same services you use in production without mocks or in-memory services.

Testcontainers provides modules for a wide range of commonly used infrastructure dependencies including relational databases, NoSQL datastores, search engines, message brokers, etc. See https://testcontainers.com/modules/ for a complete list.

Technology-specific modules are a higher-level abstraction on top of GenericContainer which help configure and run these technologies without any boilerplate, and make it easy to access their relevant parameters.

Official website: https://testcontainers.com/

Getting Started: https://testcontainers.com/getting-started/

Module Catalog: https://testcontainers.com/modules/
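Before a document like this is embedded, the ingestion step splits it into smaller chunks. The sketch below illustrates the idea with a simplified splitter that counts whitespace-separated words instead of model tokens. ChunkingSketch and its maxWords parameter are hypothetical; TokenTextSplitter works on tokens and has its own defaults, so treat this as an approximation of the concept rather than its implementation.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Illustrative only: word-based chunking as a stand-in for token-based splitting. */
final class ChunkingSketch {

    /** Splits text into chunks of at most maxWords whitespace-separated words. */
    static List<String> split(String text, int maxWords) {
        if (maxWords <= 0) {
            throw new IllegalArgumentException("maxWords must be positive");
        }
        List<String> chunks = new ArrayList<>();
        String trimmed = text.trim();
        if (trimmed.isEmpty()) {
            return chunks; // nothing to embed
        }
        String[] words = trimmed.split("\\s+");
        for (int start = 0; start < words.length; start += maxWords) {
            int end = Math.min(start + maxWords, words.length);
            chunks.add(String.join(" ", Arrays.copyOfRange(words, start, end)));
        }
        return chunks;
    }
}
```

Each resulting chunk would then be embedded and stored as its own entry in the vector database.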

Now, let’s add a few more properties to the src/test/resources/application.properties file:

spring.ai.openai.embedding.options.model=ai/mxbai-embed-large

spring.ai.vectorstore.qdrant.initialize-schema=true

spring.ai.vectorstore.qdrant.collection-name=test

ai/mxbai-embed-large is an embedding model that will be used to create the embeddings of the documents. They will be stored in the vector search database, in our case, Qdrant. Spring AI will initialize the Qdrant schema and use the collection named test.
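To give an intuition for what the vector database does with these embeddings, here is a small brute-force sketch that ranks stored vectors by cosine similarity to a query vector. It is an illustration under simplifying assumptions: the two-dimensional vectors and the names SimilaritySearchSketch, cosine, and topK are made up for this example, and a real vector database such as Qdrant relies on dedicated index structures rather than a linear scan.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

/** Illustrative only: brute-force nearest-neighbour lookup over toy embeddings. */
final class SimilaritySearchSketch {

    /** Cosine similarity: the dot product divided by the product of the vector lengths. */
    static double cosine(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("dimension mismatch");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0; // a zero vector has no direction to compare
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Returns the ids of the k stored vectors most similar to the query, best first. */
    static List<String> topK(float[] query, Map<String, float[]> store, int k) {
        List<Map.Entry<String, float[]>> ranked = new ArrayList<>(store.entrySet());
        ranked.sort(Comparator.comparingDouble(
                (Map.Entry<String, float[]> e) -> cosine(query, e.getValue())).reversed());
        List<String> ids = new ArrayList<>();
        for (int i = 0; i < Math.min(k, ranked.size()); i++) {
            ids.add(ranked.get(i).getKey());
        }
        return ids;
    }
}
```

In the real application, both the question and the document chunks are turned into vectors by the embedding model, and the vector store performs the lookup.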

Let’s update our TestDemoApplication class to add IngestionConfiguration.class:

    public class TestDemoApplication {

        public static void main(String[] args) {
            SpringApplication.from(DemoApplication::main)
                .with(TestcontainersConfiguration.class, IngestionConfiguration.class)
                .run(args);
        }

    }

Now we start our application by running ./mvnw spring-boot:test-run or ./gradlew bootTestRun and ask it again about Testcontainers:

http :8080/rag message=="What’s testcontainers?"

This time, the answer contains references from the docs we have provided and is more accurate.

Testcontainers is a library that helps you write tests for your applications by bootstrapping real services in Docker containers, rather than using mocks or in-memory services. This allows you to test your applications as they would run in production, but in a controlled and isolated environment.

It provides modules for commonly used infrastructure dependencies such as relational databases, NoSQL datastores, search engines, and message brokers.

If you have any specific questions about how to use Testcontainers or its features, I'd be happy to help.

Integration testing

Testing is a key part of software development. Fortunately, Testcontainers and Spring AI’s utilities support testing of GenAI applications. So far, we’ve been testing the application manually: starting it, sending requests to the endpoints, and verifying the correctness of the responses ourselves. Now, we’re going to automate this by writing an integration test that checks whether the answer provided by the LLM is grounded in the information from the documents we provided.

    @SpringBootTest(classes = { TestcontainersConfiguration.class, IngestionConfiguration.class },
            webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT)
    class RagControllerTest {

        @LocalServerPort
        private int port;

        @Autowired
        private VectorStore vectorStore;

        @Autowired
        private ChatClient.Builder chatClientBuilder;

        @Test
        void verifyTestcontainersAnswer() {
            var question = "Tell me about Testcontainers";
            var answer = retrieveAnswer(question);
            assertFactCheck(question, answer);
        }

        private String retrieveAnswer(String question) {
            RestClient restClient = RestClient.builder().baseUrl("http://localhost:%d".formatted(this.port)).build();
            return restClient.get().uri("/rag?message={question}", question).retrieve().body(String.class);
        }

        private void assertFactCheck(String question, String answer) {
            FactCheckingEvaluator factCheckingEvaluator = new FactCheckingEvaluator(this.chatClientBuilder);
            EvaluationResponse evaluate = factCheckingEvaluator.evaluate(new EvaluationRequest(docs(question), answer));
            assertThat(evaluate.isPass()).isTrue();
        }

        private List<Document> docs(String question) {
            var response = RagController
                .callResponseSpec(this.chatClientBuilder.build(), this.vectorStore, question)
                .chatResponse();
            return response.getMetadata().get(QuestionAnswerAdvisor.RETRIEVED_DOCUMENTS);
        }

    }

- Importing TestcontainersConfiguration provides the Qdrant container.

- Importing IngestionConfiguration loads the documents into the vector database.

- We’re going to use FactCheckingEvaluator to tell the chat model (ai/llama3.1) to check the answer provided by the LLM and verify it with the documents stored in the vector database.

Note: The integration test is using the same model we have declared in the previous steps. But we can definitely use a different model.
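To illustrate the idea behind this kind of evaluation, here is a minimal sketch of an LLM-as-judge check in plain Java: the judge model receives the documents and the answer, and its verdict is reduced to pass or fail. FactCheckSketch, the prompt wording, and the strict yes/no parsing are assumptions made for this example and are not taken from Spring AI’s FactCheckingEvaluator.

```java
/** Illustrative only: the shape of a fact-checking evaluation, without a real model call. */
final class FactCheckSketch {

    /** Hypothetical prompt asking a judge model whether the claim is backed by the document. */
    static String evaluationPrompt(String document, String claim) {
        return "Document:\n" + document
                + "\n\nClaim:\n" + claim
                + "\n\nIs the claim supported by the document? Answer yes or no.";
    }

    /** Treats only an explicit "yes" (ignoring case and surrounding whitespace) as a pass. */
    static boolean isPass(String modelVerdict) {
        return modelVerdict != null && modelVerdict.trim().equalsIgnoreCase("yes");
    }
}
```

Reading anything other than a clear "yes" as a failure keeps the check conservative, which seems a reasonable default for a test assertion.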

Automating your tests ensures consistency and reduces the risk of errors that often come with manual execution.

Observability with the Grafana LGTM Stack

Finally, let’s introduce some observability into our application. By introducing metrics and tracing, we can understand if our application is behaving as designed during development and in production.

Add the following dependencies to the pom.xml

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-otlp</artifactId>
    </dependency>
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-tracing-bridge-otel</artifactId>
    </dependency>
    <dependency>
        <groupId>io.opentelemetry</groupId>
        <artifactId>opentelemetry-exporter-otlp</artifactId>
    </dependency>
    <dependency>
        <groupId>org.testcontainers</groupId>
        <artifactId>grafana</artifactId>
        <scope>test</scope>
    </dependency>

Now, let’s create GrafanaContainerConfiguration under src/test/java/com/example.

    @TestConfiguration(proxyBeanMethods = false)
    public class GrafanaContainerConfiguration {

        @Bean
        @ServiceConnection
        LgtmStackContainer lgtmContainer() {
            return new LgtmStackContainer("grafana/otel-lgtm:0.11.4");
        }

    }

Grafana provides the grafana/otel-lgtm image, which starts Prometheus, Tempo, the OpenTelemetry Collector, and other related services, all combined into a single convenient Docker image.

For the sake of our demo, let’s add a couple of properties at /src/test/resources/application.properties to sample 100% of requests.

spring.application.name=demo

management.tracing.sampling.probability=1
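As a rough intuition for the sampling property, the sketch below shows a probability-based sampling decision: a value of 1 keeps every request and a value of 0 keeps none. SamplingSketch is a hypothetical helper that uses a plain random draw; real tracers may derive the decision differently, for example from the trace ID.

```java
import java.util.function.DoubleSupplier;

/** Illustrative only: a probability-based decision on whether to record a trace. */
final class SamplingSketch {

    /**
     * Returns true when a request should be traced.
     * Assumption: random supplies values in [0, 1), as Math.random() does.
     */
    static boolean shouldSample(double probability, DoubleSupplier random) {
        if (probability < 0 || probability > 1) {
            throw new IllegalArgumentException("probability must be between 0 and 1");
        }
        return random.getAsDouble() < probability;
    }
}
```

For example, SamplingSketch.shouldSample(1.0, Math::random) always returns true, which matches the 100% sampling we want for the demo.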

Update the TestDemoApplication class to include GrafanaContainerConfiguration.class

    public class TestDemoApplication {

        public static void main(String[] args) {
            SpringApplication.from(DemoApplication::main)
                .with(TestcontainersConfiguration.class, IngestionConfiguration.class, GrafanaContainerConfiguration.class)
                .run(args);
        }

    }

Now, run ./mvnw spring-boot:test-run or ./gradlew bootTestRun one more time, and perform a request.

http :8080/rag message=="What’s testcontainers?"

Then, look for the following text in logs.

o.t.grafana.LgtmStackContainer : Access to the Grafana dashboard:

http://localhost:64908

The port can be different for you, but clicking on it should open the Grafana dashboard. This is where you can query for metrics related to the model or vector search, and also see the traces.

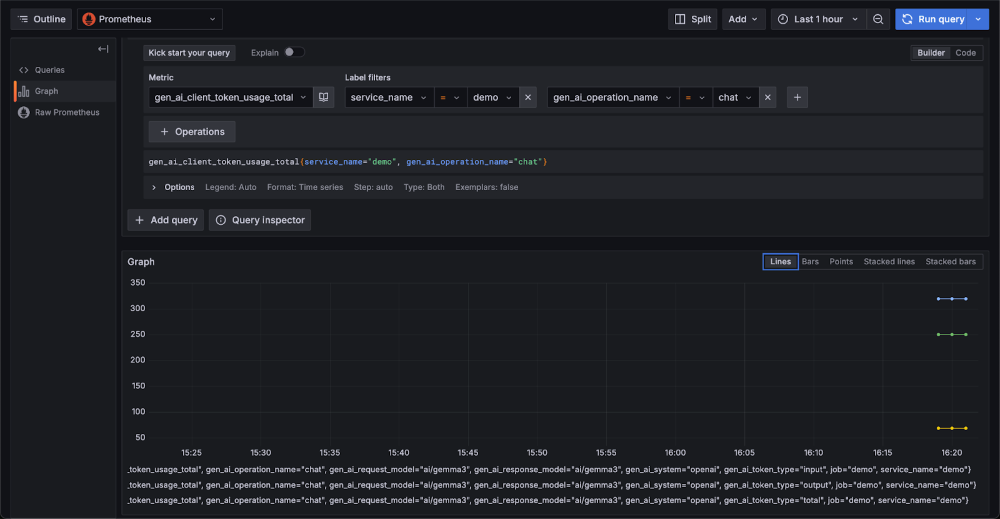

Figure 1: Grafana dashboard showing model metrics, vector search performance, and traces

We can also display the token usage metric used by the chat endpoint.

Figure 2: Grafana dashboard panel displaying token usage metrics for the chat endpoint

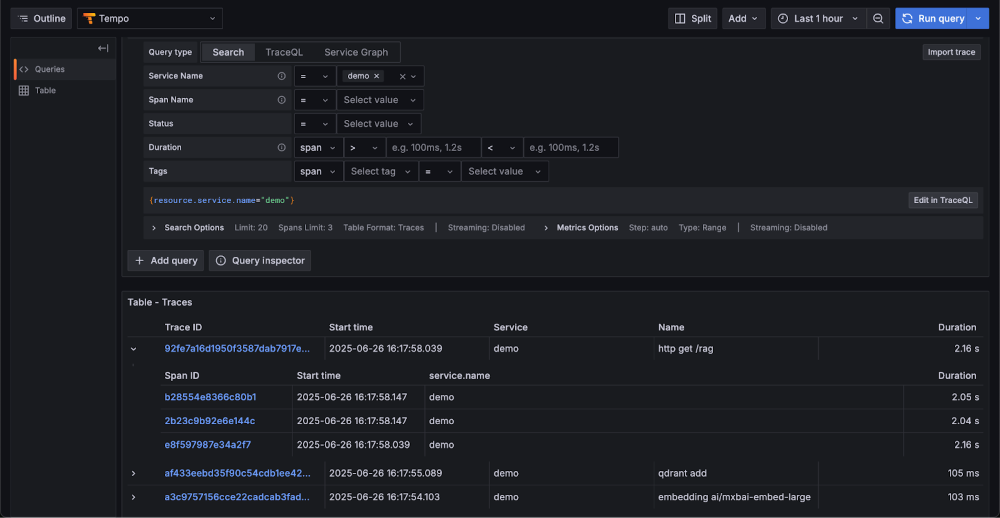

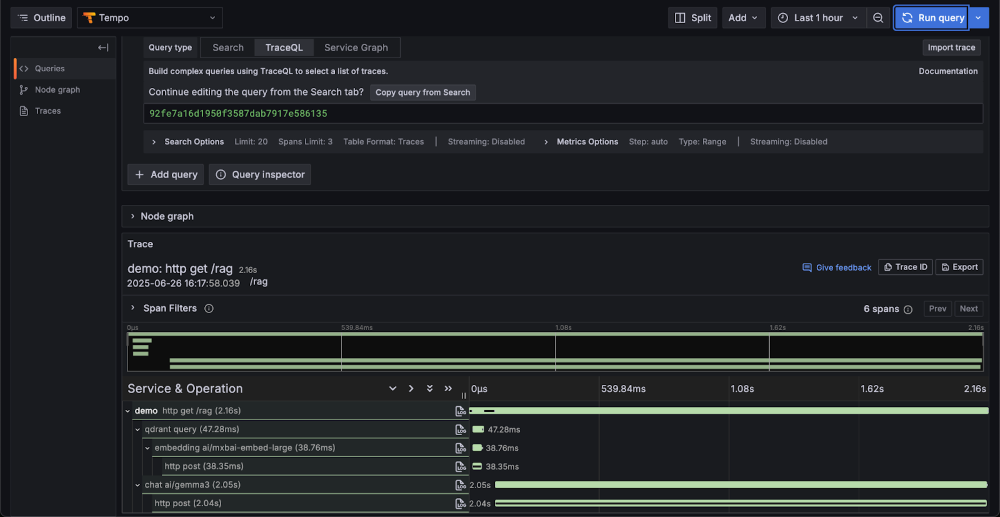

List traces for the service with name “demo”, and we can see a list of operations executed as part of this trace. You can use the trace ID with the name http get /rag to see the full control flow within the same HTTP request.

Figure 3: Grafana dashboard showing trace details for a /rag endpoint in a Java GenAI application

Conclusion

Docker offers powerful capabilities that complement the Spring AI project, allowing developers to build GenAI applications efficiently with Docker tools that they know and trust. It simplifies the startup of service dependencies, including Docker Model Runner, which exposes an OpenAI-compatible API for running local models. Testcontainers helps you quickly spin up integration tests that evaluate your app by providing lightweight containers for your services and dependencies. From development to testing, Docker and Spring AI have proven to be a reliable and productive combination for building modern AI-driven applications.

Learn more

- Get an inside look at the design architecture of the Docker Model Runner.

- Explore the story behind our model distribution specification.

- Read our quickstart guide to Docker Model Runner.

- Find documentation for Model Runner.

- Visit our new AI solution page.