At Docker, we have always believed in the power of community and collaboration. It reminds me of what Robert Axelrod said in The Evolution of Cooperation: “The key to doing well lies not in overcoming others, but in eliciting their cooperation.” And what better place for Docker Model Runner to foster this cooperation than Hugging Face, the well-known gathering place for the AI, ML, and data science community? We’re excited to share that developers can now select Docker Model Runner as the local inference engine for running models on Hugging Face, and filter for the models that Model Runner supports!

Of course, Docker Model Runner has supported pulling models directly from Hugging Face repositories for some time now:

```shell
docker model pull hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF
```
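Once pulled, a Hugging Face model can be used like any other Model Runner model. The following is a minimal sketch, assuming Docker Model Runner is installed and enabled locally; the prompt text is an arbitrary example, not something prescribed by Docker or Hugging Face:

```shell
# Model reference from the pull example: hf.co/<user>/<repository>
MODEL="hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF"

# Download the GGUF model from Hugging Face into the local Model Runner store
docker model pull "$MODEL"

# Check that the model now shows up among locally available models
docker model list

# Send a single prompt to the model; omitting the prompt starts an interactive chat instead
docker model run "$MODEL" "Explain what a container image is in one sentence."
```

Passing the prompt as an argument is convenient for scripting, while leaving it out is handy for exploring a model interactively.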

Local Inference with Model Runner on Hugging Face

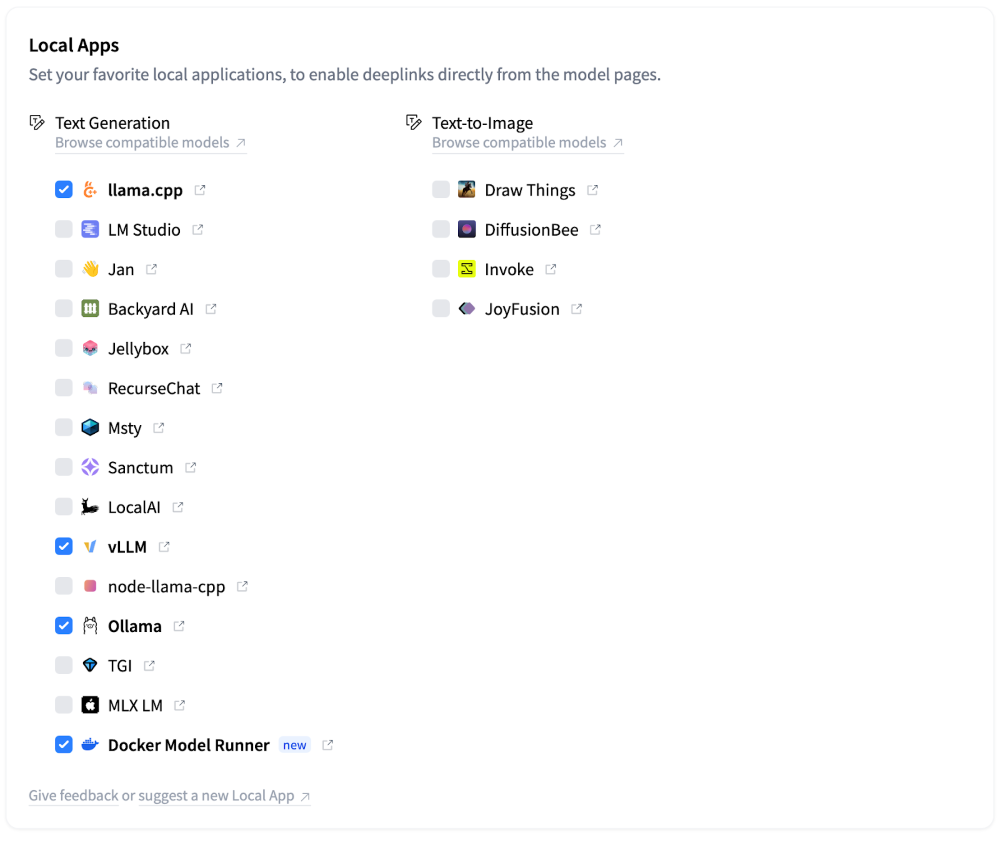

But so far, it has been cumbersome to rummage through the vast collection of models available at Hugging Face and find repositories that work with Docker Model Runner. But not anymore! Hugging Face now supports Docker as a Local Apps provider, so you can select it as the local inference engine to run models. And you don’t even have to configure it in your account; it is already selected as a default Local Apps provider for all users.

Figure 1: Docker Model Runner is a new inference engine available in Hugging Face for running local models.

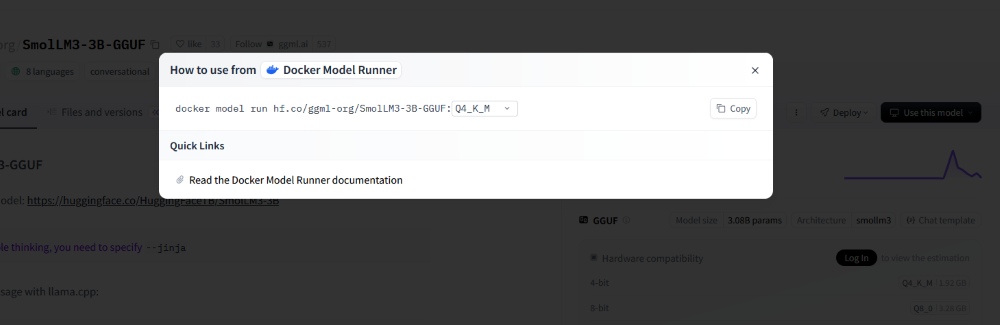

This makes running a model directly from HuggingFace as easy as visiting a repository page, selecting Docker Model Runner as the Local Apps provider, and executing the provided snippet:

Figure 2: Running models from Hugging Face using Docker Model Runner is now a breeze!

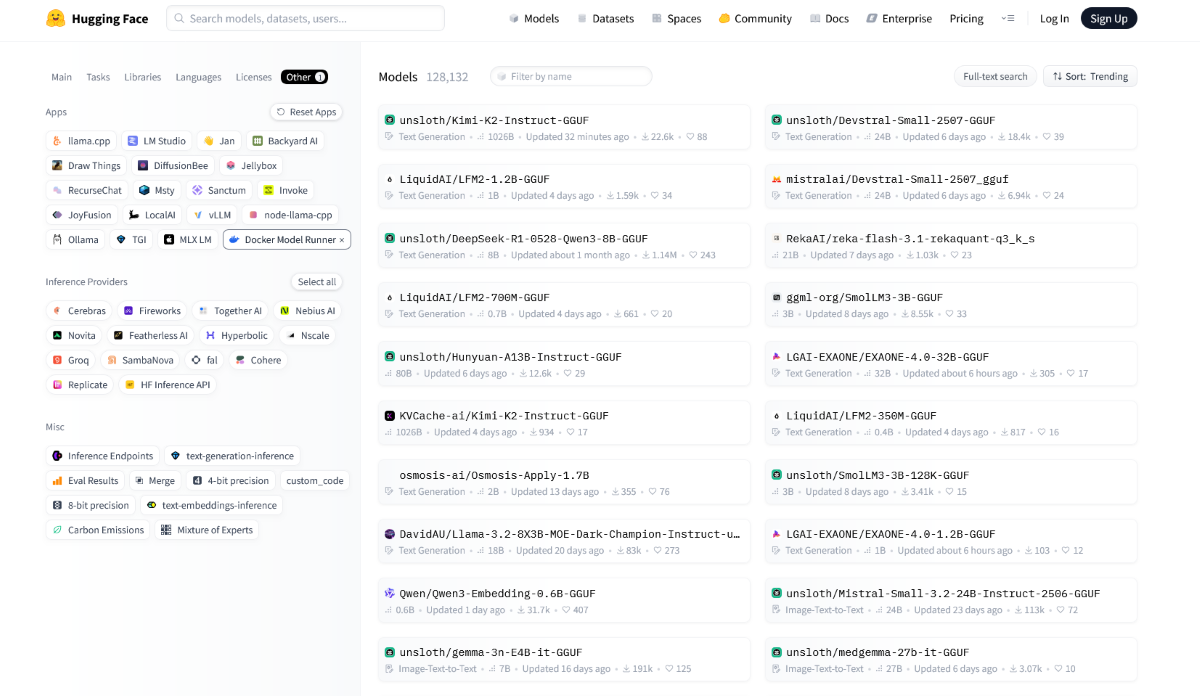

You can even get the list of all models supported by Docker Model Runner (meaning repositories containing models in GGUF format) through a search filter!

Figure 3: Easily discover models supported by Docker Model Runner with a search filter in Hugging Face

We are very happy that Hugging Face is now a first-class source for Docker Model Runner models, making model discovery as routine as pulling a container image. It’s a small change, but one that quietly shortens the distance between research and runnable code.

Conclusion

With Docker Model Runner now directly integrated into Hugging Face, running local inference just got a whole lot more convenient. Developers can filter for compatible models, pull them with a single command, and copy the run command straight from the Hugging Face UI by choosing Docker Model Runner as the Local Apps engine. This tighter integration makes finding and running a model feel as seamless as working with any other container workflow.

Coming back to Robert Axelrod and The Evolution of Cooperation: Docker Model Runner has been an open-source project from the very beginning, and we want to build it together with the community. So head over to GitHub, check out our repositories, file issues and suggestions, and let’s keep building the future together.

Learn more

- Sign up for the Docker Offload Beta and get 300 free minutes to run resource-intensive models in the cloud, right from your local workflow.

- Get an inside look at the design architecture of Docker Model Runner.

- Explore the story behind our model distribution specification.

- Read our quickstart guide to Docker Model Runner.

- Find documentation for Model Runner.

- Visit our new AI solution page.

- New to Docker? Create an account.